Pseudoscience

Pseudoscience consists of statements, beliefs, or practices that are claimed to be both scientific and factual but are incompatible with the scientific method.[1][Note 1] Pseudoscience is often characterized by contradictory, exaggerated or unfalsifiable claims; reliance on confirmation bias rather than rigorous attempts at refutation; lack of openness to evaluation by other experts; absence of systematic practices when developing hypotheses; and continued adherence long after the pseudoscientific hypotheses have been experimentally discredited. The term pseudoscience is considered pejorative,[4] because it suggests something is being presented as science inaccurately or even deceptively. Those described as practicing or advocating pseudoscience often dispute the characterization.[2]

The demarcation between science and pseudoscience has philosophical and scientific implications.[5] Differentiating science from pseudoscience has practical implications in the case of health care, expert testimony, environmental policies, and science education.[6] Distinguishing scientific facts and theories from pseudoscientific beliefs, such as those found in astrology, alchemy, alternative medicine, occult beliefs, religious beliefs, and creation science, is part of science education and scientific literacy.[6][7]

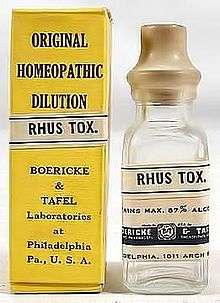

Pseudoscience can be harmful. For example, pseudoscientific anti-vaccine activism and promotion of homeopathic remedies as alternative disease treatments can result in people forgoing important medical treatment with demonstrable health benefits.[8]

Etymology

The word pseudoscience is derived from the Greek root pseudo, meaning "false",[9][10] and the English word science, from the Latin word scientia, meaning "knowledge". Although the term has been in use since at least the late 18th century (e.g., in 1796 by James Pettit Andrews in reference to alchemy[11][12]), the concept of pseudoscience as distinct from real or proper science seems to have become more widespread during the mid-19th century. Among the earliest uses of "pseudo-science" was in an 1844 article in the Northern Journal of Medicine, issue 387:

That opposite kind of innovation which pronounces what has been recognized as a branch of science, to have been a pseudo-science, composed merely of so-called facts, connected together by misapprehensions under the disguise of principles.

An earlier use of the term was in 1843 by the French physiologist François Magendie, who referred to phrenology as "a pseudo-science of the present day".[13][14] During the 20th century, the word was used pejoratively to describe explanations of phenomena which were claimed to be scientific, but which were not in fact supported by reliable experimental evidence.

- Dismissing the separate issue of intentional fraud—such as the Fox sisters’ “rappings” in the 1850s (Abbott, 2012)—the pejorative label pseudoscience distinguishes the scientific ‘us’, at one extreme, from the pseudo-scientific ‘them’, at the other, and asserts that ‘our’ beliefs, practices, theories, etc., by contrast with that of ‘the others’, are scientific. There are four criteria:

(a) the ‘pseudoscientific’ group asserts that its beliefs, practices, theories, etc., are ‘scientific’;

(b) the ‘pseudoscientific’ group claims that its allegedly established facts are justified true beliefs;

(c) the ‘pseudoscientific’ group asserts that its ‘established facts’ have been justified by genuine, rigorous, scientific method; and

(d) this assertion is false or deceptive: “it is not simply that subsequent evidence overturns established conclusions, but rather that the conclusions were never warranted in the first place” (Blum, 1978, p.12 [Yeates' emphasis]; also, see Moll, 1902, pp.44-47).[15]

From time to time, however, the usage of the word occurred in a more formal, technical manner in response to a perceived threat to individual and institutional security in a social and cultural setting.[16]

Relationship to science

Pseudoscience is differentiated from science because – although it claims to be science – pseudoscience does not adhere to accepted scientific standards, such as the scientific method, falsifiability of claims, and Mertonian norms.

Scientific method

A number of basic principles are accepted by scientists as standards for determining whether a body of knowledge, method, or practice is scientific. Experimental results should be reproducible and verified by other researchers.[18] These principles are intended to ensure experiments can be reproduced measurably given the same conditions, allowing further investigation to determine whether a hypothesis or theory related to given phenomena is valid and reliable. Standards require the scientific method to be applied throughout, and bias to be controlled for or eliminated through randomization, fair sampling procedures, blinding of studies, and other methods. All gathered data, including the experimental or environmental conditions, are expected to be documented for scrutiny and made available for peer review, allowing further experiments or studies to be conducted to confirm or falsify results. Statistical quantification of significance, confidence, and error[19] are also important tools for the scientific method.

Falsifiability

During the mid-20th century, the philosopher Karl Popper emphasized the criterion of falsifiability to distinguish science from nonscience.[20] Statements, hypotheses, or theories have falsifiability or refutability if there is the inherent possibility that they can be proven false, that is, if it is possible to conceive of an observation or an argument that negates them. Popper used astrology and psychoanalysis as examples of pseudoscience and Einstein's theory of relativity as an example of science. He subdivided nonscience into philosophical, mathematical, mythological, religious and metaphysical formulations on one hand, and pseudoscientific formulations on the other.[21]

Another example showing the need for a claim to be falsifiable appears in Carl Sagan's book The Demon-Haunted World, where he discusses an invisible dragon that he has in his garage. The point is made that there is no physical test to refute the claim of the presence of this dragon. Whatever test one thinks can be devised, there is a reason why it does not apply to the invisible dragon, so one can never prove that the initial claim is wrong. Sagan concludes: "Now, what's the difference between an invisible, incorporeal, floating dragon who spits heatless fire and no dragon at all?" He states that "your inability to invalidate my hypothesis is not at all the same thing as proving it true",[22] once again explaining that even if such a claim were true, it would be outside the realm of scientific inquiry.

Mertonian norms

In 1942, Robert K. Merton identified a set of five "norms" which he characterized as what makes a real science. If any of the norms were violated, Merton considered the enterprise to be nonscience. These are not broadly accepted by the scientific community. His norms were:

- Originality: The tests and research done must present something new to the scientific community.

- Detachment: The scientists' reasons for practicing this science must be simply for the expansion of their knowledge. The scientists should not have personal reasons to expect certain results.

- Universality: No person should be able to more easily obtain the information of a test than another person. Social class, religion, ethnicity, or any other personal factors should not be factors in someone's ability to receive or perform a type of science.

- Skepticism: Scientific facts must not be based on faith. One should always question every case and argument and constantly check for errors or invalid claims.

- Public accessibility: Any scientific knowledge one obtains should be made available to everyone. The results of any research should be published and shared with the scientific community.[23]

Refusal to acknowledge problems

In 1978, Paul Thagard proposed that pseudoscience is primarily distinguishable from science when it is less progressive than alternative theories over a long period of time, and its proponents fail to acknowledge or address problems with the theory.[24] In 1983, Mario Bunge suggested the categories of "belief fields" and "research fields" to help distinguish between pseudoscience and science, where the former is primarily personal and subjective and the latter involves a certain systematic method.[25] The 2018 book The Skeptics' Guide to the Universe by Steven Novella et al. lists hostility to criticism as one of the major features of pseudoscience.[26]

Criticism of the term

Philosophers of science such as Paul Feyerabend argued that a distinction between science and nonscience is neither possible nor desirable.[27][Note 2] Among the issues that can make the distinction difficult are the variable rates of evolution among the theories and methods of science in response to new data.[Note 3]

Larry Laudan has suggested pseudoscience has no scientific meaning and is mostly used to describe our emotions: "If we would stand up and be counted on the side of reason, we ought to drop terms like 'pseudo-science' and 'unscientific' from our vocabulary; they are just hollow phrases which do only emotive work for us".[30] Likewise, Richard McNally states, "The term 'pseudoscience' has become little more than an inflammatory buzzword for quickly dismissing one's opponents in media sound-bites" and "When therapeutic entrepreneurs make claims on behalf of their interventions, we should not waste our time trying to determine whether their interventions qualify as pseudoscientific. Rather, we should ask them: How do you know that your intervention works? What is your evidence?"[31]

Alternative definition

For philosophers Silvio Funtowicz and Jerome R. Ravetz, "pseudo-science may be defined as one where the uncertainty of its inputs must be suppressed, lest they render its outputs totally indeterminate". The definition, in the book Uncertainty and Quality in Science for Policy (p. 54),[32] alludes to the loss of craft skills in handling quantitative information, and to the bad practice of achieving precision in prediction (inference) only at the expense of ignoring uncertainty in the input which was used to formulate the prediction. This use of the term is common among practitioners of post-normal science. Understood in this way, pseudoscience can be fought using good practices to assess uncertainty in quantitative information, such as NUSAP and – in the case of mathematical modelling – sensitivity auditing.

History

The history of pseudoscience is the study of pseudoscientific theories over time. A pseudoscience is a set of ideas that presents itself as science, while it does not meet the criteria to be properly called such.[33][34]

Distinguishing between proper science and pseudoscience is sometimes difficult.[35] One proposal for demarcation between the two is the falsification criterion, attributed most notably to the philosopher Karl Popper.[36] In the history of science and the history of pseudoscience it can be especially difficult to separate the two, because some sciences developed from pseudosciences. An example of this transformation is the science of chemistry, which traces its origins to the pseudoscientific or pre-scientific study of alchemy.

The vast diversity in pseudosciences further complicates the history of science. Some modern pseudosciences, such as astrology and acupuncture, originated before the scientific era. Others developed as part of an ideology, such as Lysenkoism, or as a response to perceived threats to an ideology. Examples of this ideological process are creation science and intelligent design, which were developed in response to the scientific theory of evolution.[37]

Indicators of possible pseudoscience

A topic, practice, or body of knowledge might reasonably be termed pseudoscientific when it is presented as consistent with the norms of scientific research, but it demonstrably fails to meet these norms.[1][38]

Use of vague, exaggerated or untestable claims

- Assertion of scientific claims that are vague rather than precise, and that lack specific measurements.[39]

- Assertion of a claim with little or no explanatory power.[40]

- Failure to make use of operational definitions (i.e., publicly accessible definitions of the variables, terms, or objects of interest so that persons other than the definer can measure or test them independently)[Note 4] (See also: Reproducibility).

- Failure to make reasonable use of the principle of parsimony, i.e., failing to seek an explanation that requires the fewest possible additional assumptions when multiple viable explanations are possible (see: Occam's razor).[42]

- Use of obscurantist language, and use of apparently technical jargon in an effort to give claims the superficial trappings of science.

- Lack of boundary conditions: Most well-supported scientific theories possess well-articulated limitations under which the predicted phenomena do and do not apply.[43]

- Lack of effective controls, such as placebo and double-blind, in experimental design.

- Lack of understanding of basic and established principles of physics and engineering.[44]

Over-reliance on confirmation rather than refutation

- Assertions that do not allow the logical possibility that they can be shown to be false by observation or physical experiment (see also: Falsifiability).[20][45]

- Assertion of claims that a theory predicts something that it has not been shown to predict.[46] Scientific claims that do not confer any predictive power are considered at best "conjectures", or at worst "pseudoscience" (e.g., ignoratio elenchi).[47]

- Assertion that claims which have not been proven false must therefore be true, and vice versa (see: Argument from ignorance).[48]

- Over-reliance on testimonial, anecdotal evidence, or personal experience: This evidence may be useful for the context of discovery (i.e., hypothesis generation), but should not be used in the context of justification (e.g., statistical hypothesis testing).[49]

- Presentation of data that seems to support claims while suppressing or refusing to consider data that conflict with those claims.[29] This is an example of selection bias, a distortion of evidence or data that arises from the way that the data are collected. It is sometimes referred to as the selection effect.

- Promulgating to the status of facts excessive or untested claims that have been previously published elsewhere; an accumulation of such uncritical secondary reports, which do not otherwise contribute their own empirical investigation, is called the Woozle effect.[50]

- Reversed burden of proof: science places the burden of proof on those making a claim, not on the critic. "Pseudoscientific" arguments may neglect this principle and demand that skeptics demonstrate beyond a reasonable doubt that a claim (e.g., an assertion regarding the efficacy of a novel therapeutic technique) is false. It is essentially impossible to prove a universal negative, so this tactic incorrectly places the burden of proof on the skeptic rather than on the claimant.[51]

- Appeals to holism as opposed to reductionism: proponents of pseudoscientific claims, especially in organic medicine, alternative medicine, naturopathy and mental health, often resort to the "mantra of holism" to dismiss negative findings.[52]

Lack of openness to testing by other experts

- Evasion of peer review before publicizing results (termed "science by press conference"):[51][53][Note 5] Some proponents of ideas that contradict accepted scientific theories avoid subjecting their ideas to peer review, sometimes on the grounds that peer review is biased towards established paradigms, and sometimes on the grounds that assertions cannot be evaluated adequately using standard scientific methods. By remaining insulated from the peer review process, these proponents forgo the opportunity of corrective feedback from informed colleagues.[52]

- Some agencies, institutions, and publications that fund scientific research require authors to share data so others can evaluate a paper independently. Failure to provide adequate information for other researchers to reproduce the claims contributes to a lack of openness.[54]

- Appealing to the need for secrecy or proprietary knowledge when an independent review of data or methodology is requested.[54]

- Substantive debate on the evidence by knowledgeable proponents of all viewpoints is not encouraged.[55]

Absence of progress

- Failure to progress towards additional evidence of its claims.[45][Note 3] Terence Hines has identified astrology as a subject that has changed very little in the past two millennia.[43][24]

- Lack of self-correction: scientific research programmes make mistakes, but they tend to reduce these errors over time.[56] By contrast, ideas may be regarded as pseudoscientific because they have remained unaltered despite contradictory evidence. The work Scientists Confront Velikovsky (1976, Cornell University) also delves into these features in some detail, as does the work of Thomas Kuhn, e.g., The Structure of Scientific Revolutions (1962), which also discusses some of the items on the list of characteristics of pseudoscience.

- The statistical significance of supporting experimental results does not improve over time and is usually close to the cutoff for statistical significance. Normally, experimental techniques improve or the experiments are repeated, and this gives ever stronger evidence. If statistical significance does not improve, this typically shows the experiments have just been repeated until a success occurs due to chance variations.
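
The last indicator above can be illustrated with a little arithmetic. The following is a minimal sketch, not a method taken from the cited sources: it assumes each repetition is an independent test of an effect that does not actually exist, and that a result counts as a "success" when it falls below a conventional significance cutoff (0.05 here, an assumed value). The function name `chance_of_false_positive` is hypothetical and chosen only for illustration.

```python
def chance_of_false_positive(attempts: int, alpha: float = 0.05) -> float:
    """Probability that at least one of `attempts` independent tests of a
    non-existent effect passes the significance cutoff `alpha` by chance.

    Assumption: each test has probability `alpha` of a false positive,
    so the chance that all of them fail is (1 - alpha) ** attempts.
    """
    return 1.0 - (1.0 - alpha) ** attempts


# Show how the chance of a spurious "success" grows with repetition.
for attempts in (1, 5, 20, 60):
    probability = chance_of_false_positive(attempts)
    print(f"{attempts:>3} attempts -> {probability:.2f}")
```

Under these assumptions, twenty repetitions already give roughly a 64% chance of at least one apparently significant result, which is why a string of results hovering near the cutoff is weak evidence on its own.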

Personalization of issues

- Tight social groups and authoritarian personality, suppression of dissent and groupthink can enhance the adoption of beliefs that have no rational basis. In attempting to confirm their beliefs, the group tends to identify their critics as enemies.[57]

- Assertion of claims of a conspiracy on the part of the scientific community to suppress the results.[Note 6]

- Attacking the motives, character, morality, or competence of anyone who questions the claims (see Ad hominem fallacy).[57][Note 7]

Use of misleading language

- Creating scientific-sounding terms to persuade nonexperts to believe statements that may be false or meaningless: For example, a long-standing hoax refers to water by the rarely used formal name "dihydrogen monoxide" and describes it as the main constituent in most poisonous solutions to show how easily the general public can be misled.

- Using established terms in idiosyncratic ways, thereby demonstrating unfamiliarity with mainstream work in the discipline.

Prevalence of pseudoscientific beliefs

United States

A large percentage of the United States population lacks scientific literacy, not adequately understanding scientific principles and method.[Note 8][Note 9][60][Note 10] In the Journal of College Science Teaching, Art Hobson writes, "Pseudoscientific beliefs are surprisingly widespread in our culture even among public school science teachers and newspaper editors, and are closely related to scientific illiteracy."[62] However, a 10,000-student study in the same journal concluded there was no strong correlation between science knowledge and belief in pseudoscience.[63]

In his book The Demon-Haunted World, Carl Sagan discusses the government of China and the Chinese Communist Party's concern about Western pseudoscience developments and certain ancient Chinese practices in China. He sees pseudoscience occurring in the United States as part of a worldwide trend and suggests its causes, dangers, diagnosis and treatment may be universal.[64]

In 2006, the U.S. National Science Foundation (NSF) issued an executive summary of a paper on science and engineering which briefly discussed the prevalence of pseudoscience in modern times. It said, "belief in pseudoscience is widespread" and, referencing a Gallup Poll,[65] stated that the 10 commonly believed examples of paranormal phenomena listed in the poll were "pseudoscientific beliefs".[66] The items were "extrasensory perception (ESP), that houses can be haunted, ghosts, telepathy, clairvoyance, astrology, that people can communicate mentally with someone who has died, witches, reincarnation, and channelling".[66] Such beliefs in pseudoscience represent a lack of knowledge of how science works. The scientific community may attempt to communicate information about science out of concern for the public's susceptibility to unproven claims.[66] The National Science Foundation stated that pseudoscientific beliefs in the U.S. became more widespread during the 1990s, peaked about 2001, and have decreased slightly since, with pseudoscientific beliefs remaining common. According to the NSF report, there is a lack of knowledge of pseudoscientific issues in society and pseudoscientific practices are commonly followed.[67] Surveys indicate about a third of all adult Americans consider astrology to be scientific.[68][69][70]

Explanations

In a 1981 report Singer and Benassi wrote that pseudoscientific beliefs have their origin from at least four sources.[71]

- Common cognitive errors from personal experience.

- Erroneous sensationalistic mass media coverage.

- Sociocultural factors.

- Poor or erroneous science education.

A 1990 study by Eve and Dunn supported the findings of Singer and Benassi and found pseudoscientific belief being promoted by high school life science and biology teachers.[72]

Psychology

The psychology of pseudoscience attempts to explore and analyze pseudoscientific thinking by clarifying the distinction between what is considered scientific and what is considered pseudoscientific. The human proclivity for seeking confirmation rather than refutation (confirmation bias),[73] the tendency to hold comforting beliefs, and the tendency to overgeneralize have been proposed as reasons for pseudoscientific thinking. According to Beyerstein (1991), humans are prone to associations based on resemblances only, and often prone to misattribution in cause-effect thinking.[74]

Michael Shermer's theory of belief-dependent realism is driven by the belief that the brain is essentially a "belief engine" which scans data perceived by the senses and looks for patterns and meaning. There is also the tendency for the brain to create cognitive biases, as a result of inferences and assumptions made without logic and based on instinct – usually resulting in patterns in cognition. These tendencies of patternicity and agenticity are also driven "by a meta-bias called the bias blind spot, or the tendency to recognize the power of cognitive biases in other people but to be blind to their influence on our own beliefs".[75] Lindeman states that social motives (i.e., "to comprehend self and the world, to have a sense of control over outcomes, to belong, to find the world benevolent and to maintain one's self-esteem") are often "more easily" fulfilled by pseudoscience than by scientific information. Furthermore, pseudoscientific explanations are generally not analyzed rationally, but instead experientially. Operating within a different set of rules compared to rational thinking, experiential thinking regards an explanation as valid if the explanation is "personally functional, satisfying and sufficient", offering a description of the world that may be more personal than can be provided by science and reducing the amount of potential work involved in understanding complex events and outcomes.[76]

Education and scientific literacy

There is a trend to believe in pseudoscience more than scientific evidence.[77] Some people believe the prevalence of pseudoscientific beliefs is due to widespread "scientific illiteracy".[78] Individuals lacking scientific literacy are more susceptible to wishful thinking, since they are likely to turn to immediate gratification powered by System 1, our default operating system which requires little to no effort. This system encourages one to accept the conclusions they believe, and reject the ones they do not. Further analysis of complex pseudoscientific phenomena requires System 2, which follows rules, compares objects along multiple dimensions and weighs options. These two systems have several other differences which are further discussed in the dual-process theory. The scientific and secular systems of morality and meaning are generally unsatisfying to most people. Humans are, by nature, a forward-minded species pursuing greater avenues of happiness and satisfaction, but we are all too frequently willing to grasp at unrealistic promises of a better life.[79]

Psychology has much to discuss about pseudoscientific thinking, as it is the illusory perceptions of causality and effectiveness held by numerous individuals that need to be illuminated. Research suggests that illusory thinking occurs in most people when they are exposed to certain circumstances, such as reading a book, seeing an advertisement, or hearing the testimony of others, and that these illusions are the basis of pseudoscientific beliefs. It is assumed that illusions are not unusual, and given the right conditions, illusions are able to occur systematically even in normal emotional situations. One of the things pseudoscience believers quibble most about is that academic science usually treats them as fools. Minimizing these illusions in the real world is not simple.[80] To this aim, designing evidence-based educational programs can be effective to help people identify and reduce their own illusions.[80]

Boundaries with science

Classification

Philosophers classify types of knowledge. In English, the word science is used to indicate specifically the natural sciences and related fields, which are called the social sciences.[81] Different philosophers of science may disagree on the exact limits – for example, is mathematics a formal science that is closer to the empirical ones, or is pure mathematics closer to the philosophical study of logic and therefore not a science?[82] – but all agree that all of the ideas that are not scientific are non-scientific. The large category of non-science includes all matters outside the natural and social sciences, such as the study of history, metaphysics, religion, art, and the humanities.[81] Dividing the category again, unscientific claims are a subset of the large category of non-scientific claims. This category specifically includes all matters that are directly opposed to good science.[81] Un-science includes both "bad science" (such as an error made in a good-faith attempt at learning something about the natural world) and pseudoscience.[81] Thus pseudoscience is a subset of un-science, and un-science, in turn, is a subset of non-science.

Science is also distinguishable from revelation, theology, or spirituality in that it offers insight into the physical world obtained by empirical research and testing.[83][84] The most notable disputes concern the evolution of living organisms, the idea of common descent, the geologic history of the Earth, the formation of the solar system, and the origin of the universe.[85] Systems of belief that derive from divine or inspired knowledge are not considered pseudoscience if they do not claim either to be scientific or to overturn well-established science. Moreover, some specific religious claims, such as the power of intercessory prayer to heal the sick, although they may be based on untestable beliefs, can be tested by the scientific method.

Some statements and common beliefs of popular science may not meet the criteria of science. "Pop" science may blur the divide between science and pseudoscience among the general public, and may also involve science fiction.[86] Indeed, pop science is disseminated to, and can also easily emanate from, persons not accountable to scientific methodology and expert peer review.

If claims of a given field can be tested experimentally and standards are upheld, it is not pseudoscience, regardless of how odd, astonishing, or counterintuitive those claims are. If claims made are inconsistent with existing experimental results or established theory, but the method is sound, caution should be used, since science consists of testing hypotheses which may turn out to be false. In such a case, the work may be better described as ideas that are "not yet generally accepted". Protoscience is a term sometimes used to describe a hypothesis that has not yet been tested adequately by the scientific method, but which is otherwise consistent with existing science or which, where inconsistent, offers a reasonable account of the inconsistency. It may also describe the transition from a body of practical knowledge into a scientific field.[20]

Philosophy

Karl Popper stated it is insufficient to distinguish science from pseudoscience, or from metaphysics (such as the philosophical question of what existence means), by the criterion of rigorous adherence to the empirical method, which is essentially inductive, based on observation or experimentation.[40] He proposed a method to distinguish between genuine empirical, nonempirical or even pseudoempirical methods. The latter case was exemplified by astrology, which appeals to observation and experimentation. While it had astonishing empirical evidence based on observation, on horoscopes and biographies, it crucially failed to use acceptable scientific standards.[40] Popper proposed falsifiability as an important criterion in distinguishing science from pseudoscience.

To demonstrate this point, Popper[40] gave two cases of human behavior and typical explanations from Sigmund Freud and Alfred Adler's theories: "that of a man who pushes a child into the water with the intention of drowning it; and that of a man who sacrifices his life in an attempt to save the child."[40] From Freud's perspective, the first man would have suffered from psychological repression, probably originating from an Oedipus complex, whereas the second man had attained sublimation. From Adler's perspective, both men suffered from feelings of inferiority and had to prove themselves, which drove the first to commit the crime and the second to rescue the child. Popper was not able to find any counterexamples of human behavior in which the behavior could not be explained in the terms of Adler's or Freud's theory. Popper argued[40] that the fact that observation always fitted or confirmed the theory, rather than being its strength, was actually its weakness. In contrast, Popper[40] gave the example of Einstein's gravitational theory, which predicted "light must be attracted by heavy bodies (such as the Sun), precisely as material bodies were attracted."[40] Following from this, stars closer to the Sun would appear to have moved a small distance away from the Sun, and away from each other. This prediction was particularly striking to Popper because it involved considerable risk. The brightness of the Sun prevented this effect from being observed under normal circumstances, so photographs had to be taken during an eclipse and compared to photographs taken at night. Popper states, "If observation shows that the predicted effect is definitely absent, then the theory is simply refuted."[40] Popper summed up his criterion for the scientific status of a theory as depending on its falsifiability, refutability, or testability.

Paul R. Thagard used astrology as a case study to distinguish science from pseudoscience and proposed principles and criteria to delineate them.[87] First, astrology has not progressed: it has neither been updated nor gained any explanatory power since Ptolemy. Second, it has ignored outstanding problems such as the precession of the equinoxes in astronomy. Third, alternative theories of personality and behavior have grown progressively to encompass explanations of phenomena which astrology statically attributes to heavenly forces. Fourth, astrologers have remained uninterested in furthering the theory to deal with outstanding problems or in critically evaluating the theory in relation to other theories. Thagard intended this criterion to be extended to areas other than astrology. He believed it would delineate as pseudoscientific such practices as witchcraft and pyramidology, while leaving physics, chemistry and biology in the realm of science. Biorhythms, which like astrology relied uncritically on birth dates, did not meet the criterion of pseudoscience at the time because there were no alternative explanations for the same observations. The use of this criterion has the consequence that a theory can be scientific at one time and pseudoscientific at a later time.[87]

In the philosophy and history of science, Imre Lakatos stresses the social and political importance of the demarcation problem, the normative methodological problem of distinguishing between science and pseudoscience. His distinctive historical analysis of scientific methodology based on research programmes suggests: "scientists regard the successful theoretical prediction of stunning novel facts – such as the return of Halley's comet or the gravitational bending of light rays – as what demarcates good scientific theories from pseudo-scientific and degenerate theories, and in spite of all scientific theories being forever confronted by 'an ocean of counterexamples'".[5] Lakatos offers a "novel fallibilist analysis of the development of Newton's celestial dynamics, [his] favourite historical example of his methodology" and argues in light of this historical turn, that his account answers for certain inadequacies in those of Karl Popper and Thomas Kuhn.[5] "Nonetheless, Lakatos did recognize the force of Kuhn's historical criticism of Popper – all important theories have been surrounded by an 'ocean of anomalies', which on a falsificationist view would require the rejection of the theory outright...Lakatos sought to reconcile the rationalism of Popperian falsificationism with what seemed to be its own refutation by history".[88]

Many philosophers have tried to solve the problem of demarcation in the following terms: a statement constitutes knowledge if sufficiently many people believe it sufficiently strongly. But the history of thought shows us that many people were totally committed to absurd beliefs. If the strengths of beliefs were a hallmark of knowledge, we should have to rank some tales about demons, angels, devils, and of heaven and hell as knowledge. Scientists, on the other hand, are very sceptical even of their best theories. Newton's is the most powerful theory science has yet produced, but Newton himself never believed that bodies attract each other at a distance. So no degree of commitment to beliefs makes them knowledge. Indeed, the hallmark of scientific behaviour is a certain scepticism even towards one's most cherished theories. Blind commitment to a theory is not an intellectual virtue: it is an intellectual crime.

Thus a statement may be pseudoscientific even if it is eminently 'plausible' and everybody believes in it, and it may be scientifically valuable even if it is unbelievable and nobody believes in it. A theory may even be of supreme scientific value even if no one understands it, let alone believes in it.[5]

— Imre Lakatos, Science and Pseudoscience

The boundary between science and pseudoscience is disputed and difficult to determine analytically, even after more than a century of study by philosophers of science and scientists, and despite some basic agreements on the fundamentals of the scientific method.[1][89][90] The concept of pseudoscience rests on an understanding that the scientific method has been misrepresented or misapplied with respect to a given theory, but many philosophers of science maintain that different kinds of methods are held as appropriate across different fields and different eras of human history. According to Lakatos, the typical descriptive unit of great scientific achievements is not an isolated hypothesis but "a powerful problem-solving machinery, which, with the help of sophisticated mathematical techniques, digests anomalies and even turns them into positive evidence".[5]

To Popper, pseudoscience uses induction to generate theories, and only performs experiments to seek to verify them. To Popper, falsifiability is what determines the scientific status of a theory. Taking a historical approach, Kuhn observed that scientists did not follow Popper's rule, and might ignore falsifying data, unless overwhelming. To Kuhn, puzzle-solving within a paradigm is science. Lakatos attempted to resolve this debate, by suggesting history shows that science occurs in research programmes, competing according to how progressive they are. The leading idea of a programme could evolve, driven by its heuristic to make predictions that can be supported by evidence. Feyerabend claimed that Lakatos was selective in his examples, and the whole history of science shows there is no universal rule of scientific method, and imposing one on the scientific community impedes progress.[91]

— David Newbold and Julia Roberts, "An analysis of the demarcation problem in science and its application to therapeutic touch theory" in International Journal of Nursing Practice, Vol. 13

Laudan maintained that the demarcation between science and non-science was a pseudo-problem, preferring to focus on the more general distinction between reliable and unreliable knowledge.[92]

[Feyerabend] regards Lakatos's view as being closet anarchism disguised as methodological rationalism. Feyerabend's claim was not that standard methodological rules should never be obeyed, but rather that sometimes progress is made by abandoning them. In the absence of a generally accepted rule, there is a need for alternative methods of persuasion. According to Feyerabend, Galileo employed stylistic and rhetorical techniques to convince his reader, while he also wrote in Italian rather than Latin and directed his arguments to those already temperamentally inclined to accept them.[88]

— Alexander Bird, "The Historical Turn in the Philosophy of Science" in Routledge Companion to the Philosophy of Science

Politics, health, and education

Political implications

The demarcation problem between science and pseudoscience brings up debate in the realms of science, philosophy and politics. Imre Lakatos, for instance, points out that the Communist Party of the Soviet Union at one point declared Mendelian genetics pseudoscientific and had its advocates, including well-established scientists such as Nikolai Vavilov, sent to a Gulag. He also argues that the "liberal Establishment of the West" denies freedom of speech to topics it regards as pseudoscience, particularly where they run up against social mores.[5]

Something becomes pseudoscientific when science cannot be separated from ideology; when scientists misrepresent scientific findings to gain publicity; when politicians, journalists and a nation's intellectual elite distort the facts of science for short-term political gain; or when powerful public figures conflate causation and cofactors through clever wordplay. These practices reduce the authority, value, integrity and independence of science in society.[93]

Health and education implications

Distinguishing science from pseudoscience has practical implications in the case of health care, expert testimony, environmental policies, and science education. Treatments with a patina of scientific authority that have not been subjected to actual scientific testing may be ineffective, expensive and dangerous to patients, and may confuse health providers, insurers, government decision makers and the public as to what treatments are appropriate. Claims advanced by pseudoscience may result in government officials and educators making bad decisions in selecting curricula.[Note 11]

The extent to which students acquire a range of social and cognitive thinking skills related to the proper usage of science and technology determines whether they are scientifically literate. Education in the sciences encounters new dimensions with the changing landscape of science and technology, a fast-changing culture and a knowledge-driven era. A reinvented school science curriculum is one that prepares students to contend with the changing influence of science and technology on human welfare. Scientific literacy, which allows a person to distinguish science from pseudosciences such as astrology, is among the attributes that enable students to adapt to the changing world. Its characteristics are embedded in a curriculum where students are engaged in resolving problems, conducting investigations, or developing projects.[7]

Friedman lists reasons why most scientists avoid teaching about pseudoscience, including that paying undue attention to pseudoscience could dignify it.[94]

On the other hand, Park emphasizes how pseudoscience can be a threat to society and considers that scientists have a responsibility to teach how to distinguish science from pseudoscience.[95]

Pseudosciences such as homeopathy, even if generally benign, are used by charlatans. This poses a serious issue because it enables incompetent practitioners to administer health care. True-believing zealots may pose a more serious threat than typical con men because of their devotion to homeopathy's ideology. Irrational health care is not harmless, and it is careless to create patient confidence in pseudomedicine.[96]

On 8 December 2016, Michael V. LeVine, writing in Business Insider, pointed out the dangers posed by the Natural News website: "Snake-oil salesmen have pushed false cures since the dawn of medicine, and now websites like Natural News flood social media with dangerous anti-pharmaceutical, anti-vaccination and anti-GMO pseudoscience that puts millions at risk of contracting preventable illnesses."[97]

The anti-vaccine movement has persuaded a large number of parents not to vaccinate their children, citing pseudoscientific research that links childhood vaccines with the onset of autism.[98] This research includes the study by Andrew Wakefield, which claimed that a combination of gastrointestinal disease and developmental regression, which are often seen in children with ASD, occurred within two weeks of receiving vaccines.[99][100] The study was eventually retracted by its publisher, and Wakefield was stripped of his license to practice medicine.[98]

Notes

- Definition:

- "A pretended or spurious science; a collection of related beliefs about the world mistakenly regarded as being based on scientific method or as having the status that scientific truths now have". Oxford English Dictionary, second edition 1989.

- "Many writers on pseudoscience have emphasized that pseudoscience is non-science posing as science. The foremost modern classic on the subject (Gardner 1957) bears the title Fads and Fallacies in the Name of Science. According to Brian Baigrie (1988, 438), '[w]hat is objectionable about these beliefs is that they masquerade as genuinely scientific ones.' These and many other authors assume that to be pseudoscientific, an activity or a teaching has to satisfy the following two criteria (Hansson 1996): (1) it is not scientific, and (2) its major proponents try to create the impression that it is scientific."[2]

- '"claims presented so that they appear [to be] scientific even though they lack supporting evidence and plausibility"(p. 33). In contrast, science is "a set of methods designed to describe and interpret observed and inferred phenomena, past or present, and aimed at building a testable body of knowledge open to rejection or confirmation"(p. 17)'[3] (this was the definition adopted by the National Science Foundation)

- 'A particularly radical reinterpretation of science comes from Paul Feyerabend, "the worst enemy of science"... Like Lakatos, Feyerabend was also a student under Popper. In an interview with Feyerabend in Science, [he says] "Equal weight... should be given to competing avenues of knowledge such as astrology, acupuncture, and witchcraft..."'[28]

- "We can now propose the following principle of demarcation: A theory or discipline which purports to be scientific is pseudoscientific if and only if: it has been less progressive than alternative theories over a long period of time, and faces many unsolved problems; but the community of practitioners makes little attempt to develop the theory towards solutions of the problems, shows no concern for attempts to evaluate the theory in relation to others, and is selective in considering confirmations and non confirmations."[29]

- 'Most terms in theoretical physics, for example, do enjoy at least some distinct connections with observables, but not of the simple sort that would permit operational definitions in terms of these observables. [..] If a restriction in favor of operational definitions were to be followed, therefore, most of theoretical physics would have to be dismissed as meaningless pseudoscience!'[41]

- For an opposing perspective, e.g. Chapter 5 of Suppression Stories by Brian Martin (Wollongong: Fund for Intellectual Dissent, 1997), pp. 69–83.

- e.g. archivefreedom.org, which claims that "The list of suppressed scientists even includes Nobel Laureates!"

- e.g. Philosophy 103: Introduction to Logic Argumentum Ad Hominem.

- "Surveys conducted in the United States and Europe reveal that many citizens do not have a firm grasp of basic scientific facts and concepts, nor do they have an understanding of the scientific process. In addition, belief in pseudoscience (an indicator of scientific illiteracy) seems to be widespread among Americans and Europeans."[58]

- "A new national survey commissioned by the California Academy of Sciences and conducted by Harris Interactive® reveals that the U.S. public is unable to pass even a basic scientific literacy test."[59]

- "In a survey released earlier this year, Miller and colleagues found that about 28 percent of American adults qualified as scientifically literate, which is an increase of about 10 percent from the late 1980s and early 1990s."[61]

- "From a practical point of view, the distinction is important for decision guidance in both private and public life. Since science is our most reliable source of knowledge in a wide variety of areas, we need to distinguish scientific knowledge from its look-alikes. Due to the high status of science in present-day society, attempts to exaggerate the scientific status of various claims, teachings, and products are common enough to make the demarcation issue serious. For example, creation science may replace evolution in studies of biology."[6]

References

- Cover JA, Curd M, eds. (1998), Philosophy of Science: The Central Issues, pp. 1–82

- Hansson SO (2008), "Science and Pseudoscience", Stanford Encyclopedia of Philosophy, Metaphysics Research Lab, Stanford University, Section 2: The "science" of pseudoscience

- Shermer (1997)

- Frietsch U (7 April 2015). "The boundaries of science / pseudoscience". European History Online (EGO). Archived from the original on 15 April 2017. Retrieved 15 April 2017.

- Lakatos I (1973), Science and Pseudoscience, The London School of Economics and Political Science, Dept of Philosophy, Logic and Scientific Method, (archive of transcript), archived from the original (mp3) on 25 July 2011

- Hansson (2008), Section 1: The purpose of demarcations

- Hurd PD (June 1998). "Scientific literacy: New minds for a changing world". Science Education. 82 (3): 407–16. Bibcode:1998SciEd..82..407H. doi:10.1002/(SICI)1098-237X(199806)82:3<407::AID-SCE6>3.0.CO;2-G.(subscription required)

- Vyse, Stuart (10 July 2019). "What Should Become of a Monument to Pseudoscience?". Skeptical Inquirer. Center for Inquiry. Retrieved 1 December 2019.

- "pseudo", The Free Dictionary, Farlex, Inc., 2015

- "Online Etymology Dictionary". Douglas Harper. 2015.

- "pseudoscience". Oxford English Dictionary (3rd ed.). Oxford University Press. September 2005. (Subscription or UK public library membership required.)

- Andrews & Henry (1796), p. 87

- Magendie F (1843). An Elementary Treatise on Human Physiology. John Revere (5th ed.). New York: Harper. p. 150.

- Lamont, Peter (2013). Extraordinary Beliefs: A Historical Approach to a Psychological Problem. Cambridge University Press. p. 58. ISBN 978-1107019331.

When the eminent French physiologist, François Magendie, first coined the term ‘pseudo-science’ in 1843, he was referring to phrenology.

- Yeates (2018), p.42.

- Still A, Dryden W (2004). "The Social Psychology of "Pseudoscience": A Brief History". J Theory Soc Behav. 34 (3): 265–90. doi:10.1111/j.0021-8308.2004.00248.x.

- Bowler J (2003). Evolution: The History of an Idea (3rd ed.). University of California Press. p. 128. ISBN 978-0-520-23693-6.

- e.g. Gauch (2003), pp. 3–5 ff

- Gauch (2003), pp. 191 ff, especially Chapter 6, "Probability", and Chapter 7, "Inductive Logic and Statistics"

- Popper K (1959). The Logic of Scientific Discovery. Routledge. ISBN 978-0-415-27844-7. The German version is currently in print by Mohr Siebeck (ISBN 3-16-148410-X).

- Popper (1963), pp. 43–86

- Sagan (1994), p. 171

- Casti JL (1990). Paradigms lost: tackling the unanswered mysteries of modern science (1st ed.). New York: Avon Books. pp. 51–52. ISBN 978-0-380-71165-9.

- Thagard (1978), pp. 223 ff

- Bunge (1983a)

- Novella, Steven (2018). The Skeptics' Guide to the Universe: How to Know What's Really Real in a World Increasingly Full of Fake. Grand Central Publishing. p. 165.

- Feyerabend, Paul (1975). "Table of contents and final chapter". Against Method: Outline of an Anarchistic Theory of Knowledge. ISBN 978-0-86091-646-8.

- Gauch (2003), p. 88

- Thagard (1978), pp. 227–228

- Laudan L (1996). "The demise of the demarcation problem". In Ruse M (ed.). But Is It Science?: The Philosophical Question in the Creation/Evolution Controversy. pp. 337–350.

- McNally RJ (2003). "Is the pseudoscience concept useful for clinical psychology?". The Scientific Review of Mental Health Practice. 2 (2). Archived from the original on 30 April 2010.

- Funtowicz S, Ravetz J (1990). Uncertainty and Quality in Science for Policy. Dordrecht: Kluwer Academic Publishers.

- "Pseudoscientific". Oxford American Dictionary. Oxford English Dictionary.

Pseudoscientific – pretending to be scientific, falsely represented as being scientific

- "Pseudoscience". The Skeptic's Dictionary. Archived from the original on 1 February 2009.

- Kåre Letrud, "The Gordian Knot of Demarcation: Tying Up Some Loose Ends" International Studies in the Philosophy of Science 32 (1):3–11 (2019)

- Popper, Karl R. (Karl Raimund) (2002). Conjectures and refutations : the growth of scientific knowledge. London: Routledge. pp. 33–39. ISBN 0415285933. OCLC 49593492.

- Greener M (December 2007). "Taking on creationism. Which arguments and evidence counter pseudoscience?". EMBO Reports. 8 (12): 1107–09. doi:10.1038/sj.embor.7401131. PMC 2267227. PMID 18059309.

- Bunge 1983b.

- e.g. Gauch (2003), pp. 211 ff (Probability, "Common Blunders").

- Popper K (1963). Conjectures and Refutations (PDF). Archived (PDF) from the original on 13 October 2017.

- Churchland PM (1999). Matter and Consciousness: A Contemporary Introduction to the Philosophy of Mind. MIT Press. p. 90. ISBN 978-0262530743.

- Gauch (2003), pp. 269 ff, "Parsimony and Efficiency"

- Hines T (1988). Pseudoscience and the Paranormal: A Critical Examination of the Evidence. Buffalo, NY: Prometheus Books. ISBN 978-0-87975-419-8.

- Donald E. Simanek. "What is science? What is pseudoscience?". Archived from the original on 25 April 2009.

- Lakatos I (1970). "Falsification and the Methodology of Scientific Research Programmes". In Lakatos I, Musgrave A (eds.). Criticism and the Growth of Knowledge. pp. 91–195.

- e.g. Gauch (2003), pp. 178 ff (Deductive Logic, "Fallacies"), and at 211 ff (Probability, "Common Blunders")

- Macmillan Encyclopedia of Philosophy Vol. 3, "Fallacies" 174 ff, esp. section on "Ignoratio elenchi"

- Macmillan Encyclopedia of Philosophy Vol 3, "Fallacies" 174 ff esp. 177–178

- Bunge (1983), p. 381

- Eileen Gambrill (1 May 2012). Critical Thinking in Clinical Practice: Improving the Quality of Judgments and Decisions (3rd ed.). John Wiley & Sons. p. 109. ISBN 978-0-470-90438-1.

- Lilienfeld SO (2004). Science and Pseudoscience in Clinical Psychology. Guilford Press. ISBN 1-59385-070-0.

- Ruscio (2002)

- Gitanjali B (2001). "Peer review – process, perspectives and the path ahead" (PDF). Journal of Postgraduate Medicine. 47 (3): 210–14. PMID 11832629. Archived from the original (PDF) on 23 June 2006.

- Gauch (2003), pp. 124 ff

- Sagan (1994), p. 210

- Ruscio (2002), p. 120

- Devilly (2005)

- National Science Board (May 2004), "Chapter 7 Science and Technology: Public Attitudes and Understanding: Public Knowledge About S&T", Science and Engineering Indicators 2004, Arlington, VA: National Science Foundation, archived from the original on 28 June 2015, retrieved 28 August 2013

- Stone S, Ng A. "American adults flunk basic science: National survey shows only one-in-five adults can answer three science questions correctly" (Press release). California Academy of Sciences. Archived from the original on 18 October 2013.

- Raloff J (21 February 2010). "Science literacy: U.S. college courses really count". Science News. Society for Science & the Public. Archived from the original on 13 October 2017. Retrieved 13 October 2017.

- Oswald T (15 November 2007). "MSU prof: Lack of science knowledge hurting democratic process". MSUToday. Michigan State University. Archived from the original on 11 September 2013. Retrieved 28 August 2013.

- Hobson A (2011). "Teaching relevant science for scientific literacy" (PDF). Journal of College Science Teaching. Archived from the original (PDF) on 24 August 2011.

- Impey C, Buxner S, et al. (2011). "A twenty-year survey of science literacy among college undergraduates" (PDF). Journal of College Science Teaching. 40 (1): 31–37.

- Sagan (1994), pp. 1–22

- National Science Board (2006), Figure 7-8 – Belief in paranormal phenomena: 1990, 2001, and 2005. "Figure 7-8". Archived from the original on 17 June 2016. Retrieved 20 April 2010.

David W. Moore (16 June 2005). "Three in Four Americans Believe in Paranormal". Archived from the original on 22 August 2010.

- National Science Board (February 2006), "Chapter 7: Science and Technology Public Attitudes and Understanding: Public Knowledge About S&T", Science and Engineering Indicators 2006, Arlington, VA: National Science Foundation, Footnote 29, archived from the original on 28 June 2015

- National Science Board (February 2006). Science and Engineering Indicators 2006. Volume 1. Arlington, VA: National Science Foundation.

- National Science Board (February 2006). "Appendix table 7-16: Attitudes toward science and technology, by country/region: Most recent year". Science and Engineering Indicators 2006. Volume 2: Appendix Tables. Arlington, VA: National Science Foundation. pp. A7–17.

- FOX News (18 June 2004). "Poll: More Believe In God Than Heaven". Fox News Channel. Archived from the original on 5 March 2009. Retrieved 26 April 2009.

- Taylor H (26 February 2003). "Harris Poll: The Religious and Other Beliefs of Americans 2003". Archived from the original on 11 January 2007. Retrieved 26 April 2009.

- Singer B, Benassi VA (January–February 1981). "Occult beliefs: Media distortions, social uncertainty, and deficiencies of human reasoning seem to be at the basis of occult beliefs". American Scientist. Vol. 69 no. 1. pp. 49–55. JSTOR 27850247.

- Eve RA, Dunn D (January 1990). "Psychic powers, astrology & creationism in the classroom? Evidence of pseudoscientific beliefs among high school biology & life science teachers" (PDF). The American Biology Teacher. Vol. 52 no. 1. pp. 10–21. doi:10.2307/4449018. JSTOR 4449018. Archived (PDF) from the original on 13 October 2017.

- Devilly (2005), p. 439

- Beyerstein B, Hadaway P (1991). "On avoiding folly". Journal of Drug Issues. 20 (4): 689–700. doi:10.1177/002204269002000418.

- Shermer M (July 2011). "Understanding the believing brain: Why science is the only way out of belief-dependent realism". Scientific American. doi:10.1038/scientificamerican0711-85. Archived from the original on 30 August 2016. Retrieved 14 August 2016.

- Lindeman M (December 1998). "Motivation, cognition and pseudoscience". Scandinavian Journal of Psychology. 39 (4): 257–65. doi:10.1111/1467-9450.00085. PMID 9883101.

- Matute H, Blanco F, Yarritu I, Díaz-Lago M, Vadillo MA, Barberia I (2015). "Illusions of causality: how they bias our everyday thinking and how they could be reduced". Frontiers in Psychology. 6: 888. doi:10.3389/fpsyg.2015.00888. PMC 4488611. PMID 26191014.

- Lack C (10 October 2013). "What does Scientific Literacy look like in the 21st Century?". Great Plains Skeptic. Skeptic Ink Network. Archived from the original on 13 April 2014. Retrieved 9 April 2014.

- Shermer M, Gould SJ (2002). Why People Believe Weird Things: Pseudoscience, Superstition, and Other Confusions of Our Time. New York: Holt Paperbacks. ISBN 978-0-8050-7089-7.

- Matute H, Yarritu I, Vadillo MA (August 2011). "Illusions of causality at the heart of pseudoscience". British Journal of Psychology. 102 (3): 392–405. CiteSeerX 10.1.1.298.3070. doi:10.1348/000712610X532210. PMID 21751996.

- Hansson, Sven Ove (2017). Zalta, Edward N. (ed.). The Stanford Encyclopedia of Philosophy (Summer 2017 ed.). Metaphysics Research Lab, Stanford University.

- Bunge, Mario Augusto (1998). Philosophy of Science: From Problem to Theory. Transaction Publishers. p. 24. ISBN 978-0-7658-0413-6.

- Gould SJ (March 1997). "Nonoverlapping magisteria". Natural History. No. 106. pp. 16–22. Archived from the original on 4 January 2017.

- Sager (2008), p. 10

- "Royal Society statement on evolution, creationism and intelligent design" (Press release). London: Royal Society. 11 April 2006. Archived from the original on 13 October 2007.

- Pendle G. "Popular Science Feature – When Science Fiction is Science Fact". Archived from the original on 14 February 2006.

- Thagard (1978)

- Bird A (2008). "The Historical Turn in the Philosophy of Science" (PDF). In Psillos S, Curd M (eds.). Routledge Companion to the Philosophy of Science. Abingdon: Routledge. pp. 9, 14. Archived (PDF) from the original on 1 June 2013.

- Gauch (2003), pp. 3–7

- Gordin MD (2015). "That a clear line of demarcation has separated science from pseudoscience". In Numbers RL, Kampourakis K (eds.). Newton's Apple and Other Myths about Science. Harvard University Press. pp. 219–25. ISBN 978-0674915473.

- Newbold D, Roberts J (December 2007). "An analysis of the demarcation problem in science and its application to therapeutic touch theory". International Journal of Nursing Practice. 13 (6): 324–30. doi:10.1111/j.1440-172X.2007.00646.x. PMID 18021160.

- Laudan L (1983). "The Demise of the Demarcation Problem". In Cohen RS, Laudan L (eds.). Physics, Philosophy and Psychoanalysis: Essays in Honor of Adolf Grünbaum. Boston Studies in the Philosophy of Science. 76. Dordrecht: D. Reidel. pp. 111–27. ISBN 978-90-277-1533-3.

- Makgoba MW (May 2002). "Politics, the media and science in HIV/AIDS: the peril of pseudoscience". Vaccine. 20 (15): 1899–904. doi:10.1016/S0264-410X(02)00063-4. PMID 11983241.

- Efthimiou (2006), p. 4 – Efthimiou quoting Friedman: "We could dignify pseudoscience by mentioning it at all".

- Efthimiou (2006), p. 4 – Efthimiou quoting Park: "The more serious threat is to the public, which is not often in a position to judge which claims are real and which are voodoo. ... Those who are fortunate enough to have chosen science as a career have an obligation to inform the public about voodoo science".

- The National Council Against Health Fraud (1994). "NCAHF Position Paper on Homeopathy".

- LeVine M (8 December 2016). "What scientists can teach us about fake news and disinformation". Business Insider. Archived from the original on 10 December 2016. Retrieved 15 December 2016.

- Kaufman, Allison; Kaufman, James (2017). Pseudoscience: The Conspiracy Against Science. Cambridge, MA: MIT Press. p. 239. ISBN 978-0262037426.

- Lack, Caleb; Rousseau, Jacques (2016). Critical Thinking, Science, and Pseudoscience: Why We Can't Trust Our Brains. New York: Springer Publishing Company, LLC. p. 221. ISBN 978-0826194190.

- Lilienfeld, Scott; Lynn, Steven Jay; Lohr, Jeffrey (2014). Science and Pseudoscience in Clinical Psychology, Second Edition. New York: Guilford Publications. p. 435. ISBN 978-1462517893.

Bibliography

- Abbott, K. (2012, October 30). The Fox Sisters and the Rap on Spiritualism. Smithsonian.com.

- Andrews JP, Henry R (1796). History of Great Britain, from the death of Henry VIII to the accession of James VI of Scotland to the crown of England. II. London: T. Cadell and W. Davies.

- Blum, J.M. (1978). Pseudoscience and Mental Ability: The Origins and Fallacies of the IQ Controversy. New York, NY: Monthly Review Press.

- Bunge M (1983a). "Demarcating science from pseudoscience". Fundamenta Scientiae. 3: 369–88.

- Bunge, Mario (1983b). Treatise on Basic Philosophy: Volume 6: Epistemology & Methodology II: Understanding the World. Treatise on Basic Philosophy. Springer Netherlands. pp. 223–228. ISBN 978-90-277-1634-7.

- Devilly GJ (June 2005). "Power therapies and possible threats to the science of psychology and psychiatry". The Australian and New Zealand Journal of Psychiatry. 39 (6): 437–45. doi:10.1080/j.1440-1614.2005.01601.x. PMID 15943644.

- Gauch HG (2003). Scientific Method in Practice. Cambridge University Press. ISBN 978-0521017084. LCCN 2002022271.

- arXiv:physics/0608061.

- Moll, A. (1902). Christian Science, Medicine, and Occultism. London: Rebman, Limited.

- Ruscio, John (2002). Clear thinking with psychology : separating sense from nonsense. Belmont, CA: Brooks/Cole-Thomson Learning. ISBN 978-0-534-53659-6. OCLC 47013264.

- Sager C, ed. (2008). "Voices for evolution" (PDF). National Center for Science Education. Retrieved 21 May 2010.

- Sagan C (1994). The demon-haunted world. New York: Ballantine Books. ISBN 978-0-345-40946-1.

- Shermer M (1997). Why people believe weird things: pseudoscience, superstition, and other confusions of our time. New York: W. H. Freeman and Company. ISBN 978-0-7167-3090-3.

- Thagard, Paul R. (1978). "Why astrology is a pseudoscience". PSA: Proceedings of the Biennial Meeting of the Philosophy of Science Association. 1978 (1): 223–234. doi:10.1086/psaprocbienmeetp.1978.1.192639. ISSN 0270-8647.

- Yeates, L.B. (2018), "James Braid (II): Mesmerism, Braid’s Crucial Experiment, and Braid’s Discovery of Neuro-Hypnotism", Australian Journal of Clinical Hypnotherapy & Hypnosis, Vol.40, No.1, (Autumn 2018), pp. 40–92.

Further reading

- Pigliucci M, Boudry M (2013). Philosophy of Pseudoscience: Reconsidering the Demarcation Problem. Chicago: University of Chicago Press. ISBN 978-0-226-05196-3.

- Shermer M (September 2011). "What Is Pseudoscience?: Distinguishing between science and pseudoscience is problematic". Scientific American. 305 (3): 92. doi:10.1038/scientificamerican0911-92. PMID 21870452.

- Hansson SO (3 September 2008). "Science and Pseudo-Science". Stanford Encyclopedia of Philosophy. Metaphysics Research Lab, Stanford University.

- Shermer M, Gould SJ (2002). Why People Believe Weird Things: Pseudoscience, Superstition, and Other Confusions of Our Time. New York: Holt Paperbacks. ISBN 978-0-8050-7089-7.

- Derksen AA (2001). "The seven strategies of the sophisticated pseudo-scientist: a look into Freud's rhetorical toolbox". J Gen Phil Sci. 32 (2): 329–50. doi:10.1023/A:1013100717113.

- Wilson F. (2000). The Logic and Methodology of Science and Pseudoscience. Canadian Scholars Press. ISBN 978-1-55130-175-4.

- Bauer HH (2000). Science or Pseudoscience: Magnetic Healing, Psychic Phenomena, and Other Heterodoxies. University of Illinois Press. ISBN 978-0-252-02601-0.

- Charpak G, Broch H (2004). Debunked: ESP, telekinesis, other pseudoscience (in French). Translated by Holland BK. Johns Hopkins University Press. ISBN 978-0-8018-7867-1.

- Cioffi F (1998). Freud and the Question of Pseudoscience. Chicago and La Salle, Illinois: Open Court, division of Carus. pp. 314. ISBN 978-0-8126-9385-0.

- Hansson SO (1996). "Defining pseudoscience". Philosophia Naturalis. 33: 169–76.

- Pratkanis AR (July–August 1995). "How to Sell a Pseudoscience". Skeptical Inquirer. 19 (4): 19–25. Archived from the original on 11 December 2006.

- Wolpert, Lewis (1994). The Unnatural Nature of Science. Lancet. 341. Harvard University Press. p. 310. doi:10.1016/0140-6736(93)92665-g. ISBN 978-0-6749-2981-4. PMID 8093949. First published 1992 by Faber & Faber, London.

- Martin M (1994). "Pseudoscience, the paranormal, and science education". Science & Education. 3 (4): 357–71. Bibcode:1994Sc&Ed...3..357M. doi:10.1007/BF00488452.

- Derksen AA (1993). "The seven sins of pseudo-science". J Gen Phil Sci. 24: 17–42. doi:10.1007/BF00769513.

- Gardner M (1990). Science – Good, Bad and Bogus. Prometheus Books. ISBN 978-0-87975-573-7.

- Little, John (29 October 1981). "Review and useful overview of Gardner's book". New Scientist. 92 (1277): 320.

- Gardner M (1957). Fads and Fallacies in the Name of Science (2nd, revised & expanded ed.). Mineola, NY: Dover Publications. ISBN 978-0-486-20394-2. Originally published 1952 by G.P. Putnam's Sons, under the title In the Name of Science.

- Gardner M (2000). Did Adam and Eve Have Navels?: Debunking Pseudoscience. New York: W.W. Norton & Company. ISBN 978-0-393-32238-5.

External links

- Skeptic Dictionary: Pseudoscience – Robert Todd Carroll, PhD

- Distinguishing Science from Pseudoscience – Rory Coker, PhD

- Pseudoscience. What is it? How can I recognize it? – Stephen Lower

- Science and Pseudoscience – transcript and broadcast of talk by Imre Lakatos

- Science, Pseudoscience, and Irrationalism – Steven Dutch

- Skeptic Dictionary: Pseudoscientific topics and discussion – Robert Todd Carroll

- Why Is Pseudoscience Dangerous? – Edward Kruglyakov

- "Why garbage science gets published". Adam Marcus, Ivan Oransky. Nautilus. 2017.

- Michael Shermer: Baloney Detection Kit on YouTube – 10 questions to challenge false claims and uncover the truth.