Emotion recognition in conversation

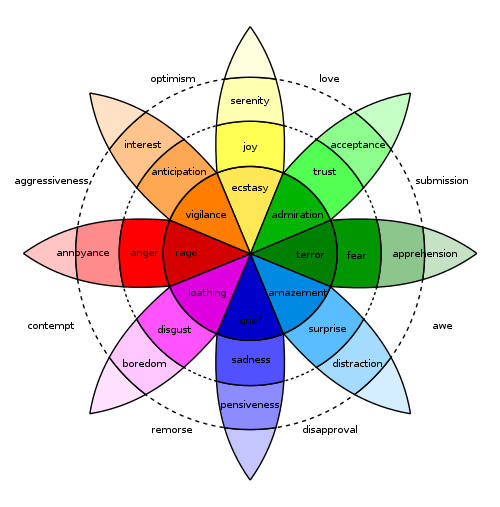

Emotion recognition in conversation (ERC, also called emotion recognition in dialogues) is a sub-field of emotion recognition that focuses on mining human emotions from conversations or dialogues between two or more interlocutors.[1] Datasets in this field are usually derived from social platforms that offer a large number of freely available samples, often containing multimodal data (i.e., some combination of textual, visual, and acoustic data).[2] Self- and inter-personal influences play a critical role[3] in identifying basic emotions such as fear, anger, joy, and surprise. The more fine-grained the emotion labels are, the harder it is to detect the correct emotion. ERC poses a number of challenges,[1] such as conversational-context modeling, speaker-state modeling, the presence of sarcasm in conversation, and emotion shift across consecutive utterances of the same interlocutor.
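As a toy illustration of the last challenge, an emotion shift can be flagged whenever a speaker's annotated emotion differs from that same speaker's previous utterance. This sketch assumes a hypothetical `Utterance` record with `speaker`, `text`, and `emotion` fields and an invented five-turn dialogue; it only locates shifts in already-labelled data and does not itself recognize emotions.

```python
from typing import Dict, List, NamedTuple


class Utterance(NamedTuple):
    # Hypothetical record used only for this illustration.
    speaker: str   # interlocutor who produced the utterance
    text: str      # transcript of the utterance
    emotion: str   # annotated emotion label, e.g. "joy" or "anger"


def emotion_shifts(dialogue: List[Utterance]) -> List[int]:
    """Return indices of utterances whose emotion differs from the same
    speaker's previous utterance (an "emotion shift")."""
    last_emotion: Dict[str, str] = {}  # speaker -> label of their latest turn
    shifts: List[int] = []
    for i, utt in enumerate(dialogue):
        previous = last_emotion.get(utt.speaker)
        if previous is not None and previous != utt.emotion:
            shifts.append(i)
        last_emotion[utt.speaker] = utt.emotion
    return shifts


# Invented example dialogue with made-up labels.
dialogue = [
    Utterance("A", "I got the job!", "joy"),
    Utterance("B", "Wait, the one in Paris?", "surprise"),
    Utterance("A", "Yes, but I have to move next week.", "fear"),
    Utterance("B", "That's wonderful news!", "joy"),
    Utterance("A", "I suppose it is.", "fear"),
]
print(emotion_shifts(dialogue))  # → [2, 3]
```

Turn 2 is a shift because speaker A moves from joy to fear, and turn 3 because B moves from surprise to joy; turn 4 is not, since A stays at fear. A model that assumes each speaker's emotion is stable across turns would tend to miss exactly these cases.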

The task

The task of ERC is to detect the emotion expressed by the speaker of each utterance in a conversation. ERC depends on three primary factors: the conversational context, the interlocutors' mental states, and their intent.[1]
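Framed as a classification problem, ERC assigns one label per utterance, and the model sees each utterance together with some of the turns that precede it. A minimal sketch of that framing follows, assuming a hypothetical `Utterance` record, a fixed-size window of preceding turns, and a speaker-tagged string as the model input; all three are illustrative choices, not a standard format.

```python
from typing import List, NamedTuple, Tuple


class Utterance(NamedTuple):
    # Hypothetical record used only for this illustration.
    speaker: str  # who produced the utterance
    text: str     # what was said


def context_windows(
    dialogue: List[Utterance], window: int = 2
) -> List[Tuple[List[Utterance], Utterance]]:
    """Pair every utterance with up to `window` utterances that precede it.

    One example is produced per utterance because ERC predicts a label for
    each utterance, not a single label for the whole conversation.
    """
    return [(dialogue[max(0, i - window):i], utt) for i, utt in enumerate(dialogue)]


def format_example(context: List[Utterance], target: Utterance) -> str:
    """Flatten one example into a speaker-tagged string; '>>' marks the
    utterance whose emotion is to be predicted."""
    turns = [f"[{u.speaker}] {u.text}" for u in context]
    turns.append(f">> [{target.speaker}] {target.text}")
    return " ".join(turns)


dialogue = [
    Utterance("A", "I got the job!"),
    Utterance("B", "Wait, the one in Paris?"),
    Utterance("A", "Yes, but I have to move next week."),
]
for context, target in context_windows(dialogue, window=1):
    print(format_example(context, target))
# prints:
# >> [A] I got the job!
# [A] I got the job! >> [B] Wait, the one in Paris?
# [B] Wait, the one in Paris? >> [A] Yes, but I have to move next week.
```

Only the conversational context and speaker identity are represented here; this sketch does not attempt to capture the interlocutors' mental state or intent.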

Datasets

IEMOCAP,[4] SEMAINE,[5] DailyDialog,[6] and MELD[7] are four widely used datasets in ERC. Of these four, only MELD contains multiparty dialogues.

Methods

Approaches to ERC include unsupervised, semi-supervised, and supervised[8] methods. Popular supervised methods include using or combining pre-defined features, recurrent neural networks[9] (e.g., DialogueRNN[10]), graph convolutional networks[11] (e.g., DialogueGCN[12]), and attention-gated hierarchical memory networks.[13] Most contemporary ERC methods are based on deep learning and rely on the idea of latent speaker-state modeling.
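The idea of latent speaker-state modeling can be sketched with one recurrent state per interlocutor that is updated only when that interlocutor speaks. This is a deliberately simplified stand-in rather than any of the cited models: a single tanh RNN cell replaces the gated recurrent units and the additional global and emotion states that DialogueRNN uses, the utterance feature vectors and weights are hand-picked toy values instead of learned parameters, and the helper names (`track_speaker_states`, `classify`) are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def update_state(state: Vector, utt: Vector, w_s: Matrix, w_u: Matrix) -> Vector:
    """Plain tanh RNN step: s_new = tanh(W_s @ s + W_u @ u)."""
    return [math.tanh(a + b) for a, b in zip(matvec(w_s, state), matvec(w_u, utt))]


def track_speaker_states(
    dialogue: List[Tuple[str, Vector]], w_s: Matrix, w_u: Matrix, dim: int
) -> List[Vector]:
    """Return the current speaker's updated state after each utterance.

    Each speaker has a separate latent state, updated only on that speaker's
    turns, so it summarizes what that speaker has said so far.
    """
    states: Dict[str, Vector] = {}
    outputs: List[Vector] = []
    for speaker, features in dialogue:
        prev = states.get(speaker, [0.0] * dim)  # unseen speakers start at zero
        states[speaker] = update_state(prev, features, w_s, w_u)
        outputs.append(states[speaker])
    return outputs


def classify(state: Vector, w_out: Matrix, labels: List[str]) -> str:
    """Score a speaker state linearly, then pick the highest-scoring label."""
    scores = matvec(w_out, state)
    return labels[max(range(len(labels)), key=scores.__getitem__)]


# Toy 2-dimensional example with hand-picked (not learned) weights.
w_s = [[0.5, 0.0], [0.0, 0.5]]    # how much of the previous state is kept
w_u = [[1.0, 0.0], [0.0, 1.0]]    # how utterance features enter the state
w_out = [[1.0, 0.0], [0.0, 1.0]]  # state -> label scores
labels = ["joy", "anger"]
dialogue = [("A", [1.0, 0.0]), ("B", [0.0, 1.0]), ("A", [1.0, 0.0])]

states = track_speaker_states(dialogue, w_s, w_u, dim=2)
print([classify(s, w_out, labels) for s in states])  # → ['joy', 'anger', 'joy']
```

Because A's state carries over from A's first turn, A's second state is larger than the first even though the two utterance vectors are identical; B's intervening turn leaves A's state untouched. In a trained model the weights would be learned from labelled dialogues and the utterance features would come from a text, audio, or visual encoder.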

References

- Poria, Soujanya; Majumder, Navonil; Mihalcea, Rada; Hovy, Eduard (2019). "Emotion Recognition in Conversation: Research Challenges, Datasets, and Recent Advances". IEEE Access. 7: 100943–100953. arXiv:1905.02947. Bibcode:2019arXiv190502947P. doi:10.1109/ACCESS.2019.2929050.

- Lee, Chul Min; Narayanan, Shrikanth (March 2005). "Toward Detecting Emotions in Spoken Dialogs". IEEE Transactions on Speech and Audio Processing. 13 (2): 293–303. doi:10.1109/TSA.2004.838534.

- Hazarika, Devamanyu; Poria, Soujanya; Zimmermann, Roger; Mihalcea, Rada (Oct 2019). "Emotion Recognition in Conversations with Transfer Learning from Generative Conversation Modeling". arXiv:1910.04980 [cs.CL].

- Busso, Carlos; Bulut, Murtaza; Lee, Chi-Chun; Kazemzadeh, Abe; Mower, Emily; Kim, Samuel; Chang, Jeannette N.; Lee, Sungbok; Narayanan, Shrikanth S. (2008-11-05). "IEMOCAP: interactive emotional dyadic motion capture database". Language Resources and Evaluation. 42 (4): 335–359. doi:10.1007/s10579-008-9076-6. ISSN 1574-020X.

- McKeown, G.; Valstar, M.; Cowie, R.; Pantic, M.; Schröder, M. (2012-01-02). "The SEMAINE Database: Annotated Multimodal Records of Emotionally Colored Conversations between a Person and a Limited Agent". IEEE Transactions on Affective Computing. 3 (1): 5–17. doi:10.1109/t-affc.2011.20. ISSN 1949-3045.

- Li, Yanran; Su, Hui; Shen, Xiaoyu; Li, Wenjie; Cao, Ziqiang; Niu, Shuzi (2017). "DailyDialog: A Manually Labelled Multi-turn Dialogue Dataset". Proceedings of the Eighth International Joint Conference on Natural Language Processing (Volume 1: Long Papers): 986–995.

- Poria, Soujanya; Hazarika, Devamanyu; Majumder, Navonil; Naik, Gautam; Cambria, Erik; Mihalcea, Rada (2019). "MELD: A Multimodal Multi-Party Dataset for Emotion Recognition in Conversations". Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics. Stroudsburg, PA, USA: Association for Computational Linguistics: 527–536. arXiv:1810.02508. doi:10.18653/v1/p19-1050.

- Abdelwahab, Mohammed; Busso, Carlos (2015). "Supervised domain adaptation for emotion recognition from speech". 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP): 5058–5062. doi:10.1109/ICASSP.2015.7178934. ISBN 978-1-4673-6997-8.

- Chernykh, Vladimir; Prikhodko, Pavel; King, Irwin (Jul 2019). "Emotion Recognition From Speech With Recurrent Neural Networks". arXiv:1701.08071 [cs.CL].

- Majumder, Navonil; Poria, Soujanya; Hazarika, Devamanyu; Mihalcea, Rada; Gelbukh, Alexander; Cambria, Erik (2019-07-17). "DialogueRNN: An Attentive RNN for Emotion Detection in Conversations". Proceedings of the AAAI Conference on Artificial Intelligence. 33: 6818–6825. doi:10.1609/aaai.v33i01.33016818. ISSN 2374-3468.

- "Graph Convolutional Networks are Bringing Emotion Recognition Closer to Machines. Here's how". Tech Times. 2019-11-26. Retrieved February 25, 2020.

- Ghosal, Deepanway; Majumder, Navonil; Poria, Soujanya (Aug 2019). "DialogueGCN: A Graph Convolutional Neural Network for Emotion Recognition in Conversation". Conference on Empirical Methods in Natural Language Processing (EMNLP).

- Jiao, Wenxiang; Lyu, Michael R.; King, Irwin (November 2019). "Real-Time Emotion Recognition via Attention Gated Hierarchical Memory Network". arXiv:1911.09075 [cs.CL].