VMware memory management seems to be a tricky balancing act. With cluster RAM, Resource Pools, VMware's management techniques (TPS, ballooning, host swapping), in-guest RAM utilization, swapping, reservations, shares and limits, there are a lot of variables.

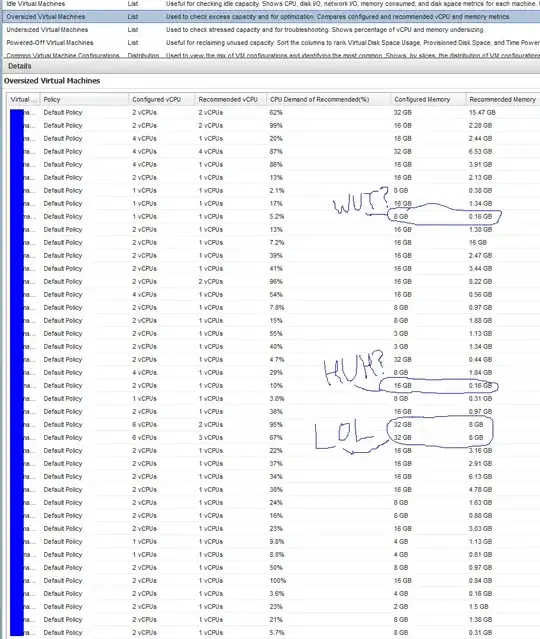

I'm in a situation where clients are using dedicated vSphere cluster resources. However, they are configuring the virtual machines as though they were on physical hardware. In turn, this means a standard VM build may have 4 vCPUs and 16GB or more of RAM. I come from the school of starting small (1 vCPU, minimal RAM), checking real-world use and adjusting up as necessary. Unfortunately, many vendor requirements and people unfamiliar with virtualization request more resources than necessary... I'm interested in quantifying the impact of this decision.

Some examples from a "problem" cluster.

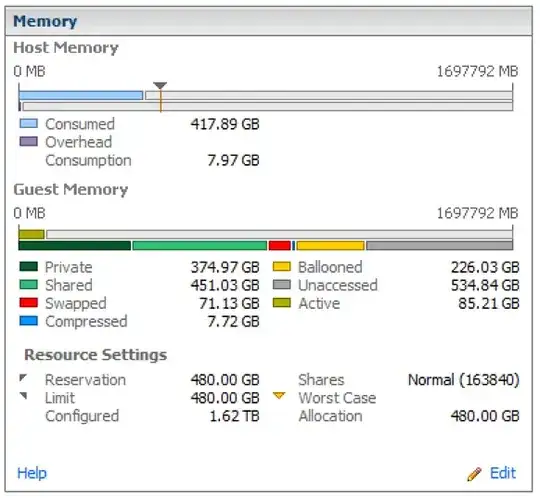

Resource pool summary - Looks almost 4:1 overcommitted. Note the high amount of ballooned RAM.

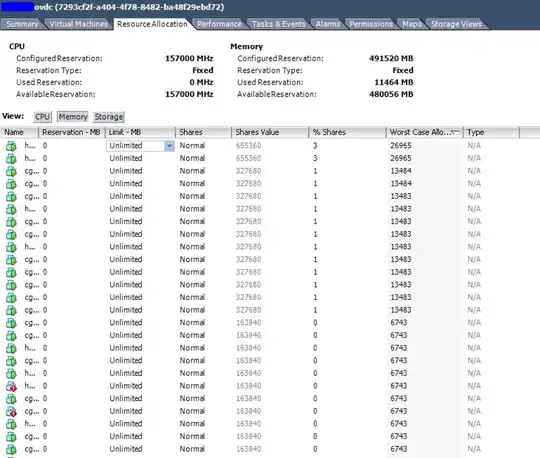

Resource allocation - The Worst Case Allocation column shows that these VMs would have access to less than 50% of their configured RAM under constrained conditions.

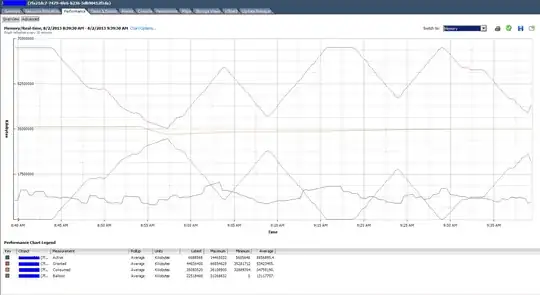

The real-time memory utilization graph of the top VM in the listing above. 4 vCPU and 64GB RAM allocated. It averages under 9GB use.

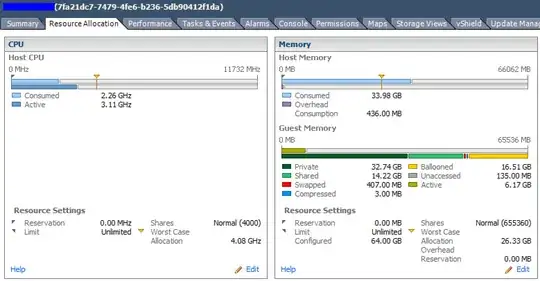

Summary of the same VM

What are the downsides of overcommitting and overconfiguring resources (specifically RAM) in vSphere environments?

Assuming that the VMs can run in less RAM, is it fair to say that there's overhead to configuring virtual machines with more RAM than they actually need?

What is the counter-argument to: "if a VM has 16GB of RAM allocated, but only uses 4GB, what's the problem??"? E.g. do customers need to be educated that VMs are not the same as physical hardware?

What specific metric(s) should be used to meter RAM usage. Tracking the peaks of "Active" versus time? Watching "Consumed"?

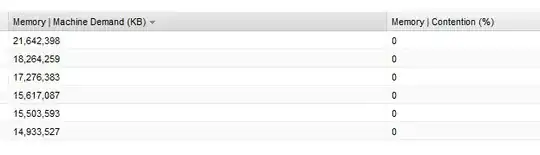

Update: I used vCenter Operations Manager to profile this environment and get some detail on the cluster stats listed above. While things are definitely overcommitted, the VMs are actually so overconfigured with unnecessary RAM that the real (tiny) memory footprint shows no memory contention at the cluster/host level...

My takeaway is that VMs should really be right-sized with a little bit of buffer for OS-level caching. Overcommitting out of ignorance or vendor "requirements" leads to the situation presented here. Memory ballooning seems to be bad in every case, as there is a performance impact, so right-sizing can help prevent this.

Update 2: Some of these VMs are beginning to crash with:

kernel:BUG: soft lockup - CPU#1 stuck for 71s!

VMware describes this as a symptom of heavy memory overcommitment. So I guess that answers the question.

vCops "Oversized Virtual Machines" report...

vCops "Reclaimable Waste" graph...