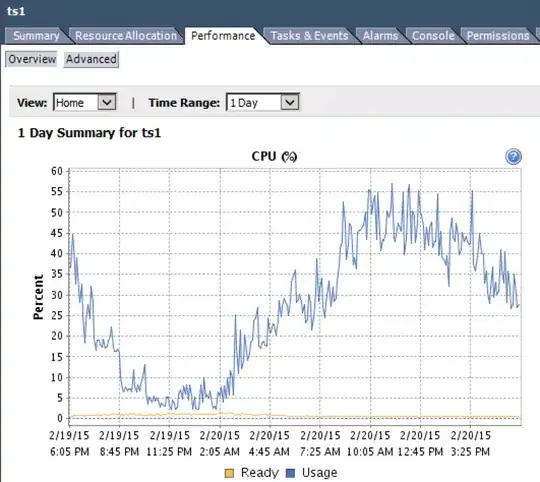

For a while now I've been trying to figure out why quite a few of our business-critical systems are getting reports of "slowness" ranging from mild to extreme. I've recently turned my eye to the VMware environment where all the servers in question are hosted.

I recently downloaded and installed the trial of the Veeam VMware management pack for SCOM 2012, but I'm having a hard time believing the numbers it is reporting (and so is my boss). To convince my boss that the numbers are accurate, I started looking at the VMware client itself to verify the results.

I've looked at this VMware KB article, specifically at the definition of Co-Stop, which is given as:

> Amount of time a MP virtual machine was ready to run, but incurred delay due to co-vCPU scheduling contention

Which I am translating to:

> The guest OS needs time from the host but has to wait for resources to become available and therefore can be considered "unresponsive"

Does this translation seem correct?

If so, here is where I have a hard time believing what I am seeing: the host that contains the majority of the "slow" VMs is currently showing a CPU Co-Stop average of 127,835.94 milliseconds!

Does this mean that, on average, the VMs on this host have to wait 2+ minutes for CPU time?
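To sanity-check the arithmetic, here is a minimal sketch. The first helper just converts the reported milliseconds to minutes. The second applies the usual conversion for vSphere summation counters (value divided by the sampling interval), which only matters if the reported figure is a summation over a chart interval rather than a per-wait latency. That is an assumption on my part, as are the interval lengths listed (20 s for real-time charts, 300 s for the past-day view); the function names are made up for illustration.

```python
# Reported Co-Stop figure from the monitoring tool, in milliseconds.
COSTOP_MS = 127_835.94


def ms_to_minutes(ms: float) -> float:
    """Convert milliseconds to minutes."""
    return ms / 60_000.0


def summation_to_percent(summation_ms: float, interval_s: float) -> float:
    """Express a summation counter as a percentage of its sampling interval.

    Assumes the value was accumulated over one interval of `interval_s`
    seconds. Values above 100% can occur when the counter is summed
    across several vCPUs or VMs.
    """
    return summation_ms / (interval_s * 1000.0) * 100.0


if __name__ == "__main__":
    print(f"{ms_to_minutes(COSTOP_MS):.2f} minutes")
    # Assumed candidate intervals; which one applies depends on the chart or rollup.
    for label, interval_s in (("real-time", 20), ("past day", 300)):
        pct = summation_to_percent(COSTOP_MS, interval_s)
        print(f"{label} ({interval_s} s interval): {pct:.2f}%")
```

If the number is a summation, the percentage is the more meaningful figure, and it depends entirely on which interval the tool used.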

This host has two 4-core CPUs, and it runs one 8-vCPU guest and fourteen 4-vCPU guests.
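For reference, the vCPU-to-physical-core ratio implied by those numbers can be worked out as below. This is only a rough sketch: it assumes all fifteen guests are powered on and counts physical cores only (no hyperthreading), neither of which I have confirmed.

```python
# Host: two sockets with four cores each (hyperthreading not counted; assumption).
physical_cores = 2 * 4

# Guests: one VM with 8 vCPUs and fourteen VMs with 4 vCPUs each.
total_vcpus = 1 * 8 + 14 * 4

# Number of vCPUs competing for each physical core.
overcommit_ratio = total_vcpus / physical_cores

print(f"{total_vcpus} vCPUs on {physical_cores} cores = {overcommit_ratio:.0f}:1")
```

Note that the 8-vCPU guest is as wide as the entire host, so the scheduler has little room to place all of its vCPUs at once while the other guests are busy.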