Workstation

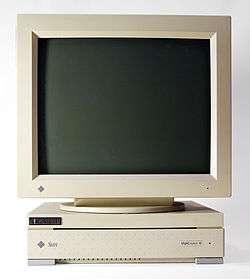

A workstation is a special computer designed for technical or scientific applications. Intended primarily to be used by one person at a time, workstations are commonly connected to a local area network and run multi-user operating systems. The term workstation has also been used loosely to refer to everything from a mainframe computer terminal to a PC connected to a network, but the most common form refers to the class of hardware offered by several current and defunct companies such as Sun Microsystems, Silicon Graphics, Apollo Computer, DEC, HP, NeXT and IBM, which opened the door for the 3D graphics animation revolution of the late 1990s.

Workstations offer higher performance than mainstream personal computers, especially with respect to CPU and graphics, memory capacity, and multitasking capability. Workstations are optimized for the visualization and manipulation of different types of complex data such as 3D mechanical design, engineering simulation (e.g., computational fluid dynamics), animation and rendering of images, and mathematical plots. Typically, the form factor is that of a desktop computer consisting of, at a minimum, a high-resolution display, a keyboard and a mouse, but workstations may also offer multiple displays, graphics tablets, 3D mice (devices for manipulating 3D objects and navigating scenes), etc. Workstations were the first segment of the computer market to present advanced accessories and collaboration tools.

The increasing capabilities of mainstream PCs in the late 1990s have blurred the lines between PCs and technical/scientific workstations. Typical workstations previously employed proprietary hardware which made them distinct from PCs; for instance IBM used RISC-based CPUs for its workstations and Intel x86 CPUs for its business/consumer PCs during the 1990s and 2000s. However, by the early 2000s this difference largely disappeared, as workstations now use highly commoditized hardware dominated by large PC vendors, such as Dell, Hewlett-Packard (later HP Inc.) and Fujitsu, selling Microsoft Windows or Linux systems running on x86-64 processors.

History

Origins and development

Perhaps the first computer that might qualify as a "workstation" was the IBM 1620, a small scientific computer designed to be used interactively by a single person sitting at the console. It was introduced in 1960. One peculiar feature of the machine was that it lacked any actual arithmetic circuitry. To perform addition, it required a memory-resident table of decimal addition rules. This saved on the cost of logic circuitry, enabling IBM to make it inexpensive. The machine was code-named CADET and rented initially for $1000 a month.
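The idea of adding by table lookup rather than with arithmetic circuitry can be illustrated with a minimal sketch. This is a simplified illustration, not a reproduction of the 1620's actual memory layout or instruction set: the names `ADD_TABLE` and `table_add` are hypothetical, and the table is built here with Python's own arithmetic purely for convenience, whereas on the real machine it had to be loaded into memory.

```python
# Illustrative only: a digit-pair lookup table standing in for the
# memory-resident table of decimal addition rules described above.
# Each entry maps (digit, digit) -> (sum digit, carry).
ADD_TABLE = {
    (a, b): ((a + b) % 10, (a + b) // 10)
    for a in range(10)
    for b in range(10)
}


def table_add(x: str, y: str) -> str:
    """Add two non-negative decimal strings using only table lookups per digit."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)

    carry = 0
    digits = []
    # Work from the least significant digit to the most significant one.
    for dx, dy in zip(reversed(x), reversed(y)):
        digit, carry_a = ADD_TABLE[(int(dx), int(dy))]
        # A second lookup folds in the carry from the previous position.
        digit, carry_b = ADD_TABLE[(digit, carry)]
        # The two carries can never both be set, so OR is sufficient.
        carry = carry_a | carry_b
        digits.append(str(digit))

    if carry:
        digits.append("1")
    return "".join(reversed(digits))


if __name__ == "__main__":
    print(table_add("1620", "1130"))
    print(table_add("999", "1"))
```

The loop itself performs no addition on the operands; every digit result and carry comes from the table, which is the trade-off the paragraph describes: less logic circuitry in exchange for memory space and lookup time.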

In 1965, IBM introduced the IBM 1130 scientific computer, which was meant as the successor to the 1620. Both of these systems came with the ability to run programs written in Fortran and other languages. Both the 1620 and the 1130 were built into roughly desk-sized cabinets. Both were available with add-on disk drives, printers, and both paper-tape and punched-card I/O. A console typewriter for direct interaction was standard on each.

Early examples of workstations were generally dedicated minicomputers: a system designed to support a number of users would instead be reserved exclusively for one person. A notable example was the PDP-8 from Digital Equipment Corporation, regarded as the first commercial minicomputer.

The Lisp machines developed at MIT in the early 1970s pioneered some of the principles of the workstation computer, as they were high-performance, networked, single-user systems intended for heavily interactive use. Lisp machines were commercialized beginning in 1980 by companies like Symbolics, Lisp Machines, Texas Instruments (the TI Explorer) and Xerox (the Interlisp-D workstations). The first computer designed for a single user, with high-resolution graphics facilities (and so a workstation in the modern sense of the term), was the Xerox Alto, developed at Xerox PARC in 1973. Other early workstations include the Terak 8510/a (1977), Three Rivers PERQ (1979) and the later Xerox Star (1981).

1980s rise in popularity

In the early 1980s, with the advent of 32-bit microprocessors such as the Motorola 68000, a number of new participants in this field appeared, including Apollo Computer and Sun Microsystems, who created Unix-based workstations based on this processor. Meanwhile, DARPA's VLSI Project created several spinoff graphics products as well, notably the SGI 3130, and Silicon Graphics' range of machines that followed. It was not uncommon to differentiate the target market for the products, with Sun and Apollo considered to be network workstations, while the SGI machines were graphics workstations. As RISC microprocessors became available in the mid-1980s, these were adopted by many workstation vendors.

Workstations tended to be very expensive, typically several times the cost of a standard PC and sometimes costing as much as a new car. However, minicomputers sometimes cost as much as a house. The high expense usually came from using costlier components that ran faster than those found at the local computer store, as well as the inclusion of features not found in PCs of the time, such as high-speed networking and sophisticated graphics. Workstation manufacturers also tend to take a "balanced" approach to system design, making certain to avoid bottlenecks so that data can flow unimpeded between the many different subsystems within a computer. Additionally, workstations, given their more specialized nature, tend to have higher profit margins than commodity-driven PCs.

The systems that come out of workstation companies often feature SCSI or Fibre Channel disk storage systems, high-end 3D accelerators, single or multiple 64-bit processors, large amounts of RAM, and well-designed cooling. Additionally, the companies that make the products tend to have comprehensive repair/replacement plans. As the distinction between workstation and PC fades, however, workstation manufacturers have increasingly employed "off the shelf" PC components and graphics solutions rather than proprietary hardware or software. Some "low-cost" workstations are still expensive by PC standards, but offer binary compatibility with higher-end workstations and servers made by the same vendor. This allows software development to take place on low-cost (relative to the server) desktop machines.

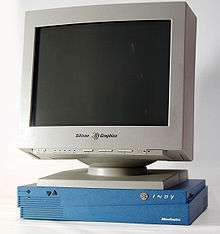

Graphics workstations

Graphics workstations (e.g. machines from Silicon Graphics) often shipped with graphics accelerators.

Thin clients and X terminals

There have been several attempts to produce a workstation-like machine specifically for the lowest possible price point as opposed to performance. One approach is to remove local storage and reduce the machine to the processor, keyboard, mouse and screen. In some cases, these diskless nodes would still run a traditional operating system and perform computations locally, with storage on a remote server. These approaches are intended not just to reduce the initial system purchase cost, but lower the total cost of ownership by reducing the amount of administration required per user.

This approach was actually first attempted as a replacement for PCs in office productivity applications, with the 3Station by 3Com as an early example; in the 1990s, X terminals filled a similar role for technical computing. Sun has also introduced "thin clients", most notably its Sun Ray product line. However, traditional workstations and PCs continue to drop in price, which tends to undercut the market for products of this type.

"3M computer"

In the early 1980s, a high-end workstation had to meet the three Ms. The so-called "3M computer" had a Megabyte of memory, a Megapixel display (roughly 1000×1000), and a "MegaFLOPS" compute performance (at least one million floating point operations per second).[a] As limited as this seems today, it was at least an order of magnitude beyond the capacity of the personal computer of the time; the original 1981 IBM Personal Computer had 16 KB memory, a text-only display, and floating-point performance around 1 kiloFLOPS (30 kiloFLOPS with the optional 8087 math coprocessor). Other desirable features not found in desktop computers at that time included networking, graphics acceleration, and high-speed internal and peripheral data buses.

Another goal was to bring the price for such a system down under a "Megapenny", that is, less than $10,000; this was not achieved until the late 1980s, although many workstations, particularly mid-range or high-end models, still cost anywhere from $15,000 to over $100,000 throughout the early to mid-1990s.
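The "3M" thresholds and the "Megapenny" price target amount to simple arithmetic, which the following sketch works through. The `Machine` class and the function names are hypothetical, introduced only for illustration. The IBM PC figures are the ones quoted above, with two assumptions: its text-only display is recorded as zero addressable pixels, and a megabyte is taken as 2^20 bytes.

```python
from dataclasses import dataclass

MEGA = 1_000_000


@dataclass
class Machine:
    name: str
    memory_bytes: int
    display_pixels: int
    flops: float


def meets_3m(m: Machine) -> bool:
    """True if the machine has a megabyte, a megapixel and a megaFLOPS."""
    return (
        m.memory_bytes >= 2**20       # assumption: 1 MB taken as 2**20 bytes
        and m.display_pixels >= MEGA  # "roughly 1000 x 1000"
        and m.flops >= MEGA
    )


def under_megapenny(price_usd: float) -> bool:
    """A megapenny is 1,000,000 cents, i.e. $10,000."""
    return price_usd < MEGA / 100


# Figures for the original 1981 IBM PC as quoted in the text; the
# text-only display is modelled as 0 pixels (an assumption).
ibm_pc = Machine("IBM PC (1981)", 16 * 1024, 0, 1_000)

# A machine that exactly meets the three thresholds.
baseline_3m = Machine("3M baseline", 2**20, 1000 * 1000, 1_000_000)

if __name__ == "__main__":
    print(meets_3m(ibm_pc), meets_3m(baseline_3m))
    # Memory gap between the 3M baseline and the IBM PC.
    print(baseline_3m.memory_bytes // ibm_pc.memory_bytes)
    # Floating-point gap without and with the 8087 coprocessor.
    print(baseline_3m.flops / 1_000, baseline_3m.flops / 30_000)
    print(under_megapenny(15_000))
```

Under these assumptions the memory gap is a factor of 64 and the floating-point gap is a factor of 1000 (about 33 with the 8087), consistent with the "at least an order of magnitude" description.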

Trends leading to decline

The more widespread adoption of these technologies into mainstream PCs was a direct factor in the decline of the workstation as a separate market segment:

- High-performance CPUs: while RISC in its early days (early 1980s) offered roughly an order-of-magnitude performance improvement over CISC processors of comparable cost, one particular family of CISC processors, Intel's x86, always had the edge in market share and the economies of scale that this implied. By the mid-1990s, some x86 CPUs had achieved performance on par with RISC in some areas, such as integer performance (albeit at the cost of greater chip complexity), relegating the latter to even more high-end markets for the most part.

- Hardware support for floating-point operations: optional on the original IBM PC; remained on a separate chip for Intel systems until the 80486DX processor. Even then, x86 floating-point performance continued to lag behind other processors due to limitations in its architecture. Today, even low-priced PCs have performance in the gigaFLOPS range.

- Large memory configurations: PCs (i.e. IBM-compatibles) were originally limited to a 640 KB memory capacity (not counting bank-switched "expanded memory") until the 1982 introduction of the 80286 processor; early workstations provided access to several megabytes of memory. Even after PCs broke the 640 KB limit with the 80286, special programming techniques were required to address significant amounts of memory until the 80386, as opposed to other 32-bit processors such as SPARC which provided straightforward access to nearly their entire 4 GB memory address range. 64-bit workstations and servers supporting an address range far beyond 4 GB have been available since the early 1990s, a technology just beginning to appear in the PC desktop and server market in the mid-2000s.

- Operating system: early workstations ran the Unix operating system (OS), a Unix-like variant, or an unrelated equivalent OS such as VMS. The PC CPUs of the time had limitations in memory capacity and memory access protection, making them unsuitable to run OSes of this sophistication, but this, too, began to change in the late 1980s as PCs with the 32-bit 80386 with integrated paged MMUs became widely affordable.

- High-speed networking (10 Mbit/s or better): 10 Mbit/s network interfaces were commonly available for PCs by the early 1990s, although by that time workstations were pursuing even higher networking speeds, moving to 100 Mbit/s, 1 Gbit/s, and 10 Gbit/s. However, economies of scale and the demand for high-speed networking in even non-technical areas have dramatically decreased the time it takes for newer networking technologies to reach commodity price points.

- Large displays (17- to 21-inch) with high resolutions and high refresh rate, which were rare among PCs in the late 1980s and early 1990s but became common among PCs by the late 1990s.

- High-performance 3D graphics hardware for computer-aided design (CAD) and computer-generated imagery (CGI) animation: this became increasingly popular in the PC market around the mid-to-late 1990s, mostly driven by computer gaming. For Nvidia, the integration of the transform and lighting hardware into the GPU itself set the GeForce 256 apart from older 3D accelerators that relied on the CPU to perform these calculations (also known as software transform and lighting). This reduction of 3D graphics solution complexity brought the cost of such hardware to a new low and made it accessible to cheap consumer graphics cards instead of being limited to the previous expensive, professionally oriented niche designed for CAD. NV10's T&L engine also allowed Nvidia to enter the CAD market for the first time, with the Quadro line that uses the same silicon chips as the GeForce cards, but has different driver support and certifications tailored to the unique requirements of CAD applications. However, users could soft-mod the GeForce such that it could perform many of the tasks intended for the much more expensive Quadro.

- High-performance/high-capacity data storage: early workstations tended to use proprietary disk interfaces until the emergence of the SCSI standard in the mid-1980s. Although SCSI interfaces soon became available for PCs, they were comparatively expensive and tended to be limited by the speed of the PC's ISA peripheral bus (although SCSI did become standard on the Apple Macintosh). SCSI is an advanced controller interface which is particularly good where the disk has to cope with multiple requests at once. This makes it suited for use in servers, but its benefits to desktop PCs which mostly run single-user operating systems are less clear. These days, with desktop systems acquiring more multi-user capabilities, the new disk interface of choice is Serial ATA, which has throughput comparable to SCSI but at a lower cost.

- Extremely reliable components: together with multiple CPUs with greater cache and error-correcting memory, this may remain the distinguishing feature of a workstation today. Although most technologies implemented in modern workstations are also available at lower cost for the consumer market, finding good components and making sure they work compatibly with each other is a great challenge in workstation building. Because workstations are designed for high-end tasks such as weather forecasting, video rendering, and game design, it is taken for granted that these systems must be running under full-load, non-stop for several hours or even days without issue. Any off-the-shelf components can be used to build a workstation, but the reliability of such components under such rigorous conditions is uncertain. For this reason, almost no workstations are built by the customer themselves but rather purchased from a vendor such as Hewlett-Packard / HP Inc., Fujitsu, IBM / Lenovo, Sun Microsystems, SGI, Apple, or Dell.

- Tight integration between the OS and the hardware: Workstation vendors both design the hardware and maintain the Unix operating system variant that runs on it. This allows for much more rigorous testing than is possible with an operating system such as Windows. Windows requires that third-party hardware vendors write compliant hardware drivers that are stable and reliable. Also, minor variation in hardware quality such as timing or build quality can affect the reliability of the overall machine. Workstation vendors are able to ensure both the quality of the hardware, and the stability of the operating system drivers by validating these things in-house, and this leads to a generally much more reliable and stable machine.
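The memory limits mentioned under "Large memory configurations" in the list above follow from address width: a processor with n address bits can directly address 2^n bytes. The sketch below works through that arithmetic. The function names are hypothetical, and the 20-bit and 24-bit address widths for the original PC's processor and the 80286 are supplied here as background rather than taken from the text above, which gives only the 4 GB figure for 32-bit processors.

```python
def addressable_bytes(address_bits: int) -> int:
    """Number of bytes reachable with a flat address of the given width."""
    return 2**address_bits


def human(n: int) -> str:
    """Format a byte count using binary (1024-based) units."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    i = 0
    while n >= 1024 and i < len(units) - 1:
        n //= 1024
        i += 1
    return f"{n} {units[i]}"


if __name__ == "__main__":
    # Address widths: 20 and 24 bits are background assumptions not stated
    # in the article; 32 bits corresponds to the 4 GB range it mentions.
    for label, bits in [
        ("original PC processor", 20),
        ("80286", 24),
        ("32-bit (e.g. 80386, SPARC)", 32),
        ("64-bit", 64),
    ]:
        print(f"{label}: {human(addressable_bytes(bits))}")
```

The 640 KB figure for early PCs is smaller than the 1 MiB that 20 address bits allow because part of that range was reserved for purposes other than general program memory.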

Place in the market

Since the turn of the millennium, the definition of "workstation" has blurred to some extent. Many of the components used in lower-end "workstations" are now the same as those used in the consumer market, and the price differential between the lower-end workstation and consumer PCs can be narrower than it once was (and in certain cases in the high-end consumer market, such as the "enthusiast" game market, it can be difficult to tell what qualifies as a "desktop PC" and a "workstation"). In another instance, the Nvidia GeForce 256 graphics card spawned the Quadro, which had the same GPU but different driver support and certifications tailored to the unique requirements of CAD applications and retailed for a much higher price, so many took to using the GeForce as a "poor-man's" workstation card since the hardware was largely as capable plus it could be soft-modded to unlock features nominally exclusive to the Quadro.[1]

Workstations have typically been the drivers of advancements in CPU technology. Although both the consumer desktop and the workstation benefit from CPUs designed around the multicore concept (essentially, multiple processors on a die, the application of which IBM's POWER4 was a pioneer), modern workstations typically use multiple multicore CPUs, error-correcting memory and much larger on-die caches than those found on "consumer-level" CPUs. Such power and reliability are not normally required on a general desktop computer. IBM's POWER-based processor boards and the workstation-level Intel-based Xeon processor boards, for example, have multiple CPUs, more on-die cache and ECC memory, which are features more suited to demanding content-creation, engineering and scientific work than to general desktop computing.

Some workstations are designed for use with only one specific application such as AutoCAD, Avid Xpress Studio HD, 3D Studio Max, etc. To ensure compatibility with the software, purchasers usually ask for a certificate from the software vendor. The certification process makes the workstation's price jump several notches but for professional purposes, reliability may be more important than the initial purchase cost.

Current workstation market

Decline of RISC-based workstations

By January 2009, all RISC-based workstation product lines had been discontinued:

- SGI ended general availability of its MIPS-based SGI Fuel and SGI Tezro workstations in December 2006.[2]

- Hewlett-Packard withdrew its last HP 9000 PA-RISC-based desktop products from the market in January 2008.[3]

- Sun Microsystems announced end-of-life for its last Sun Ultra SPARC workstations in October 2008.[4]

- IBM retired the IntelliStation POWER on January 2, 2009.[5]

Early 2018 saw the reintroduction of commercially available RISC-based workstations in the form of a series of IBM POWER9-based systems by Raptor Computing Systems.[6][7]

Change to x86-64 workstations

The current workstation market uses x86-64 microprocessors. Operating systems available for these platforms include Microsoft Windows, FreeBSD, various Linux distributions, Apple macOS (formerly known as OS X), and Oracle Solaris. Some vendors also market commodity mono-socket systems as workstations.

Three types of products are marketed under the workstation umbrella:

- Workstation blade systems (IBM HC10 or Hewlett-Packard xw460c. Sun Visualization System is akin to these solutions)

- Ultra high-end workstation (SGI Virtu VS3xx)

- Deskside systems containing server-class CPUs and chipsets on large server-class motherboards with high-end RAM (HP Z-series workstations and Fujitsu CELSIUS workstations)

Workstation definition

A significant segment of the desktop market consists of computers expected to perform as workstations, but using PC operating systems and components. Component manufacturers will often segment their product line, and market premium components which are functionally similar to the cheaper "consumer" models but feature a higher level of robustness or performance.

A workstation-class PC may have some of the following features:

- Support for ECC memory

- Larger number of memory sockets which use registered (buffered) modules

- Multiple processor sockets, powerful CPUs

- Multiple displays

- A reliable operating system with advanced features

- Reliable high-performance graphics card

Notes

- RFC 782 defined the workstation environment more generally as hardware and software dedicated to serving a single user, while also providing for the use of additional shared resources.

References

- "Workstation Products". Nvidia. Retrieved 2007-10-02.

- End of General Availability for MIPS® IRIX® Products, Silicon Graphics, December 2006

- "Discontinuance Notice", c8000 Workstation, HP, July 2007.

- A remarketed EOL Sun Ultra 45 workstation, Solar systems.

- "Hardware Withdrawal Announcement", IntelliStation POWER 185 and 285 (PDF), IBM.

- Raptor Launching Talos II Lite POWER9 Computer System At A Lower Cost, Phoronix

- Raptor Announces "Blackbird" Micro-ATX, Low-Cost POWER9 Motherboard, Phoronix

- This article is based on material taken from the Free On-line Dictionary of Computing prior to 1 November 2008 and incorporated under the "relicensing" terms of the GFDL, version 1.3 or later.