Line search

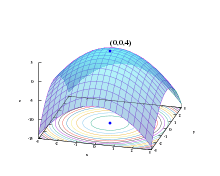

In optimization, the line search strategy is one of two basic iterative approaches to find a local minimum of an objective function . The other approach is trust region.

The line search approach first finds a descent direction along which the objective function will be reduced and then computes a step size that determines how far should move along that direction. The descent direction can be computed by various methods, such as gradient descent or quasi-Newton method. The step size can be determined either exactly or inexactly.

Example use

Here is an example gradient method that uses a line search in step 4.

- Set iteration counter , and make an initial guess for the minimum

- Repeat:

- Compute a descent direction

- Choose to 'loosely' minimize over

- Update , and

- Until < tolerance

At the line search step (4) the algorithm might either exactly minimize h, by solving , or loosely, by asking for a sufficient decrease in h. One example of the former is conjugate gradient method. The latter is called inexact line search and may be performed in a number of ways, such as a backtracking line search or using the Wolfe conditions.

Like other optimization methods, line search may be combined with simulated annealing to allow it to jump over some local minima.

Algorithms

Direct search methods

In this method, the minimum must first be bracketed, so the algorithm must identify points x1 and x2 such that the sought minimum lies between them. The interval is then divided by computing at two internal points, x3 and x4, and rejecting whichever of the two outer points is not adjacent to that of x3 and x4 which has the lowest function value. In subsequent steps, only one extra internal point needs to be calculated. Of the various methods of dividing the interval,[1] golden section search is particularly simple and effective, as the interval proportions are preserved regardless of how the search proceeds:

where

See also

- Golden section search

- Grid search

- Learning rate

- Pattern search (optimization)

- Secant method

References

- Box, M. J.; Davies, D.; Swann, W. H. (1969). Non-Linear optimisation Techniques. Oliver & Boyd.

Further reading

- Dennis, J. E., Jr.; Schnabel, Robert B. (1983). "Globally Convergent Modifications of Newton's Method". Numerical Methods for Unconstrained Optimization and Nonlinear Equations. Englewood Cliffs: Prentice-Hall. pp. 111–154. ISBN 0-13-627216-9.

- Nocedal, Jorge; Wright, Stephen J. (1999). "Line Search Methods". Numerical Optimization. New York: Springer. pp. 34–63. ISBN 0-387-98793-2.

- Sun, Wenyu; Yuan, Ya-Xiang (2006). "Line Search". Optimization Theory and Methods : Nonlinear Programming. New York: Springer. pp. 71–117. ISBN 0-387-24975-3.