So, I'll pose another question:

Why is it necessary to run HP Insight hardware diagnostics on servers prior to provisioning?

In my comment above, I indicated that there's little to gain by doing this preemptively in large HP ProLiant environments. I should clarify my thoughts on that...

In order of descending frequency, let's look at the types of issues you'll typically encounter:

Storage array and disks: The RAID controller will report to the OS, logs, SNMP, email, ILO and light up pretty lights to indicate health.

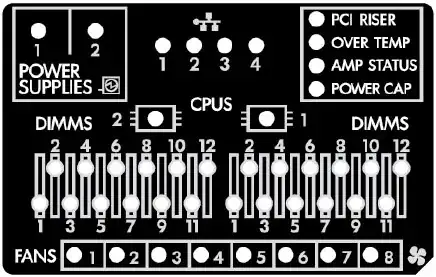

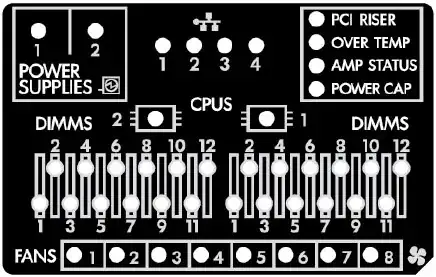

RAM: The POST process will detect RAM status, as well as the system reporting to the OS, logs, SNMP, email, ILO and lighting up an LED indicator on the front panel Systems Insight Display (SID). Also, I'm not a fan of RAM burn-in processes because the error detection of these systems is already robust.

Thermal and fans: Server temperature and fan speed are regulated by the ILO. There are 30+ temperature sensors on these systems, so the cooling system is extremely efficient. This still reports to the OS, logs, SNMP, email and on the SID.

Power Supply: PSU status is reported to the OS, logs, SNMP, email and on the SID, as well as an actual indicator light on the actual power supply unit.

Overall health: This is easy to assess from a glance with the SID display, in addition to the Internal Health and External Health LED. This is also reported to the server's logs, SNMP, email and ILO.

I can't think of any conditions that would be found pre-deployment that wouldn't/couldn't be reported during runtime or post OS install.

The diagnostics loop usually won't find anything when run on a system with no obvious prior issues. This is mainly because the server needs to POST and boot into the utility or Intelligent Provisioning firmware in order to run the utility.

Put another way, any item that would be a serious "SPOF" for the server would probably prevent the system from running its self-diagnostics.

The most common failure items are still fairly robust; disks should be in RAID and are hot-swappable. Fans and power supplies are also hot-swappable. Your RAM has ECC thresholds and there are online spare options for most ProLiant platforms. There's nothing you'll be able to do to induce failure in these components by running diagnostics. Add the fact that you're using HP C7000 Blade enclosures, which have internal redundancies, and your incidence of failure should be pretty low.