Lead time bias

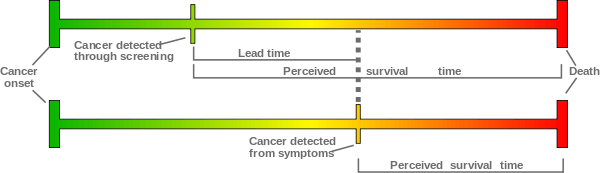

Lead time is the length of time between the detection of a disease (usually based on new, experimental criteria) and its usual clinical presentation and diagnosis (based on traditional criteria). In other words, it is the interval between an early diagnosis made by screening and the point at which the diagnosis would have been made without screening. It is an important factor when evaluating the effectiveness of a specific screening test.[1]

Relationship between screening and survival

The purpose of screening is to diagnose a disease earlier than it would be diagnosed otherwise. Without screening, the disease may be discovered only later, when symptoms appear.

Early diagnosis by screening does not necessarily prolong a person's life. It may only establish that the person has, or is predisposed to, a disease or medical condition, as with DNA testing. In that case no additional life span is gained, and the patient may even suffer added anxiety from living longer with knowledge of the disease. For example, the genetic disorder Huntington's disease is usually diagnosed when symptoms appear at around age 50, and the person dies at around age 65. The typical patient therefore lives about 15 years after diagnosis. A genetic test at birth makes it possible to diagnose this disorder much earlier. If this newborn dies at around age 65, the person will have "survived" 65 years after diagnosis, without having actually lived any longer than those diagnosed without DNA testing.
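The arithmetic of the Huntington's disease example can be written out as a short sketch. The function and constant names are illustrative only; the ages are the approximate figures from the example above, and age at death is assumed to be the same with or without screening.

```python
def survival_after_diagnosis(age_at_diagnosis: int, age_at_death: int) -> int:
    """Years survived, counted from the moment of diagnosis."""
    return age_at_death - age_at_diagnosis


# Approximate ages from the Huntington's disease example (illustrative).
AGE_AT_DEATH = 65           # assumed unchanged by screening
SYMPTOMATIC_DIAGNOSIS = 50  # diagnosis when symptoms appear
GENETIC_TEST_AT_BIRTH = 0   # diagnosis by DNA test at birth

without_screening = survival_after_diagnosis(SYMPTOMATIC_DIAGNOSIS, AGE_AT_DEATH)
with_screening = survival_after_diagnosis(GENETIC_TEST_AT_BIRTH, AGE_AT_DEATH)
lead_time = SYMPTOMATIC_DIAGNOSIS - GENETIC_TEST_AT_BIRTH

print(without_screening)  # 15
print(with_screening)     # 65
print(lead_time)          # 50

# The apparent gain in "survival" is exactly the lead time;
# the age at death has not changed.
assert with_screening - without_screening == lead_time
```

Measured survival rises from 15 to 65 years purely because the starting point of the measurement moved 50 years earlier.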

Raw statistics can therefore make screening appear to increase survival time, when the apparent gain is only the lead time. If a person whose disease was detected early by screening still dies at the age predicted by the usual course of the disease, that person's life has not been prolonged.

Detection by advanced screening does not always mean prolonged survival.

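Lead time bias can affect the interpretation of the five-year survival rate: because survival is counted from the date of diagnosis, diagnosing a disease earlier can raise the rate even when patients do not live any longer.[2]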
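The effect on the five-year survival rate can be illustrated with a small hypothetical cohort. In this sketch the `Patient` class, the cohort values and the 3-year lead time are all invented for illustration; screening is modelled only as moving the date of diagnosis earlier, while every patient's age at death stays fixed.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    age_at_symptoms: int  # age at which diagnosis would be made without screening
    age_at_death: int     # assumed to be unaffected by screening


def five_year_survival_rate(patients: list[Patient], lead_time: int) -> float:
    """Fraction of patients surviving at least five years after diagnosis.

    lead_time is the number of years by which screening advances the
    diagnosis (0 means no screening).
    """
    survivors = sum(
        1
        for p in patients
        if p.age_at_death - (p.age_at_symptoms - lead_time) >= 5
    )
    return survivors / len(patients)


# Hypothetical cohort: 2, 4, 6 and 8 years of survival after symptomatic diagnosis.
cohort = [Patient(60, 62), Patient(60, 64), Patient(60, 66), Patient(60, 68)]

print(five_year_survival_rate(cohort, lead_time=0))  # 0.5
print(five_year_survival_rate(cohort, lead_time=3))  # 1.0
```

The rate doubles from 0.5 to 1.0 although no patient dies at a later age, which is the distortion lead time bias describes.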

Notes

- "Lead time bias". General Practice Notebook.

- Gordis, Leon (2008). Epidemiology. Philadelphia: Saunders. p. 318. ISBN 978-1-4160-4002-6.