Integral test for convergence

In mathematics, the integral test for convergence is a method used to test infinite series of non-negative terms for convergence. It was developed by Colin Maclaurin and Augustin-Louis Cauchy and is sometimes known as the Maclaurin–Cauchy test.


Statement of the test

Consider an integer N and a non-negative function f defined on the unbounded interval [N, ∞), on which it is monotone decreasing. Then the infinite series

$$\sum_{n=N}^{\infty} f(n)$$

converges to a real number if and only if the improper integral

$$\int_N^{\infty} f(x)\,dx$$

is finite. In particular, if the integral diverges, then the series diverges as well.

Remark

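If the improper integral is finite, then the proof also gives the lower and upper bounds

$$\int_N^{\infty} f(x)\,dx \;\le\; \sum_{n=N}^{\infty} f(n) \;\le\; f(N) + \int_N^{\infty} f(x)\,dx \qquad (1)$$

for the infinite series.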
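The bounds (1) are easy to evaluate whenever the improper integral is known in closed form. The following Python sketch assumes the caller supplies f together with the exact value of its integral over [N, ∞); the function name `integral_test_bounds` is hypothetical, and the example f(x) = e^(−x) with N = 0 (integral equal to 1, series equal to e/(e − 1)) is chosen only for illustration.

```python
import math
from typing import Callable, Tuple


def integral_test_bounds(
    f: Callable[[float], float], tail_integral: float, N: int
) -> Tuple[float, float]:
    """Return the lower and upper bounds (1) for sum_{n >= N} f(n).

    Assumes f is non-negative and monotone decreasing on [N, inf) and that
    tail_integral is the finite value of the integral of f over [N, inf).
    """
    lower = tail_integral
    upper = f(N) + tail_integral
    return lower, upper


# Illustrative example: f(x) = exp(-x) on [0, inf); its integral over [0, inf) is 1,
# and the geometric series sum_{n >= 0} exp(-n) equals e / (e - 1).
lower, upper = integral_test_bounds(lambda x: math.exp(-x), 1.0, 0)
exact = math.e / (math.e - 1)
print(lower, exact, upper)
```

Here the bounds are 1 and 2, and the exact sum e/(e − 1) ≈ 1.582 lies between them. The width of the interval is always f(N).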

Proof

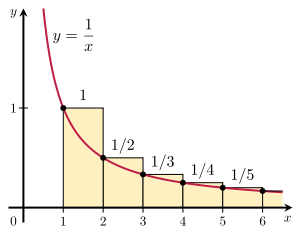

The proof uses the comparison test, comparing the term f(n) with the integral of f over the intervals [n − 1, n) and [n, n + 1), respectively.

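Since f is a monotone decreasing function, we know that

$$f(x) \le f(n) \quad \text{for all } x \in [n, \infty)$$

and

$$f(n) \le f(x) \quad \text{for all } x \in [N, n].$$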

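Hence, for every integer n ≥ N,

$$\int_n^{n+1} f(x)\,dx \;\le\; \int_n^{n+1} f(n)\,dx = f(n) \qquad (2)$$

and, for every integer n ≥ N + 1,

$$f(n) = \int_{n-1}^{n} f(n)\,dx \;\le\; \int_{n-1}^{n} f(x)\,dx. \qquad (3)$$

By summation over all n from N to some larger integer M, we get from (2)

$$\int_N^{M+1} f(x)\,dx = \sum_{n=N}^{M} \int_n^{n+1} f(x)\,dx \;\le\; \sum_{n=N}^{M} f(n)$$

and from (3)

$$\sum_{n=N}^{M} f(n) = f(N) + \sum_{n=N+1}^{M} f(n) \;\le\; f(N) + \sum_{n=N+1}^{M} \int_{n-1}^{n} f(x)\,dx = f(N) + \int_N^{M} f(x)\,dx.$$

Combining these two estimates yields

$$\int_N^{M+1} f(x)\,dx \;\le\; \sum_{n=N}^{M} f(n) \;\le\; f(N) + \int_N^{M} f(x)\,dx.$$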

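Letting M tend to infinity, the bounds in (1) and the result follow, because the partial sums of a series with non-negative terms are non-decreasing and therefore converge if and only if they are bounded.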
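The finite estimate, before M tends to infinity, can be checked numerically. This Python sketch assumes an antiderivative F of f is known in closed form, so that each integral equals F(b) − F(a); the helper name `check_finite_bounds` and the test function f(x) = 1/(1 + x²) with F = arctan and N = 0 are illustrative choices, not part of the proof.

```python
import math
from typing import Callable, Tuple


def check_finite_bounds(
    f: Callable[[float], float], F: Callable[[float], float], N: int, M: int
) -> Tuple[bool, float, float, float]:
    """Check int_N^{M+1} f <= sum_{n=N}^{M} f(n) <= f(N) + int_N^{M} f.

    F is assumed to be an antiderivative of f, so int_a^b f = F(b) - F(a).
    Returns (holds, lower, partial_sum, upper).
    """
    partial_sum = math.fsum(f(n) for n in range(N, M + 1))
    lower = F(M + 1) - F(N)
    upper = f(N) + (F(M) - F(N))
    return lower <= partial_sum <= upper, lower, partial_sum, upper


# f(x) = 1/(1 + x^2) is non-negative and decreasing on [0, inf); F = arctan.
for M in (1, 10, 1000):
    print(M, check_finite_bounds(lambda x: 1 / (1 + x * x), math.atan, 0, M))
```

As M grows, the two bounds approach π/2 and 1 + π/2, which are exactly the bounds (1) for this series.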

Applications

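The harmonic series

$$\sum_{n=1}^{\infty} \frac{1}{n}$$

diverges because, using the natural logarithm as an antiderivative of 1/x and the fundamental theorem of calculus, we get

$$\int_1^M \frac{1}{x}\,dx = \ln x \,\Big|_1^M = \ln M \to \infty \quad \text{for } M \to \infty.$$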
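Applying the finite estimate from the proof with f(x) = 1/x and N = 1 gives ln(M + 1) ≤ 1 + 1/2 + ⋯ + 1/M ≤ 1 + ln M. The short Python sketch below (the function name `harmonic` is an illustrative choice) checks this sandwich and shows how slowly the partial sums grow.

```python
import math


def harmonic(M: int) -> float:
    """Partial sum H_M = 1 + 1/2 + ... + 1/M of the harmonic series."""
    return math.fsum(1.0 / n for n in range(1, M + 1))


# Bounds from the proof with f(x) = 1/x, N = 1 and antiderivative ln:
#   ln(M + 1) <= H_M <= 1 + ln(M)
for M in (10, 1_000, 100_000):
    print(M, math.log(M + 1), harmonic(M), 1 + math.log(M))
```

Both bounds grow without limit, so the series diverges, yet a hundred thousand terms only push the partial sum a little past 12.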

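On the other hand, the series

$$\zeta(1+\varepsilon) = \sum_{n=1}^{\infty} \frac{1}{n^{1+\varepsilon}}$$

(cf. Riemann zeta function) converges for every ε > 0, because by the power rule

$$\int_1^M \frac{1}{x^{1+\varepsilon}}\,dx = -\frac{1}{\varepsilon x^{\varepsilon}} \,\Big|_1^M = \frac{1}{\varepsilon}\left(1 - \frac{1}{M^{\varepsilon}}\right) \le \frac{1}{\varepsilon} < \infty \quad \text{for all } M \ge 1.$$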

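From (1) we get the upper estimate

$$\zeta(1+\varepsilon) = \sum_{n=1}^{\infty} \frac{1}{n^{1+\varepsilon}} \le \frac{1+\varepsilon}{\varepsilon},$$

which can be compared with some of the particular values of the Riemann zeta function.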
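For ε = 1 and ε = 3 the estimate can be compared with the classical values ζ(2) = π²/6 and ζ(4) = π⁴/90. The Python sketch below also reports the lower bound 1/ε, which is the integral term in (1); the function name `zeta_bounds` is hypothetical.

```python
import math


def zeta_bounds(eps: float) -> tuple:
    """Bounds (1) for zeta(1 + eps) with f(x) = x**-(1 + eps) and N = 1.

    The integral over [1, inf) equals 1/eps and f(1) = 1, so
    1/eps <= zeta(1 + eps) <= 1 + 1/eps = (1 + eps)/eps.
    """
    if eps <= 0:
        raise ValueError("eps must be positive")
    integral = 1.0 / eps
    return integral, 1.0 + integral


known = {1.0: math.pi ** 2 / 6, 3.0: math.pi ** 4 / 90}  # zeta(2), zeta(4)
for eps, value in known.items():
    lower, upper = zeta_bounds(eps)
    print(f"eps = {eps}: {lower:.4f} <= {value:.4f} <= {upper:.4f}")
```

The gap between the two bounds is always f(1) = 1, so the estimate becomes relatively sharper as ε → 0, where ζ(1 + ε) itself grows like 1/ε.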

Borderline between divergence and convergence

The above examples involving the harmonic series raise the question whether there are monotone sequences such that f(n) decreases to 0 faster than 1/n but slower than 1/n^(1+ε), in the sense that

$$\lim_{n \to \infty} \frac{f(n)}{1/n} = 0 \qquad \text{and} \qquad \lim_{n \to \infty} \frac{f(n)}{1/n^{1+\varepsilon}} = \infty$$

for every ε > 0, and whether the corresponding series of the f(n) still diverges. Once such a sequence is found, a similar question can be asked with f(n) taking the role of 1/n, and so on. In this way it is possible to investigate the borderline between divergence and convergence of infinite series.

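Using the integral test for convergence, one can show (see below) that, for every natural number k, the series

$$\sum_{n=N_k}^{\infty} \frac{1}{n \ln(n) \ln_2(n) \cdots \ln_k(n)} \qquad (4)$$

still diverges (for k = 1, cf. the proof that the sum of the reciprocals of the primes diverges) but

$$\sum_{n=N_k}^{\infty} \frac{1}{n \ln(n) \ln_2(n) \cdots \ln_{k-1}(n) \bigl(\ln_k(n)\bigr)^{1+\varepsilon}} \qquad (5)$$

converges for every ε > 0. Here ln_k denotes the k-fold composition of the natural logarithm, defined recursively by

$$\ln_k(x) = \begin{cases} \ln(x) & \text{for } k = 1, \\ \ln\bigl(\ln_{k-1}(x)\bigr) & \text{for } k \ge 2. \end{cases}$$

Furthermore, N_k denotes the smallest natural number such that the k-fold composition is well-defined and ln_k(N_k) ≥ 1, i.e.

$$N_k \ge \underbrace{e^{e^{\cdot^{\cdot^{e}}}}}_{k \text{ copies of } e} = e \uparrow\uparrow k,$$

using tetration or Knuth's up-arrow notation.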
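The definitions of ln_k and N_k translate directly into code. In the Python sketch below, the helper names `ln_k` and `smallest_N` are hypothetical. N_k is computed as the ceiling of the tower e ↑↑ k, which works because ln_k is increasing and equals 1 exactly at e ↑↑ k. With double-precision floats the tower overflows for k ≥ 4, so the sketch is only meant for k ≤ 3.

```python
import math


def ln_k(x: float, k: int) -> float:
    """k-fold composition of the natural logarithm: ln_1 = ln, ln_k = ln(ln_{k-1})."""
    for _ in range(k):
        x = math.log(x)  # raises ValueError if the argument is no longer positive
    return x


def smallest_N(k: int) -> int:
    """Smallest natural number N_k with ln_k(N_k) >= 1, i.e. ceil(e ^^ k).

    Only practical for k <= 3: e ^^ 4 overflows a double (OverflowError).
    """
    tower = 1.0
    for _ in range(k):
        tower = math.exp(tower)  # after j steps: e ^^ j (a tower of j copies of e)
    return math.ceil(tower)


for k in (1, 2, 3):
    N = smallest_N(k)
    print(k, N, ln_k(N, k), ln_k(N - 1, k))
```

This gives N_1 = 3, N_2 = 16 and N_3 = 3814280, and in each case ln_k(N_k − 1) < 1 ≤ ln_k(N_k).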

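To see the divergence of the series (4) using the integral test, note that by repeated application of the chain rule

$$\frac{d}{dx} \ln_{k+1}(x) = \frac{d}{dx} \ln\bigl(\ln_k(x)\bigr) = \frac{1}{\ln_k(x)} \frac{d}{dx} \ln_k(x) = \cdots = \frac{1}{x \ln(x) \cdots \ln_k(x)},$$

hence

$$\int_{N_k}^{\infty} \frac{dx}{x \ln(x) \cdots \ln_k(x)} = \ln_{k+1}(x) \,\Big|_{N_k}^{\infty} = \infty.$$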
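For k = 1 the estimate from the proof, with N = N_1 = 3 and antiderivative ln_2, gives ln_2(M + 1) − ln_2(3) ≤ Σ_{n=3}^{M} 1/(n ln n) ≤ 1/(3 ln 3) + ln_2(M) − ln_2(3). The Python sketch below (function names are illustrative) checks this and shows how extremely slowly series (4) diverges.

```python
import math


def lnln(x: float) -> float:
    """ln_2(x) = ln(ln(x)); an antiderivative of 1/(x ln x) for x > 1."""
    return math.log(math.log(x))


def partial_sum_k1(M: int) -> float:
    """Partial sum of series (4) for k = 1: sum over 3 <= n <= M of 1/(n ln n)."""
    return math.fsum(1.0 / (n * math.log(n)) for n in range(3, M + 1))


N = 3  # N_1: the smallest integer with ln(N) >= 1
for M in (10, 10_000, 1_000_000):
    lower = lnln(M + 1) - lnln(N)
    upper = 1.0 / (N * math.log(N)) + (lnln(M) - lnln(N))
    print(M, lower, partial_sum_k1(M), upper)
```

Even after a million terms the upper bound, and hence the partial sum, stays below 3, although the partial sums eventually exceed every bound.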

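To see the convergence of the series (5), note that by the power rule, the chain rule, and the above result

$$-\frac{d}{dx} \frac{1}{\varepsilon \bigl(\ln_k(x)\bigr)^{\varepsilon}} = \frac{1}{\bigl(\ln_k(x)\bigr)^{1+\varepsilon}} \frac{d}{dx} \ln_k(x) = \cdots = \frac{1}{x \ln(x) \cdots \ln_{k-1}(x) \bigl(\ln_k(x)\bigr)^{1+\varepsilon}},$$

hence

$$\int_{N_k}^{\infty} \frac{dx}{x \ln(x) \cdots \ln_{k-1}(x) \bigl(\ln_k(x)\bigr)^{1+\varepsilon}} = -\frac{1}{\varepsilon \bigl(\ln_k(x)\bigr)^{\varepsilon}} \,\Big|_{N_k}^{\infty} = \frac{1}{\varepsilon \bigl(\ln_k(N_k)\bigr)^{\varepsilon}} \le \frac{1}{\varepsilon} < \infty,$$

and (1) gives bounds for the infinite series in (5).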
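The bounds (1) for series (5) can be evaluated directly, since the antiderivative found above gives the integral over [N, ∞) as 1/(ε (ln_k(N))^ε). The Python sketch below does this for general k and ε (the helper names are hypothetical). As an extra illustration, not taken from the text, it also applies (1) to the tail starting at M + 1 and adds the exact partial sum up to M, which narrows the interval to width f(M + 1).

```python
import math
from typing import Tuple


def ln_k(x: float, k: int) -> float:
    """k-fold composition of the natural logarithm."""
    for _ in range(k):
        x = math.log(x)
    return x


def term(n: float, k: int, eps: float) -> float:
    """Summand of series (5): 1 / (n ln(n) ... ln_{k-1}(n) * ln_k(n)**(1 + eps))."""
    denom = n
    for j in range(1, k):
        denom *= ln_k(n, j)
    return 1.0 / (denom * ln_k(n, k) ** (1 + eps))


def bounds_from_1(N: int, k: int, eps: float) -> Tuple[float, float]:
    """Bounds (1) for the sum of (5) over n >= N, assuming N >= N_k."""
    integral = 1.0 / (eps * ln_k(N, k) ** eps)  # integral of the summand over [N, inf)
    return integral, term(N, k, eps) + integral


k, eps, N = 1, 1.0, 3  # N_1 = 3
print(bounds_from_1(N, k, eps))

# Sharper: exact partial sum up to M plus bounds (1) for the tail n >= M + 1.
M = 1000
head = math.fsum(term(n, k, eps) for n in range(N, M + 1))
tail_lo, tail_hi = bounds_from_1(M + 1, k, eps)
print(head + tail_lo, head + tail_hi)
```

For k = 1 and ε = 1 the first pair is about (0.910, 1.186), while the refined interval has width f(1001), roughly 2·10⁻⁵.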

See also

- Convergence tests

- Convergence (mathematics)

- Direct comparison test

- Dominated convergence theorem

- Euler–Maclaurin formula

- Limit comparison test

- Monotone convergence theorem
