I have an Auto Scaling group that launches EC2 instances, and it appears that instances running for longer than roughly 24 hours begin to degrade in performance. The longest-running one ran for 3 days before I terminated it manually. That seems unusually long for an Auto Scaling group in which instances are terminated every so often.

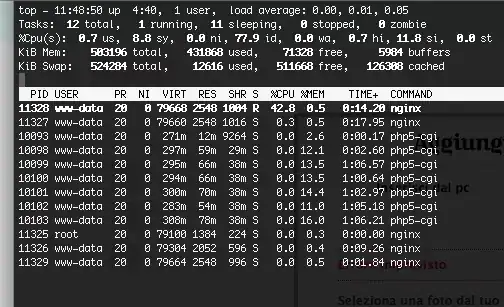

Specifically, the CPU Utilization User% climbs to 30-40% and stays there, while the other instances in the group sit at around 10-15%. This drains CPU credits and degrades the overall Elastic Beanstalk (EB) environment metrics, such as average response time and the number of 5xx responses.
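As a rough illustration of why the gap between 10-15% and 30-40% matters on a burstable (T2/T3-style) instance: one CPU credit equals one vCPU running at 100% for one minute, so the net change in the credit balance per hour is (baseline% - utilization%) / 100 × vCPUs × 60. The instance type is not stated above, so this sketch assumes t3.small-like numbers (2 vCPUs, 20% baseline per vCPU, i.e. 24 credits earned per hour); substitute the real values for your instance type.

```python
# Rough CPU-credit arithmetic for a burstable (T2/T3-style) instance.
# One CPU credit = one vCPU at 100% utilization for one minute.
# ASSUMPTION: the instance type is unknown; these values are illustrative
# and match a t3.small (2 vCPUs, 20% baseline per vCPU, 24 credits/hour).

BASELINE_PCT = 20.0  # hypothetical baseline utilization per vCPU, percent
VCPUS = 2            # hypothetical vCPU count


def net_credits_per_hour(avg_cpu_pct: float,
                         baseline_pct: float = BASELINE_PCT,
                         vcpus: int = VCPUS) -> float:
    """Credits earned minus credits spent over one hour.

    avg_cpu_pct is CloudWatch-style CPUUtilization, i.e. averaged across
    all vCPUs. A negative result means the credit balance is draining.
    In standard mode, earning stops once the balance reaches the instance's
    maximum; that cap is ignored here.
    """
    earned = baseline_pct / 100 * vcpus * 60
    spent = avg_cpu_pct / 100 * vcpus * 60
    return earned - spent


# Compare a typical healthy instance with a degraded one.
for label, cpu in [("healthy", 12.5), ("degraded", 35.0)]:
    print(f"{label:>8}: {net_credits_per_hour(cpu):+.1f} credits/hour")
```

With these assumed numbers, a healthy instance gains about 9 credits per hour while a degraded one loses about 18, so a degraded instance in standard mode would eventually exhaust its balance and be held to its baseline (or, in unlimited mode, incur surplus-credit charges).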

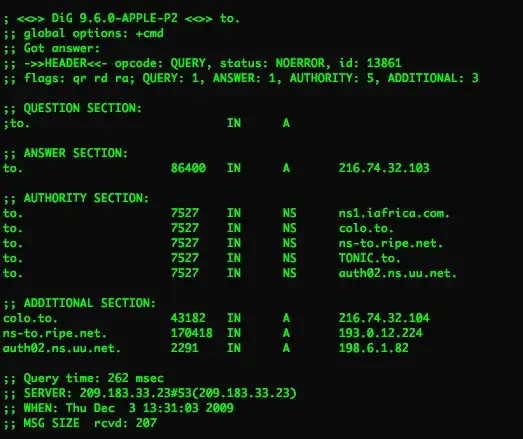

1) Why would an instance gradually degrade after roughly 24 hours? The instances run Parse Server (Node.js). How can I find out what is wrong with an affected instance? I plan to SSH into it the next time this happens and inspect the running processes with `top`.
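Alongside `top`, it may help to capture a process snapshot from both a fresh instance and one that has been up for more than 24 hours and compare the Node.js process's CPU and resident memory; steady memory growth, for example, could point to extra garbage-collection work, though that is only one possible cause. The sketch below assumes the snapshot was taken with procps `ps -eo pid,etimes,pcpu,rss,comm --sort=-pcpu`; the `Proc` class, the `top_processes` helper, and the sample text are hypothetical. Note that `ps` reports %CPU as total CPU time divided by the process's elapsed time (a lifetime average), whereas `top` shows the current rate.

```python
# Parse a `ps` snapshot and list the processes using the most CPU.
# ASSUMPTION: the text came from (procps, e.g. on Amazon Linux):
#   ps -eo pid,etimes,pcpu,rss,comm --sort=-pcpu
# ps's %CPU is a lifetime average (cputime / elapsed time), not a live rate.

from dataclasses import dataclass


@dataclass
class Proc:
    pid: int
    elapsed_s: int  # seconds since the process started (etimes)
    cpu_pct: float  # lifetime-average %CPU (pcpu)
    rss_kib: int    # resident set size in KiB (rss)
    command: str    # executable name (comm)


def top_processes(ps_text: str, n: int = 5) -> list[Proc]:
    """Return the n processes with the highest %CPU from a ps snapshot."""
    procs = []
    for line in ps_text.strip().splitlines()[1:]:  # skip the header row
        # maxsplit=4 keeps a command name that contains spaces intact
        pid, etimes, pcpu, rss, comm = line.split(None, 4)
        procs.append(Proc(int(pid), int(etimes), float(pcpu), int(rss), comm))
    procs.sort(key=lambda p: p.cpu_pct, reverse=True)
    return procs[:n]


# Hypothetical snapshot, for illustration only.
sample = """\
  PID ELAPSED %CPU    RSS COMMAND
  871  300000  0.3  20000 nginx
 3120   90000 35.2 612000 node
"""

for p in top_processes(sample, n=2):
    print(p.pid, p.command, p.cpu_pct, p.rss_kib)
```

Saving such snapshots periodically (e.g. hourly) on one instance would show whether the Node.js process's RSS and CPU drift upward over its lifetime.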

2) How can I automatically terminate instances that have been running for more than 24 hours? I tried to set up a CloudWatch alarm, but the EC2 > Per-Instance Metrics category does not provide an uptime metric. I could set an alarm on CPU Utilization instead, but I am unsure how this metric behaves on faulty instances, so terminating after 24 hours seems to be the safer bet.
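Before writing custom code, it may be worth checking the Auto Scaling group's maximum instance lifetime setting (`MaxInstanceLifetime`, in seconds, with a minimum of 86,400, i.e. one day), which replaces instances once they reach that age; since Elastic Beanstalk manages the group, changes made directly to it might be overwritten by the environment. If a custom job is preferred, a minimal sketch of the selection logic is below. It assumes the instance records are shaped like the `Instances` entries from boto3's EC2 `describe_instances` (an `InstanceId` and a timezone-aware `LaunchTime`); `instances_to_recycle` is a hypothetical helper, and fetching and terminating are left out.

```python
# Select instances whose uptime exceeds a maximum age.
# ASSUMPTION: each record looks like an entry from boto3's EC2
# describe_instances response ("InstanceId" plus a timezone-aware
# "LaunchTime" datetime). Fetching and terminating are not shown.

from datetime import datetime, timedelta, timezone

MAX_AGE = timedelta(hours=24)  # threshold from the question


def instances_to_recycle(instances, now=None, max_age=MAX_AGE):
    """Return the IDs of instances that have been up longer than max_age."""
    if now is None:
        now = datetime.now(timezone.utc)
    return [i["InstanceId"] for i in instances
            if now - i["LaunchTime"] > max_age]


# Hypothetical records, for illustration only.
example = [
    {"InstanceId": "i-aaaa", "LaunchTime": datetime(2020, 1, 1, tzinfo=timezone.utc)},
    {"InstanceId": "i-bbbb", "LaunchTime": datetime(2020, 1, 2, 12, tzinfo=timezone.utc)},
]
print(instances_to_recycle(example, now=datetime(2020, 1, 3, tzinfo=timezone.utc)))
```

Run on a schedule (for example, from a scheduled Lambda function), the selected IDs could then be passed to the Auto Scaling `TerminateInstanceInAutoScalingGroup` API with `ShouldDecrementDesiredCapacity` set to false, so that the group launches a replacement rather than shrinking.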

Update

Regarding 1): the cause could be this issue: https://github.com/parse-community/parse-server/issues/6061