To give you some context:

I have two server environments running the same app. The first, which I intend to abandon, is a Standard Google App Engine environment that has many limitations. The second one is a Google Kubernetes cluster running my Python app with Gunicorn.

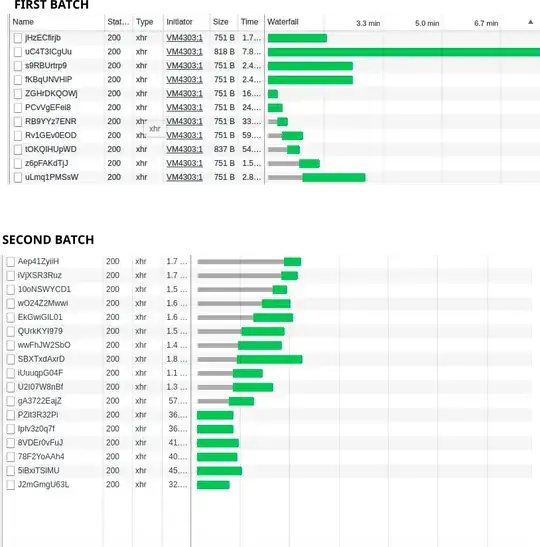

Concurrency

On the first server, I can send multiple requests to the app and it answers many of them simultaneously. I ran two batches of simultaneous requests against the app in both environments. On Google App Engine, both batches were answered concurrently, and the first didn't block the second.

On Kubernetes, the server only responds to 6 requests simultaneously, and the first batch blocks the second. I've read some posts on how to achieve Gunicorn concurrency with gevent or multiple threads, and all of them say I need to have CPU cores, but no matter how much CPU I give it, the limitation remains. I've tried Google nodes from 1 vCPU to 8 vCPU and it doesn't change much.

Can you guys give me any ideas on what I might be missing? Maybe a limitation of the Google cluster nodes?

Kubernetes response waterfall

As you can see, the second batch only started to get responses after the first one started to finish.
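For anyone who wants to reproduce the two-batch test described above from a script, here is a minimal sketch. The helper names (`fetch`, `timed_call`, `run_batches`), the batch size, the gap between batches, and the URL in the usage comment are all assumptions for illustration, not the exact test I ran.

```python
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def fetch(url):
    """Issue one GET request and return the HTTP status code."""
    with urllib.request.urlopen(url) as resp:
        resp.read()
        return resp.status


def timed_call(func, arg, t0):
    """Run func(arg) and return (start, end, result), in seconds since t0."""
    start = time.monotonic() - t0
    result = func(arg)
    end = time.monotonic() - t0
    return start, end, result


def run_batches(func, arg, batch_size=10, batches=2, gap=1.0):
    """Fire `batches` groups of `batch_size` concurrent calls, `gap` seconds apart.

    Returns a list of (batch_index, start, end, result) tuples.
    """
    t0 = time.monotonic()
    futures = []
    # One thread per request, so the client itself never queues a call.
    with ThreadPoolExecutor(max_workers=batch_size * batches) as pool:
        for b in range(batches):
            for _ in range(batch_size):
                futures.append((b, pool.submit(timed_call, func, arg, t0)))
            if b < batches - 1:
                time.sleep(gap)
        return [(b, *f.result()) for b, f in futures]


# Usage (hypothetical endpoint):
# for batch, start, end, status in run_batches(fetch, "http://localhost:8000/"):
#     print(f"batch {batch}: {start:.2f}s -> {end:.2f}s (HTTP {status})")
```

If the second batch's end times only begin after the first batch's end times, the server (or something in front of it) is serializing the batches, which is the same pattern as in the waterfall.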

App Engine response waterfall

Gunicorn configuration

I've tried the standard worker class with the recommended setting of 2 * cores + 1 workers, and 12 threads.

I've also tried gevent with --worker-connections 2000.

None of them made a difference. The response times were very similar.
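To make the settings above concrete, this is roughly how they would look as a Gunicorn config file (Gunicorn config files are plain Python). It is a sketch under assumptions: the file name `gunicorn.conf.py` is my choice for illustration, and in practice I passed the equivalent flags on the command line instead.

```python
# gunicorn.conf.py (hypothetical file name), loaded with:
#   gunicorn -c gunicorn.conf.py main:app
import multiprocessing

bind = "0.0.0.0:8000"
timeout = 7200

# Threaded setup: 2 * cores + 1 worker processes, 12 threads each.
# Note: inside a container, cpu_count() may report the node's cores
# rather than the CPU limit set on the pod.
workers = 2 * multiprocessing.cpu_count() + 1
threads = 12

# gevent alternative (requires the gevent package to be installed):
# worker_class = "gevent"
# worker_connections = 2000
```

With this setup the theoretical ceiling is `workers * threads` requests in flight at once, which is well above the 6 I'm seeing.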

The container section of my Kubernetes file:

    spec:
      nodeSelector:
        cloud.google.com/gke-nodepool: default-pool
      containers:
      - name: python-gunicorn
        image: gcr.io/project-name/webapp:1.0
        command:
        - /env/bin/gunicorn
        - --bind
        - 0.0.0.0:8000
        - main:app
        - --chdir
        - /deploy/app
        #- --error-logfile
        #- "-"
        - --timeout
        - "7200"
        - -w
        - "3"
        - --threads
        - "8"
        #- -k
        #- gevent
        #- --worker-connections
        #- "2000"