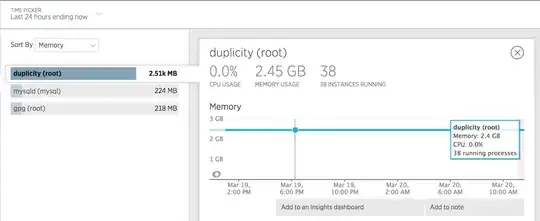

I'm experiencing constant high memory usage from Duplicity on all servers running it as a backup tool to S3.

Isn't Duplicity supposed to run its backup task and exit afterwards, or am I missing something here?

    duply -v
    duply version 2.0.1
    (http://duply.net)
    Using installed duplicity version 0.7.11, python 2.7.6, gpg 1.4.16 (Home: ~/.gnupg), awk 'GNU Awk 4.0.1', grep 'grep (GNU grep) 2.16', bash '4.3.11(1)-release (x86_64-pc-linux-gnu)'.

I'm using Duply to manage profiles on each server. Here's one of them:

    GPG_KEY='FOO'
    GPG_PW='FOO'

    TARGET='s3://s3-eu-central-1.amazonaws.com/foo-bucket/bar-location'

    export AWS_ACCESS_KEY_ID='FOO'
    export AWS_SECRET_ACCESS_KEY='FOO'

    # base directory to backup
    SOURCE='/'

    # exclude folders containing exclusion file (since duplicity 0.5.14)
    # Uncomment the following two lines to enable this setting.
    FILENAME='.duplicity-ignore'
    DUPL_PARAMS="$DUPL_PARAMS --exclude-if-present '$FILENAME'"

    # Time frame for old backups to keep, Used for the "purge" command.
    # see duplicity man page, chapter TIME_FORMATS)
    MAX_AGE=2M

    # Number of full backups to keep. Used for the "purge-full" command.
    # See duplicity man page, action "remove-all-but-n-full".
    MAX_FULL_BACKUPS=2

    # Number of full backups for which incrementals will be kept for.
    # Used for the "purge-incr" command.
    # See duplicity man page, action "remove-all-inc-of-but-n-full".
    MAX_FULLS_WITH_INCRS=1

    # activates duplicity --full-if-older-than option (since duplicity v0.4.4.RC3)
    # forces a full backup if last full backup reaches a specified age, for the
    # format of MAX_FULLBKP_AGE see duplicity man page, chapter TIME_FORMATS
    # Uncomment the following two lines to enable this setting.
    MAX_FULLBKP_AGE=1M
    DUPL_PARAMS="$DUPL_PARAMS --full-if-older-than $MAX_FULLBKP_AGE "

    # sets duplicity --volsize option (available since v0.4.3.RC7)
    # set the size of backup chunks to VOLSIZE MB instead of the default 25MB.
    # VOLSIZE must be number of MB's to set the volume size to.
    # Uncomment the following two lines to enable this setting.
    VOLSIZE=100
    DUPL_PARAMS="$DUPL_PARAMS --volsize $VOLSIZE "

    # more duplicity command line options can be added in the following way
    # don't forget to leave a separating space char at the end
    #DUPL_PARAMS="$DUPL_PARAMS --put_your_options_here "

Here are the cron jobs that run the backups:

    12 3 * * * nice -n19 ionice -c2 -n7 duply database backup_verify_purge --force --name foo_database >> /var/log/duplicity.log 2>&1
    26 3 * * * nice -n19 ionice -c2 -n7 duply websites backup_verify_purge --force --name foo_websites >> /var/log/duplicity.log 2>&1
    53 4 * * * nice -n19 ionice -c2 -n7 duply system backup_verify_purge --force --name foo_system >> /var/log/duplicity.log 2>&1
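
One way to check whether a Duplicity process is actually still resident between these cron runs is to sum the resident memory of every process with that command name. The following is only a rough sketch: `sum_rss_kb` is a hypothetical helper name, and it assumes the process shows up in `ps` with the command name `duplicity`, which may differ depending on how it is launched.

```shell
#!/usr/bin/env bash

# Sum the resident set size (KiB) of all processes whose command name is $1.
# Expects "RSS COMMAND" pairs on stdin, as printed by `ps -eo rss=,comm=`.
# Prints 0 when no process matches.
sum_rss_kb() {
  awk -v name="$1" '$2 == name { total += $1 } END { print total + 0 }'
}

# Usage on a live system (assumes the command name is "duplicity"):
#   ps -eo rss=,comm= | sum_rss_kb duplicity
```

If this prints `0` outside the backup windows, no process with that name is holding memory at that moment.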

Here's a 24 hour graph of the memory usage: