Interior-point method

Interior-point methods (also referred to as barrier methods or IPMs) are a class of algorithms for solving linear and nonlinear convex optimization problems.

John von Neumann[1] suggested an interior-point method for linear programming that was neither polynomial-time nor efficient in practice. In fact, it turned out to be slower than the commonly used simplex method.

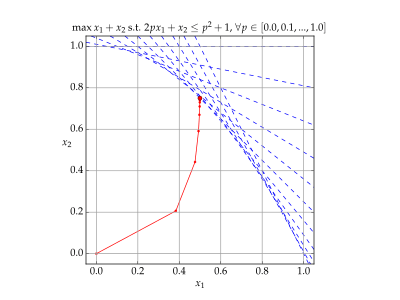

An interior-point method was discovered by Soviet mathematician I. I. Dikin in 1967 and reinvented in the U.S. in the mid-1980s. In 1984, Narendra Karmarkar developed a method for linear programming, called Karmarkar's algorithm, which runs in provably polynomial time and is also very efficient in practice. It enabled solutions of linear programming problems that were beyond the capabilities of the simplex method. In contrast to the simplex method, it reaches an optimal solution by traversing the interior of the feasible region. The method can be generalized to convex programming based on a self-concordant barrier function used to encode the convex set.

Any convex optimization problem can be transformed into minimizing (or maximizing) a linear function over a convex set by converting to the epigraph form.[2] The idea of encoding the feasible set using a barrier and designing barrier methods was studied by Anthony V. Fiacco, Garth P. McCormick, and others in the early 1960s. Yurii Nesterov and Arkadi Nemirovski later came up with a special class of such barriers that can be used to encode any convex set. These barriers guarantee that the number of iterations of the algorithm is bounded by a polynomial in the dimension and accuracy of the solution.[3]
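As a brief illustration of the epigraph form mentioned above, the following sketch writes the transformation for a generic convex problem. The symbols $C$ (a convex feasible set) and $t$ (an auxiliary scalar variable) are notation assumed here for illustration and do not come from the text.

```latex
\begin{align*}
\min_{x \in C} f(x)
\quad\Longleftrightarrow\quad
\min_{(x,t)} \; t
\quad \text{subject to } (x, t) \in \{(x, t) : x \in C,\ f(x) \le t\}.
\end{align*}
```

The objective $t$ is linear in the enlarged variable $(x, t)$, and the constraint set is convex whenever $f$ and $C$ are convex.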

Karmarkar's breakthrough revitalized the study of interior-point methods and barrier problems, showing that it was possible to create an algorithm for linear programming that had polynomial complexity and, moreover, was competitive with the simplex method. Khachiyan's ellipsoid method was already a polynomial-time algorithm; however, it was too slow to be of practical interest.

The class of primal-dual path-following interior-point methods is considered the most successful. Mehrotra's predictor–corrector algorithm provides the basis for most implementations of this class of methods.[4]

Primal-dual interior-point method for nonlinear optimization

The primal-dual method's idea is easy to demonstrate for constrained nonlinear optimization. For simplicity, consider the all-inequality version of a nonlinear optimization problem:

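- minimize $f(x)$ subject to $c_i(x) \ge 0$ for $i = 1, \ldots, m$, $x \in \mathbb{R}^n$, where $f : \mathbb{R}^n \to \mathbb{R}$ and $c_i : \mathbb{R}^n \to \mathbb{R}$. (1)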

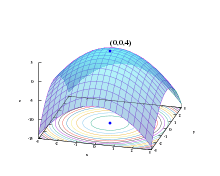

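The logarithmic barrier function associated with (1) is

$$B(x, \mu) = f(x) - \mu \sum_{i=1}^{m} \log\bigl(c_i(x)\bigr). \qquad (2)$$

Here $\mu$ is a small positive scalar, sometimes called the "barrier parameter". As $\mu$ converges to zero, the minimum of $B(x, \mu)$ should converge to a solution of (1).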
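The effect of the barrier parameter can be seen on a one-dimensional toy problem. The sketch below assumes the barrier function in its usual form, $B(x, \mu) = f(x) - \mu \sum_i \log c_i(x)$, and picks an illustrative problem that is not from the text: minimize $x^2$ subject to $x - 1 \ge 0$, whose solution is $x = 1$. The function names and the bisection approach are choices made for this example only.

```python
import math

# Toy problem (an assumption for illustration, not from the article):
#   minimize x**2  subject to  c(x) = x - 1 >= 0,  with solution x = 1.

def barrier(x, mu):
    """Logarithmic barrier function B(x, mu) = x**2 - mu * log(x - 1)."""
    return x * x - mu * math.log(x - 1.0)

def barrier_minimizer(mu, lo=1.0, hi=10.0, iters=200):
    """Minimize B(., mu) on x > 1 by bisection on its derivative.

    B is strictly convex on x > 1, so B'(x) = 2x - mu / (x - 1) is
    increasing and has exactly one root there.
    """
    def d_barrier(x):
        return 2.0 * x - mu / (x - 1.0)

    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if d_barrier(mid) > 0.0:
            hi = mid   # root lies to the left of mid
        else:
            lo = mid   # root lies to the right of mid
    return 0.5 * (lo + hi)

mus = [1.0, 0.1, 0.01, 0.001]
minimizers = [barrier_minimizer(mu) for mu in mus]

for mu, x in zip(mus, minimizers):
    # As mu shrinks, the minimizer moves toward the constrained solution x = 1.
    print(f"mu = {mu:<6} x_mu = {x:.6f}  B(x_mu, mu) = {barrier(x, mu):.6f}")
```

For this toy problem the minimizer has the closed form $x_\mu = \tfrac{1}{2}(1 + \sqrt{1 + 2\mu})$, so the printed values can be checked by hand; every iterate stays strictly inside the feasible region $x > 1$.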

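The barrier function gradient is

$$g_b = g - \mu \sum_{i=1}^{m} \frac{1}{c_i(x)} \nabla c_i(x), \qquad (3)$$

where $g$ is the gradient of the original function $f(x)$, and $\nabla c_i$ is the gradient of $c_i$.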

In addition to the original ("primal") variable $x$, we introduce a Lagrange-multiplier-inspired dual variable $\lambda \in \mathbb{R}^m$ satisfying

$$c_i(x) \lambda_i = \mu, \quad \forall i = 1, \ldots, m. \qquad (4)$$

(4) is sometimes called the "perturbed complementarity" condition, for its resemblance to "complementary slackness" in KKT conditions.

We try to find those $(x_\mu, \lambda_\mu)$ for which the gradient of the barrier function is zero.

Applying (4) to (3), we get an equation for the gradient:

$$g - A^T \lambda = 0, \qquad (5)$$

where the matrix $A$ is the Jacobian of the constraints $c(x)$.

The intuition behind (5) is that the gradient of $f(x)$ should lie in the subspace spanned by the constraints' gradients. The "perturbed complementarity" condition (4) with small $\mu$ can be understood as the condition that the solution should either lie near the boundary $c_i(x) = 0$, or that the projection of the gradient $g$ on the normal of the constraint component $c_i(x)$ should be almost zero.
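A small worked example may help make the two conditions concrete. It assumes they take their usual form, perturbed complementarity $c_i(x) \lambda_i = \mu$ and stationarity $g - A^T \lambda = 0$, and uses an illustrative one-dimensional problem that is not from the text.

```latex
\begin{align*}
&\text{minimize } x^2 \quad \text{subject to } c(x) = x - 1 \ge 0, \\
&\text{perturbed complementarity: } (x - 1)\,\lambda = \mu, \\
&\text{stationarity: } 2x - \lambda = 0
  \;\Rightarrow\; 2x(x - 1) = \mu
  \;\Rightarrow\; x_\mu = \tfrac{1}{2}\bigl(1 + \sqrt{1 + 2\mu}\bigr),
  \quad \lambda_\mu = 2 x_\mu, \\
&\mu \to 0: \quad x_\mu \to 1, \quad \lambda_\mu \to 2.
\end{align*}
```

In the limit the constraint is active ($x = 1$) and $\lambda = 2$ is the multiplier for which the gradient $2x = 2$ is a multiple of the constraint gradient $1$, which is the KKT solution of the toy problem.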

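Applying Newton's method to (4) and (5), we get an equation for the $(x, \lambda)$ update $(p_x, p_\lambda)$:

$$\begin{pmatrix} W & -A^T \\ \Lambda A & C \end{pmatrix} \begin{pmatrix} p_x \\ p_\lambda \end{pmatrix} = \begin{pmatrix} -g + A^T \lambda \\ \mu 1 - C \lambda \end{pmatrix},$$

where $W$ is the Hessian matrix of the Lagrangian $f(x) - \lambda^T c(x)$ (the derivative of the left-hand side of (5) with respect to $x$), $\Lambda$ is a diagonal matrix of $\lambda$, $C$ is a diagonal matrix with $C_{ii} = c_i(x)$, and $1$ denotes the vector of ones.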

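Because of (1) and (4), the condition

$$\lambda \ge 0$$

should be enforced at each step. This can be done by choosing an appropriate step length $\alpha$:

$$(x, \lambda) \to (x + \alpha p_x, \lambda + \alpha p_\lambda).$$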
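The steps above can be sketched in a few lines of pure Python. This is a minimal illustration under stated assumptions, not a production solver: the toy problem (minimize $x_1^2 + x_2^2$ subject to $x_1 + x_2 - 1 \ge 0$ and $x_1 \ge 0$), the rule that shrinks $\mu$ by a fixed factor after every Newton step, the step-halving rule for $\alpha$, and all function names are choices made for this example. Besides keeping $\lambda > 0$, the sketch also keeps $c(x) > 0$ so that the iterates stay in the interior.

```python
def solve(M, b):
    """Solve M z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(M)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    z = [0.0] * n
    for i in reversed(range(n)):
        s = aug[i][n] - sum(aug[i][k] * z[k] for k in range(i + 1, n))
        z[i] = s / aug[i][i]
    return z

# Toy problem (an assumption for illustration):
#   minimize x1^2 + x2^2  subject to  x1 + x2 - 1 >= 0  and  x1 >= 0.
def grad_f(x):
    return [2.0 * x[0], 2.0 * x[1]]

def constraints(x):
    return [x[0] + x[1] - 1.0, x[0]]

A = [[1.0, 1.0], [1.0, 0.0]]  # Jacobian of the (linear) constraints
W = [[2.0, 0.0], [0.0, 2.0]]  # Hessian of the Lagrangian; constraints are linear

def primal_dual(x, lam, mu=1.0, shrink=0.2, mu_min=1e-9):
    """One Newton step per barrier parameter value, then shrink mu."""
    n, m = len(x), len(lam)
    while mu > mu_min:
        g, c = grad_f(x), constraints(x)
        # Assemble [[W, -A^T], [Lambda A, C]] and the right-hand side.
        M = [[0.0] * (n + m) for _ in range(n + m)]
        rhs = [0.0] * (n + m)
        for i in range(n):
            for j in range(n):
                M[i][j] = W[i][j]
            for k in range(m):
                M[i][n + k] = -A[k][i]
            rhs[i] = -g[i] + sum(A[k][i] * lam[k] for k in range(m))
        for k in range(m):
            for j in range(n):
                M[n + k][j] = lam[k] * A[k][j]
            M[n + k][n + k] = c[k]
            rhs[n + k] = mu - c[k] * lam[k]
        p = solve(M, rhs)
        px, pl = p[:n], p[n:]
        # Halve alpha until lambda and c(x) both stay strictly positive.
        alpha = 1.0
        while True:
            x_new = [x[i] + alpha * px[i] for i in range(n)]
            lam_new = [lam[k] + alpha * pl[k] for k in range(m)]
            if min(lam_new) > 0.0 and min(constraints(x_new)) > 0.0:
                break
            alpha *= 0.5
        x, lam = x_new, lam_new
        mu *= shrink
    return x, lam

# Start from a strictly feasible point with positive multipliers.
x_opt, lam_opt = primal_dual(x=[2.0, 1.0], lam=[1.0, 1.0])
print(x_opt, lam_opt)  # approaches x = (0.5, 0.5), lambda = (1, 0)
```

The KKT solution of this toy problem is $x = (0.5, 0.5)$ with $\lambda = (1, 0)$: the first constraint is active and the second is not, so its multiplier tends to zero with $\mu$. Practical implementations use more careful rules for updating $\mu$ and $\alpha$, for example the predictor-corrector scheme mentioned earlier.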

References

- Dantzig, George B.; Thapa, Mukund N. (2003). Linear Programming 2: Theory and Extensions. Springer-Verlag.

- Boyd, Stephen; Vandenberghe, Lieven (2004). Convex Optimization. Cambridge: Cambridge University Press. p. 143. ISBN 978-0-521-83378-3. MR 2061575.

- Wright, Margaret H. (2004). "The interior-point revolution in optimization: History, recent developments, and lasting consequences". Bulletin of the American Mathematical Society. 42: 39–57. doi:10.1090/S0273-0979-04-01040-7. MR 2115066.

- Potra, Florian A.; Wright, Stephen J. (2000). "Interior-point methods". Journal of Computational and Applied Mathematics. 124 (1–2): 281–302. doi:10.1016/S0377-0427(00)00433-7.

Bibliography

- Dikin, I.I. (1967). "Iterative solution of problems of linear and quadratic programming". Dokl. Akad. Nauk SSSR. 174 (1): 747–748.

- Bonnans, J. Frédéric; Gilbert, J. Charles; Lemaréchal, Claude; Sagastizábal, Claudia A. (2006). Numerical optimization: Theoretical and practical aspects. Universitext (Second revised ed. of translation of 1997 French ed.). Berlin: Springer-Verlag. pp. xiv+490. doi:10.1007/978-3-540-35447-5. ISBN 978-3-540-35445-1. MR 2265882.

- Karmarkar, N. (1984). "A new polynomial-time algorithm for linear programming" (PDF). Proceedings of the sixteenth annual ACM symposium on Theory of computing - STOC '84. p. 302. doi:10.1145/800057.808695. ISBN 0-89791-133-4. Archived from the original (PDF) on 28 December 2013.

- Mehrotra, Sanjay (1992). "On the Implementation of a Primal-Dual Interior Point Method". SIAM Journal on Optimization. 2 (4): 575–601. doi:10.1137/0802028.

- Nocedal, Jorge; Wright, Stephen (1999). Numerical Optimization. New York, NY: Springer. ISBN 978-0-387-98793-4.

- Press, WH; Teukolsky, SA; Vetterling, WT; Flannery, BP (2007). "Section 10.11. Linear Programming: Interior-Point Methods". Numerical Recipes: The Art of Scientific Computing (3rd ed.). New York: Cambridge University Press. ISBN 978-0-521-88068-8.

- Wright, Stephen (1997). Primal-Dual Interior-Point Methods. Philadelphia, PA: SIAM. ISBN 978-0-89871-382-4.

- Boyd, Stephen; Vandenberghe, Lieven (2004). Convex Optimization (PDF). Cambridge University Press.