Heritrix

Heritrix is a web crawler designed for web archiving. It was written in Java by the Internet Archive and is available under a free software license. Its main interface is accessible through a web browser, and a command-line tool can optionally be used to initiate crawls.

| |

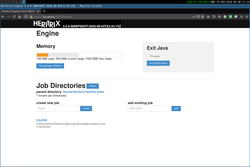

Screenshot of Heritrix Admin Console. | |

| Stable release | 3.4.0

/ August 3, 2020 |

|---|---|

| Repository | |

| Written in | Java |

| Operating system | Linux/Unix-like/Windows (unsupported) |

| Type | Web crawler |

| License | Apache License |

| Website | crawler |

Heritrix was developed jointly by the Internet Archive and the Nordic national libraries on specifications written in early 2003. The first official release was in January 2004, and it has been continually improved by employees of the Internet Archive and other interested parties.

For many years, Heritrix was not the main crawler used to collect content for the Internet Archive's web collection.[1] As of 2011, the largest contributor to the collection was Alexa Internet,[1] which crawled the web for its own purposes using a crawler named ia_archiver and then donated the material to the Internet Archive.[1] The Internet Archive did some crawling of its own with Heritrix, but only on a smaller scale.[1]

Starting in 2008, the Internet Archive began improving performance so that it could do its own wide-scale crawling, and it now collects most of its content itself.[2]

Projects using Heritrix

A number of organizations and national libraries are using Heritrix, among them:

- Austrian National Library, Web Archiving

- Bibliotheca Alexandrina's Internet Archive

- Bibliothèque nationale de France

- British Library

- California Digital Library's Web Archiving Service

- CiteSeerX

- Documenting Internet2

- Internet Memory Foundation

- Library and Archives Canada

- Library of Congress[3]

- National and University Library of Iceland

- National Library of Finland

- National Library of New Zealand

- National Library of the Netherlands (Koninklijke Bibliotheek)[4]

- Netarkivet.dk

- Smithsonian Institution Archives

- National Library of Israel

Arc files

Older versions of Heritrix stored the web resources they crawled in Arc files by default. This Arc format is wholly unrelated to the ARC compressed archive format of the same name; it has been used by the Internet Archive since 1996 to store its web archives. Newer versions save in the WARC file format by default, which is similar to Arc but more precisely specified and more flexible. Heritrix can also be configured to store files in a directory layout similar to the one produced by Wget, using each resource's URL to name its directory and filename.
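The directory layout can be illustrated with a small Python sketch. It is a hypothetical mapping in the spirit of Wget's host-then-path layout, not Heritrix's actual mirror writer: the function name `mirror_path`, the `mirror` root directory, the `index.html` default for paths ending in `/`, and the decision to ignore ports and query strings are all assumptions made for illustration.

```python
from pathlib import PurePosixPath
from urllib.parse import urlsplit


def mirror_path(url: str, root: str = "mirror") -> PurePosixPath:
    """Map a URL to a relative file path: host first, then the URL path.

    Hypothetical sketch: ports and query strings are ignored, and a path that
    ends in "/" (or is empty) is stored as "index.html".
    """
    parts = urlsplit(url)
    host = parts.hostname or "unknown-host"
    path = parts.path or "/"
    if path.endswith("/"):
        path += "index.html"
    # Drop empty, "." and ".." segments so a URL cannot escape the root directory.
    segments = [s for s in path.split("/") if s not in ("", ".", "..")]
    return PurePosixPath(root, host, *segments)


if __name__ == "__main__":
    print(mirror_path("http://foo.edu:80/hello.html"))  # mirror/foo.edu/hello.html
    print(mirror_path("http://foo.edu/docs/"))          # mirror/foo.edu/docs/index.html
```

Putting the host at the top level keeps resources from different sites in separate folders; the trade-off is that two URLs differing only in their query string would map to the same file in this sketch.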

An Arc file stores multiple archived resources in a single file to avoid managing a large number of small files. The file consists of a sequence of URL records, each beginning with a header line containing metadata about how the resource was requested, followed by the HTTP header and the response. Arc files typically range between 100 and 600 MB.

Example:

```
filedesc://IA-2006062.arc 0.0.0.0 20060622190110 text/plain 76
1 1 InternetArchive
URL IP-address Archive-date Content-type Archive-length

http://foo.edu:80/hello.html 127.10.100.2 19961104142103 text/html 187
HTTP/1.1 200 OK
Date: Thu, 22 Jun 2006 19:01:15 GMT
Server: Apache
Last-Modified: Sat, 10 Jun 2006 22:33:11 GMT
Content-Length: 30
Content-Type: text/html

<html>
Hello World!!!
</html>
```
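The record structure of this example can be read with a short Python sketch. It assumes an uncompressed Arc file held in memory, single `\n` line endings, and header lines with exactly the five space-separated fields shown in the example; Arc files are often gzip-compressed record by record and other header versions exist, neither of which this sketch handles. The names `ArcRecord`, `read_arc_records`, and `EXAMPLE_ARC` are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterator


@dataclass
class ArcRecord:
    offset: int        # byte offset at which the record's header line starts
    url: str
    ip_address: str
    archive_date: str  # 14-digit timestamp, YYYYMMDDhhmmss
    content_type: str
    length: int        # declared number of content bytes after the header line
    content: bytes


def read_arc_records(data: bytes) -> Iterator[ArcRecord]:
    """Yield records from an uncompressed, version-1 style Arc file in memory.

    Assumes each record is one header line with five space-separated fields,
    followed by `length` bytes of content; blank lines between records are
    treated as separators.
    """
    pos = 0
    while pos < len(data):
        if data[pos:pos + 1] == b"\n":  # skip the separator line between records
            pos += 1
            continue
        end = data.find(b"\n", pos)
        if end == -1:
            raise ValueError(f"truncated header line at offset {pos}")
        fields = data[pos:end].decode("ascii").split(" ")
        if len(fields) != 5:
            raise ValueError(f"expected 5 header fields at offset {pos}, got {len(fields)}")
        url, ip, date, ctype, length = fields
        n = int(length)
        body_start = end + 1
        # Slicing tolerates a final record shorter than its declared length.
        yield ArcRecord(pos, url, ip, date, ctype, n, data[body_start:body_start + n])
        pos = body_start + n


# The example Arc file from the text, with "\n" line endings.
EXAMPLE_ARC = (
    b"filedesc://IA-2006062.arc 0.0.0.0 20060622190110 text/plain 76\n"
    b"1 1 InternetArchive\n"
    b"URL IP-address Archive-date Content-type Archive-length\n"
    b"\n"
    b"http://foo.edu:80/hello.html 127.10.100.2 19961104142103 text/html 187\n"
    b"HTTP/1.1 200 OK\n"
    b"Date: Thu, 22 Jun 2006 19:01:15 GMT\n"
    b"Server: Apache\n"
    b"Last-Modified: Sat, 10 Jun 2006 22:33:11 GMT\n"
    b"Content-Length: 30\n"
    b"Content-Type: text/html\n"
    b"\n"
    b"<html>\n"
    b"Hello World!!!\n"
    b"</html>\n"
)

if __name__ == "__main__":
    for rec in read_arc_records(EXAMPLE_ARC):
        print(rec.offset, rec.url, rec.content_type)
    # 0 filedesc://IA-2006062.arc text/plain
    # 140 http://foo.edu:80/hello.html text/html
```

Run on the example, the `hello.html` record starts at offset 140: 63 bytes for the `filedesc` header line, 76 bytes for the version block it declares, and 1 byte for the blank separator line.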

Tools for processing Arc files

Heritrix includes a command-line tool called arcreader, which can be used to extract the contents of an Arc file. The following command lists all the URLs and metadata stored in the given Arc file (in CDX format):

arcreader IA-2006062.arc

The following command extracts hello.html from the above example assuming the record starts at offset 140:

arcreader -o 140 -f dump IA-2006062.arc
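Because the byte offset of a record is known, that record can be read with a single seek instead of scanning the whole file. The Python sketch below shows the idea for an uncompressed Arc file; `dump_record_at` and `split_http_response` are hypothetical helpers, not arcreader's implementation, and they do not reproduce arcreader's output format.

```python
import io
from typing import BinaryIO, Tuple


def dump_record_at(stream: BinaryIO, offset: int) -> Tuple[str, bytes]:
    """Return (header_line, content) for the Arc record starting at `offset`.

    Assumes an uncompressed Arc file whose header line ends with the
    record's content length, as in the example above.
    """
    stream.seek(offset)
    line = stream.readline()
    if not line:
        raise ValueError(f"no record at offset {offset}")
    header = line.decode("ascii").rstrip("\r\n")
    length = int(header.rsplit(" ", 1)[1])  # last field is the content length
    return header, stream.read(length)


def split_http_response(content: bytes) -> Tuple[bytes, bytes]:
    """Split stored HTTP response bytes into (headers, body)."""
    # Real HTTP uses CRLF line endings; the example uses bare LF, so accept either.
    for sep in (b"\r\n\r\n", b"\n\n"):
        head, found, body = content.partition(sep)
        if found:
            return head, body
    return content, b""


EXAMPLE_ARC = (
    b"filedesc://IA-2006062.arc 0.0.0.0 20060622190110 text/plain 76\n"
    b"1 1 InternetArchive\n"
    b"URL IP-address Archive-date Content-type Archive-length\n"
    b"\n"
    b"http://foo.edu:80/hello.html 127.10.100.2 19961104142103 text/html 187\n"
    b"HTTP/1.1 200 OK\n"
    b"Date: Thu, 22 Jun 2006 19:01:15 GMT\n"
    b"Server: Apache\n"
    b"Last-Modified: Sat, 10 Jun 2006 22:33:11 GMT\n"
    b"Content-Length: 30\n"
    b"Content-Type: text/html\n"
    b"\n"
    b"<html>\n"
    b"Hello World!!!\n"
    b"</html>\n"
)

if __name__ == "__main__":
    header, content = dump_record_at(io.BytesIO(EXAMPLE_ARC), 140)
    print(header)
    _, body = split_http_response(content)
    print(body.decode("ascii"), end="")
```

This random access is the reason index files record offsets alongside URLs: a lookup only needs the file name and offset to jump straight to one resource.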


Command-line tools

Heritrix comes with several command-line tools:

- htmlextractor - displays the links Heritrix would extract for a given URL

- hoppath.pl - recreates the hop path (path of links) to the specified URL from a completed crawl

- manifest_bundle.pl - bundles all resources referenced by a crawl manifest file into an uncompressed or compressed tarball

- cmdline-jmxclient - enables command-line control of Heritrix

- arcreader - extracts contents of ARC files (see above)
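The idea behind hoppath.pl can be sketched in Python: given, for each URL in a finished crawl, the URL it was discovered on and the kind of hop that led to it, walk those links back to a seed. This is not hoppath.pl itself and does not parse Heritrix's logs; the `ViaMap` input format and the `hop_path` function are hypothetical, and the letters `L` (link) and `E` (embed) in the example are used only as labels.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical input format: each crawled URL maps to the URL it was discovered
# on (None for a seed) and a one-letter label for the kind of hop.
ViaMap = Dict[str, Tuple[Optional[str], str]]


def hop_path(via: ViaMap, url: str) -> List[Tuple[str, str]]:
    """Return [(hop_label, url), ...] ordered from the seed to `url`.

    Follows "via" links backwards until a seed is reached. Raises KeyError if a
    URL on the chain is missing from `via`, and ValueError if the chain loops.
    """
    chain: List[Tuple[str, str]] = []
    seen = set()
    current: Optional[str] = url
    while current is not None:
        if current in seen:
            raise ValueError(f"cycle in via links at {current}")
        seen.add(current)
        parent, hop = via[current]
        chain.append((hop, current))
        current = parent
    chain.reverse()  # collected from url back to the seed; flip to seed-first
    return chain


if __name__ == "__main__":
    via: ViaMap = {
        "http://example.org/": (None, ""),
        "http://example.org/a.html": ("http://example.org/", "L"),
        "http://example.org/logo.png": ("http://example.org/a.html", "E"),
    }
    path = hop_path(via, "http://example.org/logo.png")
    for hop, u in path:
        print(hop or "(seed)", u)
    print("".join(hop for hop, _ in path))  # LE
```

Tracking visited URLs costs a little memory but guarantees the walk terminates even if the input contains a loop.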

Further tools are available as part of the Internet Archive's warctools project.[5]


References

As of this edit, this article uses content from "Re: Control over the Internet Archive besides just “Disallow /”?", which is licensed in a way that permits reuse under the Creative Commons Attribution-ShareAlike 3.0 Unported License, but not under the GFDL. All relevant terms must be followed.

- Kris (6 September 2011). "Re: Control over the Internet Archive besides just "Disallow /"?". Pro Webmasters Stack Exchange. Stack Exchange, Inc. Retrieved 7 January 2013.

- "Wayback Machine: Now with 240,000,000,000 URLs - Internet Archive Blogs". blog.archive.org. Retrieved 11 September 2017.

- "About - Web Archiving (Library of Congress)". www.loc.gov. Retrieved 29 October 2017.

- "Technische aspecten bij webarchivering - Koninklijke Bibliotheek". www.kb.nl. Retrieved 11 September 2017.

- "warctools". 25 August 2017. Retrieved 11 September 2017 – via GitHub.

- Burner, M. (1997). "Crawling towards eternity – building an archive of the World Wide Web". Web Techniques. 2 (5). Archived from the original on 1 January 2008.

- Mohr, G.; Kimpton, M.; Stack, M.; Ranitovic, I. (2004). "Introduction to Heritrix, an archival quality web crawler" (PDF). Proceedings of the 4th International Web Archiving Workshop (IWAW’04). Archived from the original (PDF) on 12 June 2011. Retrieved 9 March 2007.

- Sigurðsson, K. (2005). "Incremental crawling with Heritrix" (PDF). Proceedings of the 5th International Web Archiving Workshop (IWAW’05). Archived from the original (PDF) on 12 June 2011. Retrieved 23 June 2006.

External links

Tools by Internet Archive:

- Heritrix - official wiki

- NutchWAX - search web archive collections

- Wayback (Open source Wayback Machine) - search and navigate web archive collections using NutchWAX

Links to related tools:

- Arc file format

- How to run Heritrix in Windows

- WERA (Web ARchive Access) - search and navigate web archive collections using NutchWAX