Connectionism

Connectionism is an approach in the field of cognitive science that hopes to explain mental phenomena using artificial neural networks (ANNs).[1] Connectionism presents a cognitive theory based on simultaneously occurring, distributed signal activity via connections that can be represented numerically, where learning occurs by modifying connection strengths based on experience.[2]

Some advantages of the connectionist approach include its applicability to a broad array of functions, structural approximation to biological neurons, low requirements for innate structure, and capacity for graceful degradation.[3] Some disadvantages include the difficulty in deciphering how ANNs process information and a resultant difficulty explaining phenomena at a higher level.[2]

The success of deep learning networks in the past decade has greatly increased the popularity of this approach, but the complexity and scale of such networks have brought with them increased interpretability problems.[1] Connectionism is seen by many to offer an alternative to classical theories of mind based on symbolic computation, but the extent to which the two approaches are compatible has been the subject of much debate since their inception.[1]

Basic principles

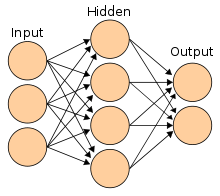

The central connectionist principle is that mental phenomena can be described by interconnected networks of simple and often uniform units. The form of the connections and the units can vary from model to model. For example, units in the network could represent neurons and the connections could represent synapses, as in the human brain.

Spreading activation

In most connectionist models, networks change over time. A closely related and very common aspect of connectionist models is activation. At any time, a unit in the network has an activation, which is a numerical value intended to represent some aspect of the unit. For example, if the units in the model are neurons, the activation could represent the probability that the neuron would generate an action potential spike. Activation typically spreads from a unit to all the other units connected to it. Spreading activation is always a feature of neural network models, and it is very common in connectionist models used by cognitive psychologists.
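As a rough illustration of spreading activation, the following Python sketch passes activation along weighted connections for a single time step. The unit names, the connection strengths, and the `retention` parameter are assumptions made for this example and are not taken from any particular published model.

```python
def spread_activation(activation, weights, retention=0.5):
    """Return the activations after one step of spreading.

    activation: dict mapping each unit name to its current activation.
    weights: dict mapping (source, target) pairs to a connection strength.
    retention: fraction of its own activation that a unit keeps (assumed).
    """
    # Each unit first keeps a fraction of its previous activation.
    updated = {unit: retention * value for unit, value in activation.items()}
    # Each connection then passes weighted activation from source to target.
    for (source, target), strength in weights.items():
        incoming = strength * activation.get(source, 0.0)
        updated[target] = updated.get(target, 0.0) + incoming
    return updated


# Hypothetical three-unit network in which only "dog" is initially active.
start = {"dog": 1.0, "cat": 0.0, "bone": 0.0}
links = {("dog", "cat"): 0.5, ("dog", "bone"): 0.8}
after_one_step = spread_activation(start, links)
```

After one step, the initially inactive units "cat" and "bone" have received activation in proportion to the strength of their connection from "dog".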

Neural networks

Neural networks are by far the most commonly used connectionist model today. Though there is a large variety of neural network models, they almost always follow two basic principles regarding the mind:

- Any mental state can be described as an N-dimensional vector of numeric activation values over neural units in a network.

- Memory is created by modifying the strength of the connections between neural units. The connection strengths, or "weights", are generally represented as an N×N matrix.

Most of the variety among neural network models comes from:

- Interpretation of units: Units can be interpreted as neurons or groups of neurons.

- Definition of activation: Activation can be defined in a variety of ways. For example, in a Boltzmann machine, the activation is interpreted as the probability of generating an action potential spike, and is determined via a logistic function on the sum of the inputs to a unit.

- Learning algorithm: Different networks modify their connections differently. In general, any mathematically defined change in connection weights over time is referred to as the "learning algorithm".
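The ingredients above (a state vector, an N×N weight matrix, and a logistic definition of activation) can be put together in a minimal Python sketch. It computes, for each unit, a logistic function of the summed weighted input, read as a firing probability in the spirit of the Boltzmann machine example. The function names and the indexing convention are assumptions for illustration, and the bias and temperature terms that such models usually include are omitted for brevity.

```python
import math


def logistic(x):
    """Logistic function, mapping any real input into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def firing_probabilities(state, weights):
    """Return one firing probability per unit.

    state: list of N activation values (the N-dimensional state vector).
    weights: N x N list of lists; weights[i][j] is the assumed strength of
             the connection from unit j to unit i.
    """
    n = len(state)
    return [
        logistic(sum(weights[i][j] * state[j] for j in range(n)))
        for i in range(n)
    ]


# Hypothetical three-unit example: only unit 2 sends input to unit 0.
state = [1.0, 0.0, 1.0]
weights = [
    [0.0, 0.0, 2.0],
    [0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0],
]
probabilities = firing_probabilities(state, weights)
```

Units that receive no input sit at a probability of 0.5, while unit 0, which receives positive input, is pushed above that value.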

Connectionists are in agreement that recurrent neural networks (directed networks wherein connections of the network can form a directed cycle) are a better model of the brain than feedforward neural networks (directed networks with no cycles, that is, directed acyclic graphs or DAGs). Many recurrent connectionist models also incorporate dynamical systems theory. Many researchers, such as the connectionist Paul Smolensky, have argued that connectionist models will evolve toward fully continuous, high-dimensional, non-linear, dynamical systems approaches.

Biological realism

Connectionist work in general does not need to be biologically realistic and therefore suffers from a lack of neuroscientific plausibility.[4][5][6][7][8][9][10] However, the structure of neural networks is derived from that of biological neurons, and this parallel in low-level structure is often argued to be an advantage of connectionism in modeling cognitive structures compared with other approaches.[3] One area where connectionist models are thought to be biologically implausible is with respect to error-propagation networks that are needed to support learning,[11][12] but error propagation can explain some of the biologically-generated electrical activity seen at the scalp in event-related potentials such as the N400 and P600,[13] and this provides some biological support for one of the key assumptions of connectionist learning procedures.

Learning

The weights in a neural network are adjusted according to some learning rule or algorithm, such as Hebbian learning. Learning always involves modifying the connection weights, and connectionists have created many sophisticated learning procedures for doing so. In general, these procedures use mathematical formulas to determine the change in weights when given sets of data consisting of activation vectors for some subset of the neural units. Several studies have focused on designing teaching-learning methods based on connectionism.[14]
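As an example of such a rule, the sketch below implements one common textbook formulation of Hebbian learning, in which the weight between two units grows in proportion to the product of their activations. The function name, the learning rate, and the choice to leave self-connections untouched are assumptions made for illustration.

```python
def hebbian_update(weights, activations, learning_rate=0.1):
    """Return a new weight matrix after one Hebbian step.

    weights: N x N list of lists of connection strengths.
    activations: list of N activation values for the current pattern.
    Self-connections (the diagonal) are left unchanged by assumption.
    """
    n = len(activations)
    return [
        [
            weights[i][j]
            if i == j
            else weights[i][j] + learning_rate * activations[i] * activations[j]
            for j in range(n)
        ]
        for i in range(n)
    ]


# Units 0 and 1 are active together, unit 2 is silent.
initial = [[0.0] * 3 for _ in range(3)]
learned = hebbian_update(initial, [1.0, 1.0, 0.0])
```

Only the connections between the two co-active units are strengthened; connections involving the silent unit stay at zero.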

Formalizing learning in this way gives connectionists many tools. A very common strategy in connectionist learning methods is to incorporate gradient descent over an error surface in a space defined by the weight matrix. All gradient descent learning in connectionist models involves changing each weight in proportion to the negative of the partial derivative of the error surface with respect to that weight. Backpropagation (BP), first made popular in the 1980s, is probably the most commonly known connectionist gradient descent algorithm today.[12]
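To make the gradient descent idea concrete, the following sketch trains a single linear unit on one input pattern. It assumes a squared error E = 0.5 * (output - target)**2, so that the partial derivative of E with respect to weight i is (output - target) * x_i. The function name, learning rate, and data are illustrative assumptions. This is not backpropagation itself, which applies the same principle through several layers.

```python
def gradient_step(weights, inputs, target, learning_rate=0.1):
    """Take one gradient descent step for a single linear unit."""
    # Forward pass: the output is the weighted sum of the inputs.
    output = sum(w * x for w, x in zip(weights, inputs))
    # Partial derivative of E = 0.5 * (output - target)**2 for each weight.
    gradient = [(output - target) * x for x in inputs]
    # Move each weight a small distance against its partial derivative.
    return [w - learning_rate * g for w, g in zip(weights, gradient)]


# Hypothetical training data: one input pattern with a desired output of 1.
pattern = [1.0, 2.0]
weights = [0.0, 0.0]
for _ in range(50):
    weights = gradient_step(weights, pattern, target=1.0)
final_output = sum(w * x for w, x in zip(weights, pattern))
```

With these values, each step halves the remaining error, so the output approaches the target quickly.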

History

Connectionism can be traced to ideas more than a century old, which were little more than speculation until the mid-to-late 20th century.

Parallel distributed processing

The prevailing connectionist approach today was originally known as parallel distributed processing (PDP). It was an artificial neural network approach that stressed the parallel nature of neural processing, and the distributed nature of neural representations. It provided a general mathematical framework for researchers to operate in. The framework involved eight major aspects:

- A set of processing units, represented by a set of integers.

- An activation for each unit, represented by a vector of time-dependent functions.

- An output function for each unit, represented by a vector of functions on the activations.

- A pattern of connectivity among units, represented by a matrix of real numbers indicating connection strength.

- A propagation rule spreading the activations via the connections, represented by a function on the output of the units.

- An activation rule for combining inputs to a unit to determine its new activation, represented by a function on the current activation and propagation.

- A learning rule for modifying connections based on experience, represented by a change in the weights based on any number of variables.

- An environment that provides the system with experience, represented by sets of activation vectors for some subset of the units.
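The eight aspects listed above can be mapped onto a small Python class, as in the sketch below. Everything specific in it is an assumption chosen for illustration: the identity output function, the weighted-sum propagation rule, the activation rule that averages the current activation with a logistic function of the net input, the Hebbian learning rule, and an environment that supplies a value for every unit rather than for a subset. The PDP framework itself leaves each of these choices open.

```python
import math


class PDPNetwork:
    """Toy network organised around the eight aspects of the framework."""

    def __init__(self, weights, learning_rate=0.1):
        self.units = list(range(len(weights)))        # 1. processing units
        self.activations = [0.0 for _ in self.units]  # 2. activation per unit
        # 4. Connectivity: weights[i][j] runs from unit j to unit i.
        self.weights = [row[:] for row in weights]
        self.learning_rate = learning_rate

    def output(self, activation):
        # 3. Output function: identity (an assumption).
        return activation

    def propagate(self):
        # 5. Propagation rule: weighted sum of the sending units' outputs.
        outputs = [self.output(a) for a in self.activations]
        return [
            sum(self.weights[i][j] * outputs[j] for j in self.units)
            for i in self.units
        ]

    def activate(self, net_inputs):
        # 6. Activation rule: combines current activation and net input.
        self.activations = [
            0.5 * a + 0.5 / (1.0 + math.exp(-net))
            for a, net in zip(self.activations, net_inputs)
        ]

    def learn(self):
        # 7. Learning rule: Hebbian change between distinct units.
        outputs = [self.output(a) for a in self.activations]
        for i in self.units:
            for j in self.units:
                if i != j:
                    change = self.activations[i] * outputs[j]
                    self.weights[i][j] += self.learning_rate * change

    def experience(self, pattern):
        # 8. Environment: an activation vector presented to the units.
        self.activations = list(pattern)
        self.activate(self.propagate())
        self.learn()


# Hypothetical two-unit network with one connection from unit 0 to unit 1.
network = PDPNetwork([[0.0, 0.0], [1.0, 0.0]])
network.experience([1.0, 0.0])
```

Swapping any single method for a different rule yields a different model within the same overall framework.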

Much of the research that led to the development of PDP was done in the 1970s, but PDP became popular in the 1980s with the release of the books Parallel Distributed Processing: Explorations in the Microstructure of Cognition, Volume 1 (Foundations) and Volume 2 (Psychological and Biological Models), by James L. McClelland, David E. Rumelhart and the PDP Research Group. The books are now considered seminal connectionist works, and it is now common to fully equate PDP and connectionism, although the term "connectionism" is not used in the books.

Earlier work

PDP's direct roots were the perceptron theories of researchers such as Frank Rosenblatt from the 1950s and 1960s. But perceptron models were made very unpopular by the book Perceptrons by Marvin Minsky and Seymour Papert, published in 1969. It demonstrated the limits on the sorts of functions that single-layered (no hidden layer) perceptrons can calculate, showing that even simple functions like the exclusive disjunction (XOR) could not be handled properly. The PDP books overcame this limitation by showing that multi-level, non-linear neural networks were far more robust and could be used for a vast array of functions.[15]
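The XOR limitation can be illustrated with a short Python sketch. It searches a small grid of weights for a single threshold unit that computes XOR and finds none, then shows a network with one hidden layer that does. The grid search is only an illustration over sampled values, not a proof, and the hand-set hidden-layer weights are assumptions chosen for clarity; the networks discussed in the PDP books learned their weights and used smooth non-linearities rather than a hard threshold.

```python
from itertools import product


def step(x):
    """Threshold activation: 1 if the net input is positive, else 0."""
    return 1 if x > 0 else 0


def single_layer(x1, x2, w1, w2, bias):
    """A perceptron with no hidden layer."""
    return step(w1 * x1 + w2 * x2 + bias)


def two_layer_xor(x1, x2):
    """A network with two hidden threshold units and hand-set weights."""
    hidden_or = step(x1 + x2 - 0.5)    # fires if at least one input is on
    hidden_and = step(x1 + x2 - 1.5)   # fires only if both inputs are on
    return step(hidden_or - hidden_and - 0.5)


XOR_TABLE = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

# Try every weight and bias combination on a grid from -4 to 4 in steps of 0.5.
grid = [k / 2 for k in range(-8, 9)]
single_layer_solutions = [
    (w1, w2, bias)
    for w1, w2, bias in product(grid, repeat=3)
    if all(
        single_layer(x1, x2, w1, w2, bias) == y
        for (x1, x2), y in XOR_TABLE.items()
    )
]

two_layer_results = {inputs: two_layer_xor(*inputs) for inputs in XOR_TABLE}
```

The search comes back empty because XOR is not linearly separable, whereas the hidden units re-describe the inputs in a form that a final threshold unit can separate.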

Many earlier researchers advocated connectionist-style models, for example, in the 1940s and 1950s, Warren McCulloch and Walter Pitts (MP neuron), Donald Olding Hebb, and Karl Lashley. McCulloch and Pitts showed how neural systems could implement first-order logic: their classic paper "A Logical Calculus of Ideas Immanent in Nervous Activity" (1943) is important in this development. They were influenced by the important work of Nicolas Rashevsky in the 1930s. Hebb contributed greatly to speculations about neural functioning, and proposed a learning principle, Hebbian learning, that is still used today. Lashley argued for distributed representations as a result of his failure to find anything like a localized engram in years of lesion experiments.
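A simplified version of the McCulloch-Pitts (MP) neuron can be sketched in a few lines of Python to show how threshold units realise basic logical operations. The sketch assumes binary inputs, a unit that fires when the number of active excitatory inputs reaches a threshold, and absolute inhibition (any active inhibitory input prevents firing). The function names are hypothetical, and the original paper's formalism is considerably richer than this.

```python
def mp_neuron(excitatory, inhibitory=(), threshold=1):
    """Return 1 if the unit fires and 0 otherwise.

    excitatory: iterable of binary (0 or 1) excitatory inputs.
    inhibitory: iterable of binary inhibitory inputs; any active one
                vetoes firing (absolute inhibition, as assumed here).
    threshold: number of active excitatory inputs needed to fire.
    """
    if any(inhibitory):
        return 0
    return 1 if sum(excitatory) >= threshold else 0


def logical_and(a, b):
    # Both excitatory inputs must be active to reach the threshold of 2.
    return mp_neuron([a, b], threshold=2)


def logical_or(a, b):
    # A single active excitatory input is enough.
    return mp_neuron([a, b], threshold=1)


def logical_not(a):
    # With a threshold of 0 the unit fires unless its inhibitory input is on.
    return mp_neuron([], inhibitory=[a], threshold=0)
```

Composing such units yields more complex logical expressions, which is the sense in which networks of idealised neurons can implement logic.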

Connectionism apart from PDP

Though PDP is the dominant form of connectionism, other theoretical work should also be classified as connectionist.

Many connectionist principles can be traced to early work in psychology, such as that of William James.[16] Psychological theories based on knowledge about the human brain were fashionable in the late 19th century. As early as 1869, the neurologist John Hughlings Jackson argued for multi-level, distributed systems. Following from this lead, Herbert Spencer's Principles of Psychology, 3rd edition (1872), and Sigmund Freud's Project for a Scientific Psychology (composed 1895) propounded connectionist or proto-connectionist theories. These tended to be speculative theories. But by the early 20th century, Edward Thorndike was conducting experiments on learning that posited a connectionist-type network.

Friedrich Hayek independently conceived the Hebbian synapse learning model in a paper presented in 1920 and developed that model into a global brain theory constituted of networks of Hebbian synapses building into larger systems of maps and memory networks. Hayek's breakthrough work was cited by Frank Rosenblatt in his perceptron paper.

Another form of connectionist model was the relational network framework developed by the linguist Sydney Lamb in the 1960s. Relational networks have only been used by linguists, and were never unified with the PDP approach. As a result, they are now used by very few researchers.

There are also hybrid connectionist models, mostly mixing symbolic representations with neural network models. The hybrid approach has been advocated by some researchers (such as Ron Sun).

Connectionism vs. computationalism debate

As connectionism became increasingly popular in the late 1980s, some researchers (including Jerry Fodor, Steven Pinker and others) reacted against it. They argued that connectionism, as then developing, threatened to obliterate what they saw as the progress being made in the fields of cognitive science and psychology by the classical approach of computationalism. Computationalism is a specific form of cognitivism that argues that mental activity is computational, that is, that the mind operates by performing purely formal operations on symbols, like a Turing machine. Some researchers argued that the trend in connectionism represented a reversion toward associationism and the abandonment of the idea of a language of thought, something they saw as mistaken. In contrast, those very tendencies made connectionism attractive for other researchers.

Connectionism and computationalism need not be at odds, but the debate in the late 1980s and early 1990s led to opposition between the two approaches. Throughout the debate, some researchers have argued that connectionism and computationalism are fully compatible, though full consensus on this issue has not been reached. Differences between the two approaches include the following:

- Computationalists posit symbolic models that need not be structurally similar to underlying brain structure, whereas connectionists engage in "low-level" modeling, trying to ensure that their models resemble neurological structures.

- Computationalists in general focus on the structure of explicit symbols (mental models) and syntactical rules for their internal manipulation, whereas connectionists focus on learning from environmental stimuli and storing this information in a form of connections between neurons.

- Computationalists believe that internal mental activity consists of manipulation of explicit symbols, whereas connectionists believe that the manipulation of explicit symbols provides a poor model of mental activity.

- Computationalists often posit domain-specific symbolic sub-systems designed to support learning in specific areas of cognition (e.g., language, intentionality, number), whereas connectionists posit one or a small set of very general learning mechanisms.

Despite these differences, some theorists have proposed that the connectionist architecture is simply the manner in which organic brains happen to implement the symbol-manipulation system. This is logically possible, as it is well known that connectionist models can implement symbol-manipulation systems of the kind used in computationalist models,[17] as indeed they must be able to do if they are to explain the human ability to perform symbol-manipulation tasks. Several cognitive models combining both symbol-manipulative and connectionist architectures have been proposed, notable among them Paul Smolensky's Integrated Connectionist/Symbolic Cognitive Architecture (ICS).[1][18] But the debate rests on whether this symbol manipulation forms the foundation of cognition in general, so this is not a potential vindication of computationalism. Nonetheless, computational descriptions may be helpful high-level descriptions of, for example, the cognition of logic.

The debate was largely centred on logical arguments about whether connectionist networks could produce the syntactic structure observed in this sort of reasoning. This was later achieved, although by using fast variable-binding abilities outside of those standardly assumed in connectionist models.[17][19] As of 2016, progress in neurophysiology and general advances in the understanding of neural networks have led to the successful modelling of a great many of these early problems, and the debate about fundamental cognition has thus largely been decided among neuroscientists in favour of connectionism. However, these fairly recent developments have yet to reach consensus acceptance among those working in other fields, such as psychology or philosophy of mind.

Part of the appeal of computational descriptions is that they are relatively easy to interpret, and thus may be seen as contributing to our understanding of particular mental processes, whereas connectionist models are in general more opaque, to the extent that they may be describable only in very general terms (such as specifying the learning algorithm, the number of units, etc.), or in unhelpfully low-level terms. In this sense connectionist models may instantiate, and thereby provide evidence for, a broad theory of cognition (i.e., connectionism), without representing a helpful theory of the particular process that is being modelled. On this view, the debate might be considered to reflect, to some extent, a mere difference in the level of analysis at which particular theories are framed. Some researchers suggest that the analysis gap is the consequence of connectionist mechanisms giving rise to emergent phenomena that may be describable in computational terms.[20]

The recent popularity of dynamical systems in philosophy of mind has added a new perspective on the debate; some authors now argue that any split between connectionism and computationalism is more conclusively characterized as a split between computationalism and dynamical systems.

In 2014, Alex Graves and others from DeepMind published a series of papers describing a novel deep neural network structure called the Neural Turing Machine,[21] which is able to read symbols on a tape and store symbols in memory. Relational Networks, another deep network module published by DeepMind, are able to create object-like representations and manipulate them to answer complex questions. Relational Networks and Neural Turing Machines are further evidence that connectionism and computationalism need not be at odds.

Notes

- Garson, James (27 November 2018). Zalta, Edward N. (ed.). The Stanford Encyclopedia of Philosophy. Metaphysics Research Lab, Stanford University – via Stanford Encyclopedia of Philosophy.

- Smolensky, Paul (1999). "Grammar-based Connectionist Approaches to Language" (PDF). Cognitive Science. 23 (4): 589–613. doi:10.1207/s15516709cog2304_9.

- Marcus, Gary F. (2001). The Algebraic Mind: Integrating Connectionism and Cognitive Science (Learning, Development, and Conceptual Change). Cambridge, Massachusetts: MIT Press. pp. 27–28. ISBN 978-0262632683.

- "Encephalos Journal". www.encephalos.gr. Retrieved 2018-02-20.

- Wilson, Elizabeth A. (2016-02-04). Neural Geographies: Feminism and the Microstructure of Cognition. Routledge. ISBN 9781317958765.

- "Organismically-inspired robotics: homeostatic adaptation and teleology beyond the closed sensorimotor loop". S2CID 15349751.

- Zorzi, Marco; Testolin, Alberto; Stoianov, Ivilin P. (2013-08-20). "Modeling language and cognition with deep unsupervised learning: a tutorial overview". Frontiers in Psychology. 4: 515. doi:10.3389/fpsyg.2013.00515. ISSN 1664-1078. PMC 3747356. PMID 23970869.

- "ANALYTIC AND CONTINENTAL PHILOSOPHY".

- Browne, A. (1997-01-01). Neural Network Perspectives on Cognition and Adaptive Robotics. CRC Press. ISBN 9780750304559.

- Pfeifer, R.; Schreter, Z.; Fogelman-Soulié, F.; Steels, L. (1989-08-23). Connectionism in Perspective. Elsevier. ISBN 9780444598769.

- Crick, Francis (January 1989). "The recent excitement about neural networks". Nature. 337 (6203): 129–132. Bibcode:1989Natur.337..129C. doi:10.1038/337129a0. ISSN 1476-4687. PMID 2911347.

- Rumelhart, David E.; Hinton, Geoffrey E.; Williams, Ronald J. (October 1986). "Learning representations by back-propagating errors". Nature. 323 (6088): 533–536. Bibcode:1986Natur.323..533R. doi:10.1038/323533a0. ISSN 1476-4687.

- Fitz, Hartmut; Chang, Franklin (2019-06-01). "Language ERPs reflect learning through prediction error propagation". Cognitive Psychology. 111: 15–52. doi:10.1016/j.cogpsych.2019.03.002. hdl:21.11116/0000-0003-474F-6. ISSN 0010-0285. PMID 30921626.

- Novo, María-Luisa; Alsina, Ángel; Marbán, José-María; Berciano, Ainhoa (2017). "Connective Intelligence for Childhood Mathematics Education". Comunicar (in Spanish). 25 (52): 29–39. doi:10.3916/c52-2017-03. ISSN 1134-3478.

- Hornik, K.; Stinchcombe, M.; White, H. (1989). "Multilayer feedforward networks are universal approximators". Neural Networks. 2 (5): 359. doi:10.1016/0893-6080(89)90020-8.

- Anderson, James A.; Rosenfeld, Edward (1989). "Chapter 1: (1890) William James Psychology (Brief Course)". Neurocomputing: Foundations of Research. A Bradford Book. p. 1. ISBN 978-0262510486.

- Chang, Franklin (2002). "Symbolically speaking: a connectionist model of sentence production". Cognitive Science. 26 (5): 609–651. doi:10.1207/s15516709cog2605_3. ISSN 1551-6709.

- Smolensky, Paul (1990). "Tensor Product Variable Binding and the Representation of Symbolic Structures in Connectionist Systems" (PDF). Artificial Intelligence. 46 (1–2): 159–216. doi:10.1016/0004-3702(90)90007-M.

- Shastri, Lokendra; Ajjanagadde, Venkat (September 1993). "From simple associations to systematic reasoning: A connectionist representation of rules, variables and dynamic bindings using temporal synchrony". Behavioral and Brain Sciences. 16 (3): 417–451. doi:10.1017/S0140525X00030910. ISSN 1469-1825.

- Ellis, Nick C. (1998). "Emergentism, Connectionism and Language Learning" (PDF). Language Learning. 48 (4): 631–664. doi:10.1111/0023-8333.00063.

- Graves, Alex (2014). "Neural Turing Machines". arXiv:1410.5401 [cs.NE].

References

- Rumelhart, D.E., J.L. McClelland and the PDP Research Group (1986). Parallel Distributed Processing: Explorations in the Microstructure of Cognition. Volume 1: Foundations, Cambridge, Massachusetts: MIT Press, ISBN 978-0262680530

- McClelland, J.L., D.E. Rumelhart and the PDP Research Group (1986). Parallel Distributed Processing: Explorations in the Microstructure of Cognition. Volume 2: Psychological and Biological Models, Cambridge, Massachusetts: MIT Press, ISBN 978-0262631105

- Pinker, Steven and Mehler, Jacques (1988). Connections and Symbols, Cambridge MA: MIT Press, ISBN 978-0262660648

- Jeffrey L. Elman, Elizabeth A. Bates, Mark H. Johnson, Annette Karmiloff-Smith, Domenico Parisi, Kim Plunkett (1996). Rethinking Innateness: A connectionist perspective on development, Cambridge MA: MIT Press, ISBN 978-0262550307

- Marcus, Gary F. (2001). The Algebraic Mind: Integrating Connectionism and Cognitive Science (Learning, Development, and Conceptual Change), Cambridge, Massachusetts: MIT Press, ISBN 978-0262632683

- David A. Medler (1998). "A Brief History of Connectionism" (PDF). Neural Computing Surveys. 1: 61–101.