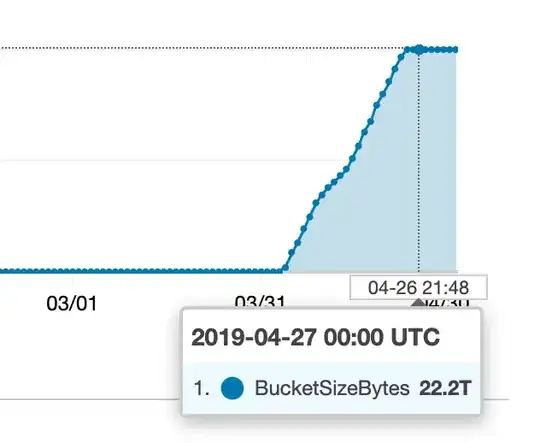

Something happened recently with one of our S3 buckets:

I started looking for where all this extra stuff was coming from, but the metrics I gathered don't seem to match what is going on in CloudWatch (or our bill).

The bucket has a handful of different key prefixes ('folders'), so the first thing I did was to try and work out if any of them was contributing significantly to this number, like so:

aws s3 ls --summarize --human-readable --recursive s3://my-bucket/prefix

However none of the prefixes seemed to contain a huge amount of data, nothing more than a few GB.

I finally tried running

aws s3 ls --summarize --human-readable --recursive s3://my-bucket

...and I got a total size of ~25GB. Am I doing the wrong thing to try and find the 'size of a folder', or misunderstanding something? How can I find where all this extra storage is being used (and find out what process is running amok)?