I am trying to deploy InfluxDB on Kubernetes using the official InfluxDB Helm chart.

I am deploying this Helm chart with the following values file:

    persistence:
      enabled: true
      size: 5Gi
    ingress:
      enabled: true
      # tls: true
      hostname: influxdb.slackdog.space
      annotations:
        kubernetes.io/ingress.class: "gce"
        external-dns.alpha.kubernetes.io/hostname: influxdb.slackdog.space.
    service:
      type: NodePort

When I deploy the Helm chart, one of the backend services stays in an UNHEALTHY state. I have left the deployment running for days with no change in status.

Here is the Ingress in question:

    Name:             slack-dog-influxdb-influxdb
    Namespace:        slack-dog-influxdb
    Address:          xx.xxx.xxx.xx  # Note: external IP
    Default backend:  default-http-backend:80 (xx.xx.x.xx:8080)  # Note internal cluster IP
    Rules:
      Host                     Path  Backends
      ----                     ----  --------
      influxdb.slackdog.space
                               /     slack-dog-influxdb-influxdb:8086 (<none>)
    Annotations:
      backends:         {"k8s-be-30983--01721a5ee78653ec":"HEALTHY","k8s-be-32128--01721a5ee78653ec":"UNHEALTHY"}
      forwarding-rule:  k8s-fw-slack-dog-influxdb-slack-dog-influxdb-influxdb--01721a50
      target-proxy:     k8s-tp-slack-dog-influxdb-slack-dog-influxdb-influxdb--01721a50
      url-map:          k8s-um-slack-dog-influxdb-slack-dog-influxdb-influxdb--01721a50
    Events:
      Type    Reason  Age  From                     Message
      ----    ------  ---  ----                     -------
      Normal  ADD     35m  loadbalancer-controller  slack-dog-influxdb/slack-dog-influxdb-influxdb
      Normal  CREATE  35m  loadbalancer-controller  ip: xx.xxx.xxx.xx  # Note: external IP

I opened a bash shell in the deployed InfluxDB pod and successfully requested the localhost:8086/ping endpoint, which the deployment uses for its health checks.

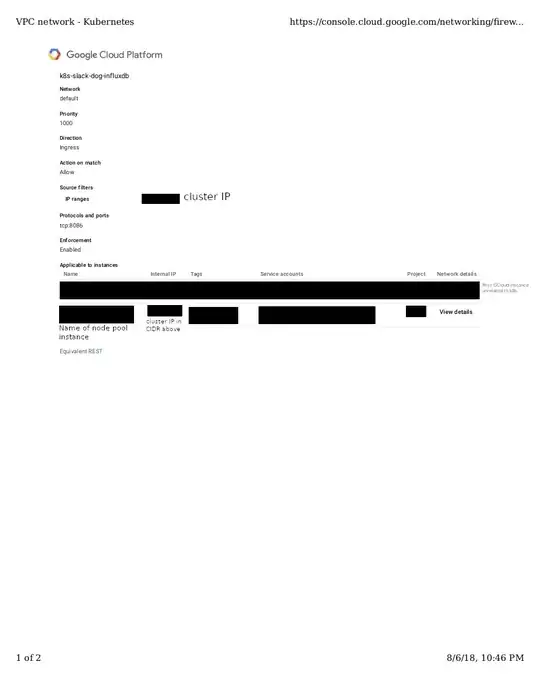
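
For anyone who wants to reproduce that check, here is a minimal Python sketch of the same kind of probe. `probe_status` is a hypothetical helper name, not part of InfluxDB or the Helm chart, and the sketch assumes it is run somewhere that can reach the server (for example inside the pod, where port 8086 is on localhost). It returns the raw HTTP status code rather than a pass/fail result, so the exact response the endpoint gives is visible.

```python
import http.client


def probe_status(host: str, port: int, path: str = "/ping", timeout: float = 5.0) -> int:
    """Send a GET request for `path` to host:port and return the HTTP status code.

    Connection errors (refused, timed out) are not caught and will propagate.
    """
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", path)
        return conn.getresponse().status
    finally:
        conn.close()


# Example call (assumes the server is reachable on localhost:8086):
#   print(probe_status("localhost", 8086))
```

Calling it with a different `path`, such as `/`, shows what a check against that path would receive from the same server.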

I tried adding a firewall rule to allow traffic in on port 8086, but this seems to have no effect.

What could be causing this behavior? Is there any manual tweaking I need to perform in GCP to get things working?