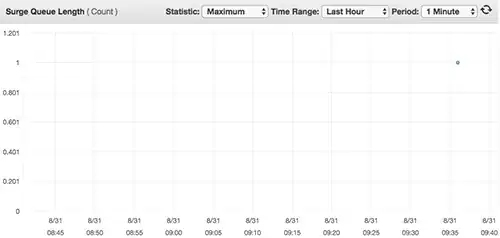

A problem: It seems like every single request to our application ends up in ELB surge queue

Example of a surge queue chart:

We have a Classic ELB on AWS with multiple EC2 instances behind it. The ELB listeners are set up as follows:

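| LB Protocol | LB Port | Instance Protocol | Instance Port | Cipher | SSL Certificate |
|-------------|---------|-------------------|---------------|--------|-----------------|
| TCP         | 80      | TCP               | 80            | N/A    | N/A             |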

On each EC2 instance we run an nginx server with the following nginx.conf:

    user nginx;
    worker_processes 3;
    pid /var/run/nginx.pid;
    worker_rlimit_nofile 8192;
    worker_rlimit_sigpending 32768;

    events {
        worker_connections 2048;
        multi_accept on;
        use epoll;
        accept_mutex off;
    }

    http {
        sendfile on;
        tcp_nopush on;
        tcp_nodelay on;
        keepalive_timeout 65;
        types_hash_max_size 2048;
        map_hash_bucket_size 128;
        server_tokens off;
        client_max_body_size 0;
        server_names_hash_bucket_size 256;

        include /etc/nginx/mime.types;
        default_type application/octet-stream;

        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for" $request_time';

        access_log /app/log/nginx/access.log main;
        error_log /app/log/nginx/error.log;

        gzip on;
        gzip_disable "msie6";
        gzip_vary on;
        gzip_comp_level 4;
        gzip_buffers 16 8k;
        gzip_http_version 1.1;
        gzip_types text/plain text/css application/json application/x-javascript text/xml application/xml application/xml+rss text/javascript;

        client_body_temp_path /app/tmp/nginx;

        include /etc/nginx/sites-enabled/*;

        upstream tomcat {
            server localhost:8080;
        }

        upstream httpd {
            server localhost:9000;
        }

        upstream play {
            server localhost:9000;
        }
    }

and the vhost config, sites.conf:

    log_format proxylog '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$proxy_protocol_addr" $request_time';

    server {
        server_name www.my-site.com;
        rewrite ^(.*) http://my-site.com$1 permanent;
    }

    server {
        listen 80 proxy_protocol;
        listen 443 ssl proxy_protocol;

        ssl_certificate /etc/nginx/my-certificate.crt;
        ssl_certificate_key /etc/nginx/my-key.key;
        ssl_protocols TLSv1 TLSv1.1 TLSv1.2;
        ssl_ciphers "SECRET";
        ssl_prefer_server_ciphers on;

        set_real_ip_from 10.0.0.0/8;
        root /app/websites/my-site.com/httpdocs;
        index index.html index.htm;
        real_ip_header proxy_protocol;
        server_name my-site.com;

        access_log /app/log/nginx/my-site.com.access.log proxylog buffer=16k flush=2s;
        error_log /app/log/nginx/my-site.com.error.log;

        charset utf-8;

        location /foo {
            proxy_pass http://play;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
        }

        location /bar {
            add_header Access-Control-Allow-Origin "*" always;
            add_header Access-Control-Allow-Methods "GET,POST,OPTIONS,DELETE,PUT" always;
            add_header 'Access-Control-Allow-Headers' 'Origin, X-Requested-With, Content-Type, Accept, User-Agent, Authorization, Referer, Timestamp' always;
            add_header Access-Control-Allow-Credentials true always;

            proxy_pass http://play;
            proxy_http_version 1.1;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
        }

        location / {
            add_header Access-Control-Allow-Origin "*" always;
            add_header Access-Control-Allow-Methods "GET,POST,OPTIONS,DELETE,PUT" always;
            add_header 'Access-Control-Allow-Headers' 'Origin, X-Requested-With, Content-Type, Accept, User-Agent, Authorization, Referer, Timestamp' always;
            add_header Access-Control-Allow-Credentials true always;

            real_ip_header proxy_protocol;
            set_real_ip_from 10.0.0.0/8;

            proxy_read_timeout 90s;
            proxy_set_header X-Real-IP $proxy_protocol_addr;
            proxy_set_header X-Forwarded-For $proxy_protocol_addr;
            proxy_set_header X-Forwarded-Host $host;
            proxy_set_header X-Forwarded-Server $host;
            proxy_pass http://play;
            proxy_set_header Host $http_host;
        }

        location ~ ^/(images|css|js|html) {
            root /app/websites/my-site.com/httpdocs;
        }

        error_page 404 /404.html;
        error_page 500 502 503 504 /50x.html;

        location = /50x.html {
            root html;
        }
    }

I've narrowed the suspects down to the ELB and nginx, rather than the most obvious candidate, the web server that actually processes the requests. In one of my tests I removed the Java application completely and replaced it with a dummy node.js server that replied 'hello world' to every request, and all of those requests were still logged in the surge queue.
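A dummy backend like the one in that test takes only a few lines. The sketch below is a Python stand-in for the node.js server, whose original script is not shown here. The names `HelloHandler` and `make_server` are made up for illustration, and port 9000 is assumed from the `play` upstream in nginx.conf. It only answers GET requests.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answer every GET request with the same plain-text body."""

    def do_GET(self):
        body = b"hello world"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Stay quiet: nginx in front of this server already keeps an access log.
        pass


def make_server(port=9000):
    # Port 9000 is an assumption, taken from the `play` upstream above.
    # Binding to 127.0.0.1 keeps the server reachable only through nginx.
    return ThreadingHTTPServer(("127.0.0.1", port), HelloHandler)
```

Calling `make_server().serve_forever()` on an instance puts this server behind the `play` upstream. Because it does no real work, any queueing still seen at the ELB is unlikely to come from slow request processing in the application.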

I also tried adjusting worker_processes and keepalive_timeout to see whether that affects anything, and it doesn't.

What bothers me is not performance: a surge queue of 1 doesn't affect the service, since requests seem to stay there for only fractions of a second. What I don't understand is why even a single request ends up passing through the surge queue.
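For anyone who wants to check the same numbers outside the console, the surge queue is exposed as the CloudWatch metric `SurgeQueueLength` in the `AWS/ELB` namespace. The following is a minimal sketch, assuming boto3 is installed and AWS credentials are configured. The helper names and the load balancer name `my-elb` are hypothetical. It asks for the `Maximum` statistic, which is the peak queue depth within each period.

```python
from datetime import datetime, timedelta, timezone


def surge_queue_query(lb_name, minutes=60, now=None):
    """Build get_metric_statistics arguments for a Classic ELB's surge queue."""
    end = now or datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/ELB",
        "MetricName": "SurgeQueueLength",
        "Dimensions": [{"Name": "LoadBalancerName", "Value": lb_name}],
        "StartTime": end - timedelta(minutes=minutes),
        "EndTime": end,
        "Period": 60,               # one datapoint per minute
        "Statistics": ["Maximum"],  # peak queue depth within each period
    }


def peak_queue_length(datapoints):
    """Largest per-period maximum, or 0 when no datapoints were returned."""
    return max((p["Maximum"] for p in datapoints), default=0)


def fetch_surge_queue(lb_name, minutes=60):
    # boto3 is imported lazily so the helpers above work without it installed.
    import boto3

    cloudwatch = boto3.client("cloudwatch")
    response = cloudwatch.get_metric_statistics(**surge_queue_query(lb_name, minutes))
    return sorted(response["Datapoints"], key=lambda p: p["Timestamp"])
```

With credentials in place, `peak_queue_length(fetch_surge_queue("my-elb"))` returns the highest queue depth seen in the last hour, which can be compared against the chart.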