I think I've closely followed the documentation and tutorials I've found so far, but I still can't get this to work. I just can't convince AWS not to touch the binary data I'm posting in the body.

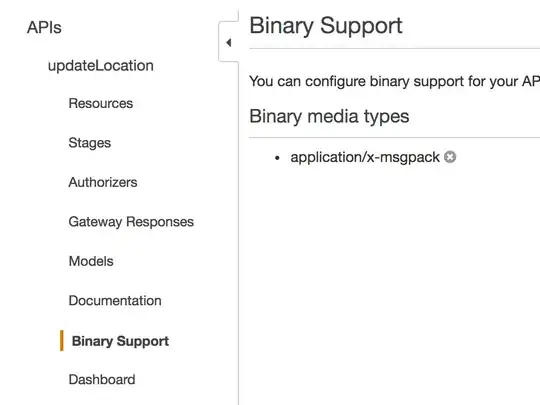

In my app, I'm setting both the Content-Type and Accept headers of the original API request to application/x-msgpack, which I have defined as a binary media type under Binary Support:

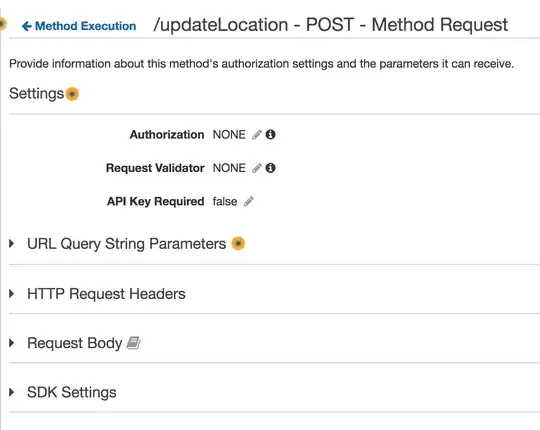
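A minimal sketch of a client request carrying those two headers, assuming a Python client that uses only the standard library. The URL and the payload bytes are hypothetical placeholders, and the request is only constructed here, never sent:

```python
import urllib.request

MSGPACK = "application/x-msgpack"


def build_request(url: str, body: bytes) -> urllib.request.Request:
    """Build (but do not send) a POST carrying a raw binary body."""
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": MSGPACK, "Accept": MSGPACK},
    )


# Hypothetical endpoint and a hand-encoded MessagePack map {"a": 1}.
req = build_request("https://example.invalid/resource", b"\x81\xa1a\x01")

# urllib stores header names capitalized as "Content-type".
print(req.get_method(), req.get_header("Content-type"), len(req.data))
```

The body is passed as `bytes`, so nothing on the client side should be re-encoding it before it reaches API Gateway.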

I haven't set anything in the Method Request:

In the Integration Request, I haven't enabled the proxy and I've enabled request body passthrough:

I've enabled CloudWatch logs for API Gateway execution, and I can see that AWS is still Base64-encoding my binary data:

    17:30:29 Starting execution for request: ...
    17:30:29 HTTP Method: POST, Resource Path: /...
    17:30:29 Method request path: {}
    17:30:29 Method request query string: {}
    17:30:29 Method request headers: {
        Accept=application/x-msgpack,
        Content-Type=application/x-msgpack,
        ...
    }
    17:30:29 Method request body before transformations: [Binary Data]
    17:30:29 Endpoint request URI: https://...
    17:30:29 Endpoint request headers: {
        Accept=application/x-msgpack,
        ...
        [TRUNCATED - I don't see the rest of the headers]
    }
    17:30:29 Endpoint request body after transformations: [Base-64 encoded binary data]
    17:30:29 Sending request to https://...

Note that the endpoint request headers have been truncated in the CloudWatch logs (I haven't truncated them myself for this question). I therefore don't see what the Content-Type header is.

Note the lines with "Method request body before transformations" and "Endpoint request body after transformations". Why is it still transforming the binary data to Base64?
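To make the logged transformation concrete: according to the payload-encoding documentation, `CONVERT_TO_TEXT` turns a binary payload into a Base64-encoded string, and `CONVERT_TO_BINARY` does the reverse. The sketch below only imitates those two steps locally in Python. `convert_to_text` and `convert_to_binary` are hypothetical helper names, not API Gateway functions, and the payload is a made-up example:

```python
import base64


def convert_to_text(body: bytes) -> str:
    """Imitate CONVERT_TO_TEXT: binary in, Base64 text out."""
    return base64.b64encode(body).decode("ascii")


def convert_to_binary(text: str) -> bytes:
    """Imitate CONVERT_TO_BINARY: Base64 text in, binary out."""
    return base64.b64decode(text, validate=True)


payload = b"\x81\xa1a\x01"  # made-up MessagePack bytes
encoded = convert_to_text(payload)

# The round trip is lossless, so a receiver could undo the encoding.
assert convert_to_binary(encoded) == payload
print(encoded)
```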

Sources I've used so far are:

- http://docs.aws.amazon.com/apigateway/latest/developerguide/api-gateway-payload-encodings.html

- https://aws.amazon.com/blogs/compute/binary-support-for-api-integrations-with-amazon-api-gateway/

- http://docs.aws.amazon.com/apigateway/latest/developerguide/api-gateway-payload-encodings-workflow.html

- http://docs.aws.amazon.com/apigateway/api-reference/resource/integration/#contentHandling

Update

I've checked the integration setting via AWS CLI and got this:

    > aws apigateway get-integration \
        --rest-api-id ... \
        --resource-id ... \
        --http-method POST
    {
        "integrationResponses": {
            "200": {
                "selectionPattern": "",
                "statusCode": "200"
            }
        },
        "contentHandling": "CONVERT_TO_TEXT",
        "cacheKeyParameters": [],
        "uri": "...",
        "httpMethod": "POST",
        "passthroughBehavior": "WHEN_NO_TEMPLATES",
        "cacheNamespace": "...",
        "type": "AWS"
    }

I noticed the "contentHandling": "CONVERT_TO_TEXT" bit and I've tried overriding it to both "" (empty value, which in turn removed the property altogether) and "CONVERT_TO_BINARY" by doing:

    > aws apigateway update-integration \
        --rest-api-id ... \
        --resource-id ... \
        --http-method POST \
        --patch-operations '[{"op":"replace","path":"/contentHandling","value":""}]'
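The two attempts differ only in the `value` field of the patch operation. A minimal Python sketch that generates the `--patch-operations` argument for each; `content_handling_patch` is a hypothetical helper, and the allowed set contains only the values mentioned in this question:

```python
import json


def content_handling_patch(value: str) -> list[dict]:
    """Return a patch-operations list that replaces /contentHandling."""
    allowed = {"", "CONVERT_TO_TEXT", "CONVERT_TO_BINARY"}
    if value not in allowed:
        raise ValueError(f"unexpected contentHandling value: {value!r}")
    return [{"op": "replace", "path": "/contentHandling", "value": value}]


# Print the compact JSON string to pass to --patch-operations.
for value in ("", "CONVERT_TO_BINARY"):
    print(json.dumps(content_handling_patch(value), separators=(",", ":")))
```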

I now see the endpoint request being preserved as binary:

    10:32:21 Endpoint request body after transformations: [Binary Data]

However, I get this error:

    10:32:21 Endpoint response body before transformations: {"Type":"User","message":"Could not parse request body into json: Unexpected character ((CTRL-CHAR, code 129))...

I don't see any activity in the CloudWatch logs for my Lambda function, and my function isn't the one trying to parse the incoming data as JSON. So somewhere along the API Gateway-to-Lambda integration path, the data is still being parsed as JSON instead of being left alone as binary.
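A note on the character in the error: code 129 is 0x81, which in MessagePack is the header byte of a one-entry map. If the payload starts with such a map, the error is consistent with the raw bytes reaching a JSON parser unchanged. The sketch below reproduces that kind of failure locally with Python's `json` module. The payload is a made-up example, and this says nothing certain about which AWS component does the parsing:

```python
import json

payload = b"\x81\xa1a\x01"  # hand-encoded MessagePack for {"a": 1} (made up)


def try_parse_json(body: bytes) -> str:
    """Report whether a body parses as JSON, without raising."""
    try:
        json.loads(body)
    except ValueError as exc:  # covers JSONDecodeError and UnicodeDecodeError
        return f"not JSON: {type(exc).__name__}"
    return "valid JSON"


print(payload[0])                   # first byte of the payload: 129
print(try_parse_json(payload))      # raw MessagePack is rejected
print(try_parse_json(b'{"a": 1}'))  # the same data as JSON is accepted
```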