I'm trying to achieve 4 Gbps throughput between my computer and my Synology NAS. Unfortunately, I am only getting 1 Gbps between these systems. My setup is below:

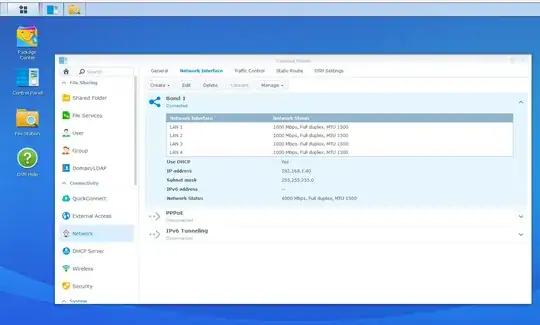

Synology DS1515+ with four NICs bonded:

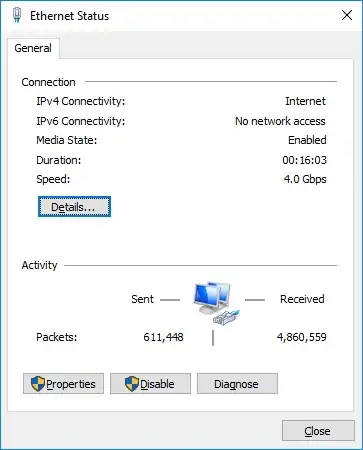

Windows 10 Enterprise system with a 4-port Intel I350-T4 NIC running Intel's 22.1 drivers (downloaded from here: https://downloadcenter.intel.com/download/25016/Intel-Network-Adapter-Driver-for-Windows-10?product=59063).

Dell PowerConnect 5324 switch using LACP with two LAGs, one for the Synology and the other for the PC:

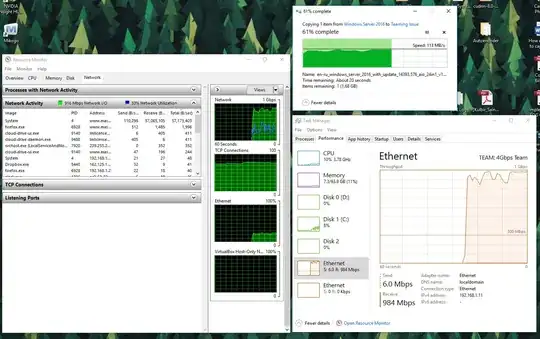

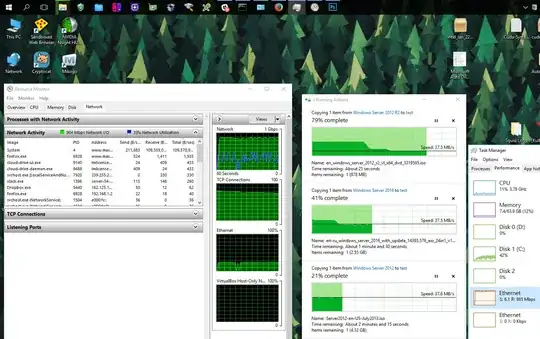

I tested the setup by sending a large file (4.5 GB) from the Synology to the PC, and also from the PC to the Synology. I monitored network utilization on the PC during the transfer.

The maximum throughput shown in Task Manager and Resource Monitor is 1 Gbps rather than 4 Gbps.
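For reference, here is a rough sketch of the transfer times I would expect for the test file at each speed. It assumes 4.5 GB means 4.5 × 10^9 bytes and ignores protocol overhead and disk speed, so real numbers would be somewhat worse. The function name `transfer_seconds` is just for illustration.

```python
def transfer_seconds(size_bytes: float, link_bps: float) -> float:
    """Ideal transfer time, ignoring protocol overhead and disk limits."""
    return size_bytes * 8 / link_bps


# Assumption: the 4.5 GB test file is taken as decimal gigabytes.
FILE_SIZE_BYTES = 4.5e9

for gbps in (1, 4):
    seconds = transfer_seconds(FILE_SIZE_BYTES, gbps * 1e9)
    print(f"{gbps} Gbps: {seconds:.0f} s")
# prints "1 Gbps: 36 s" and "4 Gbps: 9 s"
```

So the same file should take roughly 36 seconds at 1 Gbps but only about 9 seconds if all four links were actually in use.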

How can I use the full 4 Gbps?

NOTE: Throughput is still capped at 1 Gbps even when transferring more than one file at a time.