What is Split-Brain?

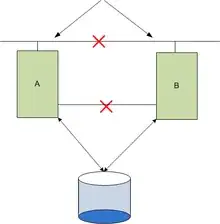

According to Red Hat's official documentation on managing split-brain, split-brain is a state of data or availability inconsistency that originates from maintaining two separate data sets with overlapping scope. It arises either from the servers' network design or from a failure condition in which the servers stop communicating and synchronizing their data with each other. The term applies to replicated configurations.

Note the phrase "a failure condition based on servers not communicating and synchronizing their data to each other". The servers can stop synchronizing for any number of reasons, and this does not necessarily mean your nodes have lost their connection: the peer may still be in the cluster and connected.

Split-Brain Types

There are three types of split-brain, and as far as I can see, yours is an entry split-brain. The three types are:

Data split-brain: The contents of the file differ between the bricks of the replica pair, and automatic healing is not possible.

Metadata split-brain: The metadata of the file (for example, a user-defined extended attribute) differs between the bricks, and automatic healing is not possible.

Entry split-brain: A file has a different GFID on each brick of the replica pair.

What is a GFID?

The GlusterFS internal file identifier (GFID) is a UUID that is unique to each file across the entire cluster, analogous to an inode number in a normal filesystem. A file's GFID is stored in its extended attribute (xattr) named trusted.gfid. To find a file's path from its GFID, I highly recommend reading the official GlusterFS article on that topic.
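To make the link between a GFID and the .glusterfs directory concrete, here is a small bash sketch. It assumes you read the GFID with getfattr (from the attr package), which prints it as 0x followed by 32 hex digits, and that gfid-links follow the usual layout .glusterfs/<first two hex digits>/<next two hex digits>/<GFID in UUID form>. The function names and the /bricks/brick1 path are hypothetical, not part of GlusterFS.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: convert a GFID as printed by
# `getfattr -n trusted.gfid -e hex FILE` (0x + 32 hex digits)
# into UUID form and into its gfid-link path on a brick.

gfid_hex_to_uuid() {
  local hex=${1,,}            # normalize to lowercase (bash 4+)
  hex=${hex#0x}               # drop the 0x prefix that getfattr adds
  if [[ ! $hex =~ ^[0-9a-f]{32}$ ]]; then
    echo "not a 16-byte hex GFID: $1" >&2
    return 1
  fi
  # Standard 8-4-4-4-12 UUID grouping
  printf '%s-%s-%s-%s-%s\n' "${hex:0:8}" "${hex:8:4}" "${hex:12:4}" \
    "${hex:16:4}" "${hex:20:12}"
}

gfid_link_path() {
  local brick=${1%/} uuid
  uuid=$(gfid_hex_to_uuid "$2") || return 1
  # <brick>/.glusterfs/<hex chars 1-2>/<hex chars 3-4>/<uuid>
  printf '%s/.glusterfs/%s/%s/%s\n' "$brick" "${uuid:0:2}" "${uuid:2:2}" "$uuid"
}

gfid_of() {
  # Print the hex GFID of a file, read directly on the brick server
  getfattr -n trusted.gfid -e hex "$1" 2>/dev/null |
    sed -n 's/^trusted\.gfid=//p'
}
```

For example, on the brick server, `gfid_link_path /bricks/brick1 "$(gfid_of /bricks/brick1/dir/file)"` prints the location of that file's gfid-link. A GFID of 0x0123456789abcdef0123456789abcdef maps to /bricks/brick1/.glusterfs/01/23/01234567-89ab-cdef-0123-456789abcdef.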

How to resolve entry split-brain?

There are several ways to prevent split-brain from occurring, but to resolve an entry split-brain, the corresponding gfid-link files must be removed. The gfid-link files live in the .glusterfs directory at the top level of the brick. Before deleting the gfid-links, make sure there are no other hard links to the files on that brick; if hard links exist, you must delete them as well. Then you can start the self-healing process with the commands below.
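Before removing anything, you can check for extra hard links with a sketch like the one below. It relies on find's -samefile test (supported by GNU find; check yours on other platforms) to list every path on one brick that shares the file's inode. For a regular file on a brick, that is normally the file itself plus its gfid-link under .glusterfs, so anything beyond those is an extra hard link. The function names are hypothetical, nothing is deleted, and paths containing newlines would be miscounted.

```bash
#!/usr/bin/env bash
# Hypothetical dry-run helpers: inspect hard links on ONE brick before
# removing a gfid-link. Nothing here deletes files.

# Print every path on the brick that is a hard link to FILE's inode.
list_inode_links() {
  local brick=${1%/} file=$2
  if [ ! -f "$file" ]; then
    echo "not a regular file: $file" >&2
    return 1
  fi
  # -xdev: stay on the brick's filesystem; -samefile: match by inode
  find "$brick" -xdev -samefile "$file" -print
}

# Print how many hard links exist besides FILE itself and its
# gfid-link(s) under <brick>/.glusterfs.
count_extra_links() {
  local brick=${1%/} file=$2 total in_glusterfs
  if [ ! -f "$file" ]; then
    echo "not a regular file: $file" >&2
    return 1
  fi
  total=$(find "$brick" -xdev -samefile "$file" | wc -l)
  in_glusterfs=$(find "$brick/.glusterfs" -xdev -samefile "$file" 2>/dev/null | wc -l)
  echo $(( total - in_glusterfs - 1 ))
}
```

Run it on the brick server, e.g. `count_extra_links /bricks/brick1 /bricks/brick1/dir/file` (hypothetical paths). A non-zero result means there are extra hard links, which `list_inode_links` shows so you can delete them along with the gfid-link.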

To list the files on a volume that are in a split-brain state, use:

# gluster volume heal VOLNAME info split-brain

Also be aware that in replicated volumes, when a brick goes offline and comes back online, self-healing is required to resync all the replicas.

To check the healing status of volumes and files, use:

# gluster volume heal VOLNAME info

Since you are using version 3.5, split-brain entries will not be healed automatically. So after completing the steps above, you need to trigger self-healing yourself:

To heal only the files that require healing:

# gluster volume heal VOLNAME

To heal all files:

# gluster volume heal VOLNAME full
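If you want to script this last step, the following bash sketch triggers a heal and then polls heal info until nothing is pending. It assumes that `gluster volume heal VOLNAME info` prints a `Number of entries: N` line for each brick, which is worth confirming against your GlusterFS version's output before relying on it. The function name, retry count, and interval are arbitrary choices, not GlusterFS settings.

```bash
#!/usr/bin/env bash
# Hypothetical helper: trigger self-heal on a volume, then poll
# `gluster volume heal VOLNAME info` until no entries are pending.
# Usage: wait_for_heal VOLNAME [full] [max_tries] [interval_seconds]
wait_for_heal() {
  local vol=$1 mode=${2:-} tries=${3:-60} interval=${4:-10} pending i

  if [ "$mode" = full ]; then
    gluster volume heal "$vol" full || return 1   # crawl all files
  else
    gluster volume heal "$vol" || return 1        # only files needing heal
  fi

  for (( i = 0; i < tries; i++ )); do
    # Sum the per-brick "Number of entries: N" lines (format assumed);
    # non-numeric values such as "-" count as 0. pipefail makes a
    # failing gluster call abort instead of reading as "0 pending".
    pending=$(set -o pipefail
      gluster volume heal "$vol" info |
        awk -F': *' '/^Number of entries/ { n += $2 } END { print n + 0 }') ||
      return 1
    echo "pending heal entries on $vol: $pending"
    [ "$pending" -eq 0 ] && return 0
    sleep "$interval"
  done
  echo "entries still pending after $tries checks" >&2
  return 1
}
```

A non-zero exit after all retries usually means some entries need manual attention. For example, files still in split-brain may keep appearing in heal info.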

I hope this helps you fix your problem. Please read the official docs for further information. Cheers.