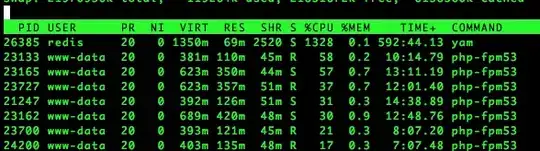

Recently, we have been noticing CPU spikes on our production environment caused by redis which can be seen below:

As a workaround, I have been restarting the Redis server about twice a day, which is obviously far from ideal :(. I'd like to identify the root cause.

Here are some things I have looked into so far:

1) Looked for anomalies in the Redis log file. The following seems suspicious:

2) Checked the nginx access logs for unusually high traffic. There isn't any.

3) New Relic shows that the issue started on Nov 21st '16 (about a month ago), but no code was released around that time.
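
To make check 2 repeatable and easy to line up against the New Relic CPU graph, here is a minimal Python sketch that counts access-log requests per minute and lists the busiest minutes. It assumes nginx's default `combined` log format (timestamps like `[21/Nov/2016:16:02:12 +0000]`) and that the log lives at `/var/log/nginx/access.log`; both are assumptions, and the function names are hypothetical.

```python
import re
import sys
from collections import Counter
from typing import Iterable, List, Tuple

# Matches the $time_local field of nginx's default "combined" format
# (assumed), e.g. [21/Nov/2016:16:02:12 +0000]. Group 1 keeps only
# day/month/year:hour:minute so requests are bucketed per minute.
TIME_LOCAL = re.compile(r"\[(\d{2}/\w{3}/\d{4}:\d{2}:\d{2}):\d{2} [^\]]+\]")


def requests_per_minute(lines: Iterable[str]) -> Counter:
    """Count log entries per minute; lines without a timestamp are skipped."""
    counts: Counter = Counter()
    for line in lines:
        match = TIME_LOCAL.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def busiest_minutes(counts: Counter, top: int = 5) -> List[Tuple[str, int]]:
    """Return the `top` minutes with the most requests, busiest first."""
    return counts.most_common(top)


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python traffic.py /var/log/nginx/access.log
    with open(sys.argv[1], encoding="utf-8", errors="replace") as log:
        for minute, hits in busiest_minutes(requests_per_minute(log)):
            print(f"{minute}  {hits}")
```

If the busiest minutes don't coincide with the CPU spikes, that supports the conclusion that traffic isn't the trigger.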

Here are some details about our setup:

Redis server: Redis server v=2.8.17 sha=00000000:0 malloc=jemalloc-3.6.0 bits=64 build=64a9cf396cbcc4c7

PHP: 5.3.27 with PHP-FPM

Redis configuration:

    daemonize yes
    pidfile /var/run/redis/redis.pid
    port 6379
    timeout 0
    tcp-keepalive 0
    loglevel notice
    logfile /var/log/redis/redis.log
    syslog-enabled yes
    databases 16
    save 900 1
    save 300 10
    save 60 10000
    stop-writes-on-bgsave-error no
    rdbcompression yes
    rdbchecksum yes
    dbfilename redis.rdb
    dir /var/lib/redis/
    slave-serve-stale-data yes
    slave-read-only yes
    repl-disable-tcp-nodelay no
    slave-priority 100
    maxmemory 15GB
    appendonly no
    appendfsync everysec
    no-appendfsync-on-rewrite no
    auto-aof-rewrite-percentage 100
    auto-aof-rewrite-min-size 64mb
    lua-time-limit 5000
    slowlog-max-len 128
    notify-keyspace-events ""
    hash-max-ziplist-entries 512
    hash-max-ziplist-value 64
    list-max-ziplist-entries 512
    list-max-ziplist-value 64
    set-max-intset-entries 512
    zset-max-ziplist-entries 128
    zset-max-ziplist-value 64
    activerehashing yes
    client-output-buffer-limit normal 0 0 0
    client-output-buffer-limit slave 256mb 64mb 60
    client-output-buffer-limit pubsub 32mb 8mb 60
    hz 10
    aof-rewrite-incremental-fsync yes
    include /etc/redis/conf.d/local.conf
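
For reference when reading the three `save` lines in the configuration above, here is a rough Python sketch of how they combine: Redis forks a background save (BGSAVE) once any single rule has seen at least `<changes>` writes and more than `<seconds>` have elapsed since the last save. This only approximates the check Redis performs in its periodic `serverCron` task (it ignores, for example, the retry delay after a failed save and the `hz` tick granularity), and the helper names are hypothetical.

```python
from typing import List, Optional, Tuple

# (seconds, changes) pairs copied from the "save" lines in redis.conf above.
SAVE_RULES: List[Tuple[int, int]] = [(900, 1), (300, 10), (60, 10000)]


def bgsave_due(changes_since_save: int, seconds_since_save: int,
               rules: List[Tuple[int, int]] = SAVE_RULES) -> bool:
    """Approximate snapshot check: any rule with enough writes and
    strictly more than its interval elapsed triggers a BGSAVE."""
    return any(changes_since_save >= changes and seconds_since_save > seconds
               for seconds, changes in rules)


def seconds_until_bgsave(writes_per_second: int,
                         rules: List[Tuple[int, int]] = SAVE_RULES,
                         limit: int = 3600) -> Optional[int]:
    """Simulate a steady write rate, one-second steps, and return how many
    seconds after the last save the next BGSAVE would be forked (None if
    no rule fires within `limit` seconds)."""
    for t in range(1, limit + 1):
        if bgsave_due(writes_per_second * t, t, rules):
            return t
    return None
```

For example, at a steady 200 writes/second `seconds_until_bgsave(200)` returns 61: the `save 60 10000` rule fires first, so a snapshot would be forked roughly once a minute, while a single write per second only triggers one every 301 seconds via `save 300 10`.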

Framework: Magento 1.7.2 with Cm_Cache_Backend_Redis

Given the information above, please let me know if there is anything I can do to mitigate the high CPU usage.