I have written a piece of multi-threaded software that runs a large number of simulations every day. This is a very CPU-intensive task, and I have been running the program on cloud services, usually on configurations with 1GB of RAM per core.

I am running CentOS 6.7, and /proc/cpuinfo reports that my four VPS cores run at 2.5GHz.

processor : 3

vendor_id : GenuineIntel

cpu family : 6

model : 63

model name : Intel(R) Xeon(R) CPU E5-2680 v3 @ 2.50GHz

stepping : 2

microcode : 1

cpu MHz : 2499.992

cache size : 30720 KB

physical id : 3

siblings : 1

core id : 0

cpu cores : 1

apicid : 3

initial apicid : 3

fpu : yes

fpu_exception : yes

cpuid level : 13

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon rep_good unfair_spinlock pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm arat xsaveopt fsgsbase bmi1 avx2 smep bmi2 erms invpcid

bogomips : 4999.98

clflush size : 64

cache_alignment : 64

address sizes : 40 bits physical, 48 bits virtual

power management:

With the rise in exchange rates, my VPS instances became more expensive, and I came across a "great deal" on used bare-metal servers.

I purchased four HP DL580 G5 servers, each with four Intel Xeon X7350s. Each machine therefore has 16x 2.93GHz cores and 16GB of RAM, which keeps the same 1GB-per-core ratio as my VPS setup.

processor : 15

vendor_id : GenuineIntel

cpu family : 6

model : 15

model name : Intel(R) Xeon(R) CPU X7350 @ 2.93GHz

stepping : 11

microcode : 187

cpu MHz : 1600.002

cache size : 4096 KB

physical id : 6

siblings : 4

core id : 3

cpu cores : 4

apicid : 27

initial apicid : 27

fpu : yes

fpu_exception : yes

cpuid level : 10

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall lm constant_tsc arch_perfmon pebs bts rep_good aperfmperf pni dtes64 monitor ds_cpl vmx est tm2 ssse3 cx16 xtpr pdcm dca lahf_lm dts tpr_shadow vnmi flexpriority

bogomips : 5866.96

clflush size : 64

cache_alignment : 64

address sizes : 40 bits physical, 48 bits virtual

power management:

It seemed like a great deal, as I could stop using VPS instances for these batch jobs. Now for the weird part...

- On the VPS instances I have been running 1.25 threads per core, and I do the same on the bare-metal servers. (The extra 0.25 thread compensates for idle time caused by network use.)

- On my VPS instances, using 44x 2.5GHz cores in total, I get nearly 900 simulations per minute.

- On my DL580s, using 64x 2.93GHz cores in total, I am only getting 300 simulations per minute.
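To put the gap in per-core terms, the figures in the list above can be divided out. This is only a small arithmetic sketch; the function name `per_core_rate` is made up for illustration, and "nearly 900" is treated as exactly 900.

```python
def per_core_rate(sims_per_minute, cores):
    """Simulations per minute delivered by each core."""
    return float(sims_per_minute) / cores

# Figures taken from the list above.
vps_rate = per_core_rate(900, 44)    # 44 VPS cores at 2.5GHz
dl580_rate = per_core_rate(300, 64)  # 64 DL580 cores at 2.93GHz
ratio = vps_rate / dl580_rate

print("VPS:   %.2f simulations/min/core" % vps_rate)
print("DL580: %.2f simulations/min/core" % dl580_rate)
print("Per-core ratio (VPS / DL580): %.2f" % ratio)
```

By this measure each VPS core does roughly four times the work of each DL580 core, even though its nominal clock is lower.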

I understand the DL580 has an older processor. But if I am running one thread per core, and the bare-metal server has a faster clock per core, why does it perform worse than my VPS instances?

I have no memory swap happening in any of the servers.

top shows my processors running at 100%. I get a load average of 18 (5 on the VPS).
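The two load averages are easier to compare once divided by the core count of each machine. The sketch below assumes both figures are per machine (16 cores on a DL580, 4 cores on a VPS); `load_per_core` is an illustrative name.

```python
def load_per_core(load_average, cores):
    """Load average normalised by the number of cores on the machine."""
    return float(load_average) / cores

dl580_load = load_per_core(18, 16)  # one DL580 with 16 cores
vps_load = load_per_core(5, 4)      # one VPS with 4 cores

print("DL580 load per core: %.3f" % dl580_load)
print("VPS load per core:   %.3f" % vps_load)
```

Both values come out slightly above 1, which is roughly what 1.25 threads per core would suggest, so neither machine appears to be short of runnable work.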

Is this simply how it is going to be, or am I missing something?

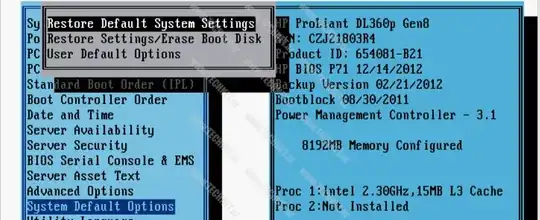

Running lscpu reports 1.6GHz on my bare-metal server. The same value appears in /proc/cpuinfo.

Is this information correct, or is it caused by misconfigured power management?

[BARE METAL] $ lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 16

On-line CPU(s) list: 0-15

Thread(s) per core: 1

Core(s) per socket: 4

Socket(s): 4

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 15

Stepping: 11

**CPU MHz: 1600.002**

BogoMIPS: 5984.30

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 4096K

NUMA node0 CPU(s): 0-15

[VPS] $ lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 4

On-line CPU(s) list: 0-3

Thread(s) per core: 1

Core(s) per socket: 1

Socket(s): 4

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 63

Stepping: 2

**CPU MHz: 2499.992**

BogoMIPS: 4999.98

Hypervisor vendor: KVM

Virtualization type: full

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 30720K

NUMA node0 CPU(s): 0-3
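One way to quantify the 1.6GHz reading is to compare the "cpu MHz" value against the nominal clock in the "model name" line. The following is a minimal sketch, assuming the /proc/cpuinfo layout shown above; `clock_ratio` is a hypothetical helper, and the sample text is copied from the bare-metal output.

```python
import re

def clock_ratio(cpuinfo_text):
    """Return (current_mhz, nominal_mhz, current/nominal) for the first CPU entry."""
    nominal = re.search(r"model name\s*:.*@\s*([\d.]+)GHz", cpuinfo_text)
    current = re.search(r"cpu MHz\s*:\s*([\d.]+)", cpuinfo_text)
    if not nominal or not current:
        raise ValueError("could not find 'model name' or 'cpu MHz'")
    nominal_mhz = float(nominal.group(1)) * 1000.0
    current_mhz = float(current.group(1))
    return current_mhz, nominal_mhz, current_mhz / nominal_mhz

# Two lines copied from the bare-metal /proc/cpuinfo output above.
sample = """model name : Intel(R) Xeon(R) CPU X7350 @ 2.93GHz
cpu MHz : 1600.002
"""
cur, nom, ratio = clock_ratio(sample)
print("%.0f MHz of %.0f MHz nominal (%.0f%%)" % (cur, nom, ratio * 100))
```

On a live machine the same function could be fed `open("/proc/cpuinfo").read()`. The value is a snapshot, so it may simply reflect frequency scaling at the moment it was read. If a cpufreq driver is loaded, `/sys/devices/system/cpu/cpu0/cpufreq/scaling_governor` should show which governor is active.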