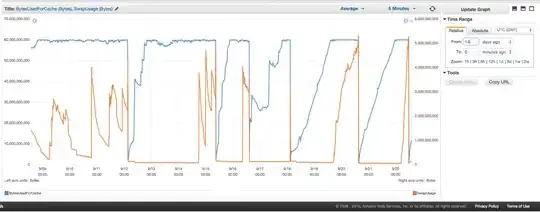

We have been having ongoing trouble with our ElastiCache Redis instance swapping. Amazon seems to have some crude internal monitoring in place which notices swap usage spikes and simply restarts the ElastiCache instance (thereby losing all our cached items). Here's the chart of BytesUsedForCache (blue line) and SwapUsage (orange line) on our ElastiCache instance for the past 14 days:

You can see the pattern: growing swap usage seems to trigger reboots of our ElastiCache instance, at which point we lose all our cached items (BytesUsedForCache drops to 0).

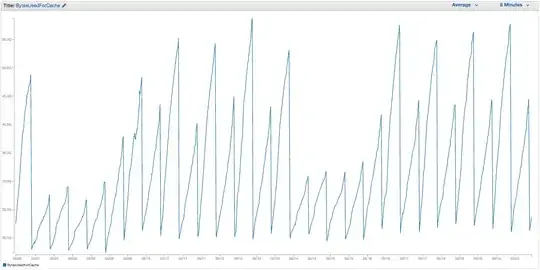

The 'Cache Events' tab of our ElastiCache dashboard has corresponding entries:

| Source ID | Type | Date | Event |
|---|---|---|---|
| cache-instance-id | cache-cluster | Tue Sep 22 07:34:47 GMT-400 2015 | Cache node 0001 restarted |
| cache-instance-id | cache-cluster | Tue Sep 22 07:34:42 GMT-400 2015 | Error restarting cache engine on node 0001 |
| cache-instance-id | cache-cluster | Sun Sep 20 11:13:05 GMT-400 2015 | Cache node 0001 restarted |
| cache-instance-id | cache-cluster | Thu Sep 17 22:59:50 GMT-400 2015 | Cache node 0001 restarted |
| cache-instance-id | cache-cluster | Wed Sep 16 10:36:52 GMT-400 2015 | Cache node 0001 restarted |
| cache-instance-id | cache-cluster | Tue Sep 15 05:02:35 GMT-400 2015 | Cache node 0001 restarted |

(snip earlier entries)

> SwapUsage: in normal usage, neither Memcached nor Redis should be performing swaps.
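Since the expected SwapUsage is essentially zero, one option is to watch the metric ourselves instead of finding out through a restart. Below is a minimal sketch, assuming boto3 is installed and AWS credentials are configured. It uses CloudWatch's `get_metric_statistics` on the `AWS/ElastiCache` namespace with the per-node `CacheClusterId`/`CacheNodeId` dimensions. The function names and the 50 MiB alert threshold are hypothetical choices for illustration.

```python
from datetime import datetime, timedelta, timezone


def fetch_swap_datapoints(cluster_id, node_id="0001", hours=24):
    """Fetch 5-minute Maximum SwapUsage (bytes) for one ElastiCache node.

    Assumes boto3 is installed and AWS credentials are configured; boto3 is
    imported here so swap_over_threshold() below works without it.
    """
    import boto3

    cloudwatch = boto3.client("cloudwatch")
    end = datetime.now(timezone.utc)
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/ElastiCache",
        MetricName="SwapUsage",
        Dimensions=[
            {"Name": "CacheClusterId", "Value": cluster_id},
            {"Name": "CacheNodeId", "Value": node_id},
        ],
        StartTime=end - timedelta(hours=hours),
        EndTime=end,
        Period=300,  # 5-minute buckets
        Statistics=["Maximum"],
    )
    return response["Datapoints"]


def swap_over_threshold(datapoints, threshold_bytes=50 * 1024 * 1024):
    """Return (timestamp, bytes) pairs, oldest first, where swap exceeded the threshold.

    CloudWatch returns datapoints in no guaranteed order, hence the sort.
    The 50 MiB default is an arbitrary, hypothetical alert level.
    """
    ordered = sorted(datapoints, key=lambda point: point["Timestamp"])
    return [
        (point["Timestamp"], point["Maximum"])
        for point in ordered
        if point["Maximum"] > threshold_bytes
    ]
```

Usage would be along the lines of `swap_over_threshold(fetch_swap_datapoints("cache-instance-id"))`, run from cron or a scheduled job. The fetch and the check are kept separate so the threshold logic can be tested without AWS access.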

Our relevant (non-default) settings:

- Instance type: `cache.r3.2xlarge`
- `maxmemory-policy`: `allkeys-lru` (we had been using the default `volatile-lru` previously without much difference)
- `maxmemory-samples`: 10
- `reserved-memory`: 2500000000
- Checking the `INFO` command output on the instance, I see `mem_fragmentation_ratio` between 1.00 and 1.05.
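For reference, `mem_fragmentation_ratio` is Redis's `used_memory_rss / used_memory`. The Redis `INFO` documentation notes that when RSS falls *below* `used_memory`, part of Redis's memory has been swapped out by the OS. Below is a minimal sketch that parses raw `INFO` text and recomputes both signals; the sample numbers are hypothetical. (With redis-py, `Redis.info("memory")` already returns a parsed dict, so only `memory_check` would be needed.)

```python
def parse_info(text):
    """Parse raw Redis INFO output into a flat {field: value-string} dict.

    INFO output consists of "# Section" header lines and "field:value" lines;
    headers and blank lines are skipped.
    """
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition(":")
        fields[key] = value
    return fields


def memory_check(fields):
    """Recompute the fragmentation ratio and flag the pattern that suggests swapping."""
    used = int(fields["used_memory"])      # bytes allocated by Redis
    rss = int(fields["used_memory_rss"])   # bytes resident in RAM, as seen by the OS
    return {
        # Same formula Redis uses for mem_fragmentation_ratio.
        "fragmentation_ratio": rss / used,
        # RSS below used_memory means some Redis pages are not resident,
        # i.e. they were probably swapped out by the OS.
        "redis_pages_swapped": rss < used,
    }


if __name__ == "__main__":
    # Hypothetical INFO memory excerpt; substitute real output from the node.
    sample = """# Memory
used_memory:59000000000
used_memory_rss:60180000000
mem_fragmentation_ratio:1.02
"""
    print(memory_check(parse_info(sample)))
```

Sampling this alongside the SwapUsage metric would show whether rising swap coincides with Redis's own pages leaving RAM (ratio dropping below 1.0) or with something else on the host.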

We have contacted AWS support and didn't get much useful advice: they suggested cranking up reserved-memory even higher (the default is 0, and we have 2.5 GB reserved). We do not have replication or snapshots set up for this cache instance, so I believe no BGSAVEs should be occurring and causing additional memory use.
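The "no BGSAVEs" assumption can be checked directly, not just inferred from the configuration. A BGSAVE or AOF rewrite forks the Redis process, and the fork shows up in `INFO persistence` (`rdb_bgsave_in_progress`, `aof_enabled`, `aof_rewrite_in_progress`) and `INFO stats` (`latest_fork_usec`, the duration of the most recent fork in microseconds). The sketch below reads those fields from raw `INFO` text. The helper names are hypothetical, and it assumes `latest_fork_usec` stays 0 when no fork has been recorded.

```python
def parse_info(text):
    """Parse raw Redis INFO output ("# Section" headers, "field:value" lines)."""
    fields = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            key, _, value = line.partition(":")
            fields[key] = value
    return fields


def fork_activity(fields):
    """Summarise the INFO fields that reveal BGSAVE / AOF-rewrite forks."""
    return {
        # "1" while a background RDB save is running
        "bgsave_in_progress": fields.get("rdb_bgsave_in_progress") == "1",
        "aof_enabled": fields.get("aof_enabled") == "1",
        "aof_rewrite_in_progress": fields.get("aof_rewrite_in_progress") == "1",
        # Non-zero once Redis has recorded a fork (assumed 0 otherwise)
        "fork_recorded": int(fields.get("latest_fork_usec", "0")) > 0,
    }


if __name__ == "__main__":
    # Hypothetical excerpt of INFO persistence + INFO stats output.
    sample = """# Persistence
rdb_bgsave_in_progress:0
aof_enabled:0
aof_rewrite_in_progress:0
# Stats
latest_fork_usec:0
"""
    print(fork_activity(parse_info(sample)))
```

If `fork_recorded` ever comes back `True` on this node, something is forking Redis despite the absence of snapshots or replication, and copy-on-write during that fork would be a candidate for the extra memory pressure.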

The maxmemory cap of a cache.r3.2xlarge is 62495129600 bytes. Although we hit our cap (minus our reserved-memory) quickly, it seems strange to me that the host operating system would feel pressured to use so much swap here, and so quickly, unless Amazon has cranked up the OS swappiness setting for some reason. Any ideas why we'd be causing so much swap usage on ElastiCache/Redis, or workarounds we might try?
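To put the memory numbers side by side, here is a quick worked calculation. It assumes, as the question implies, that the usable data budget is maxmemory minus reserved-memory. `data_budget` is a hypothetical helper name.

```python
GIB = 1024 ** 3


def data_budget(maxmemory, reserved_memory):
    """Bytes left for cached data once reserved-memory is set aside."""
    return maxmemory - reserved_memory


if __name__ == "__main__":
    maxmemory = 62_495_129_600       # cache.r3.2xlarge cap quoted above
    reserved_memory = 2_500_000_000  # our reserved-memory setting
    budget = data_budget(maxmemory, reserved_memory)
    print(f"maxmemory:       {maxmemory / GIB:.2f} GiB")
    print(f"reserved-memory: {reserved_memory / GIB:.2f} GiB "
          f"({reserved_memory / maxmemory:.1%} of maxmemory)")
    print(f"data budget:     {budget:,} bytes ({budget / GIB:.2f} GiB)")
```

With these values it reports a maxmemory of 58.20 GiB, 2.33 GiB (4.0%) reserved, and a data budget of 59,995,129,600 bytes (55.87 GiB).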