This is my storage network:

ESXi host (dual 10Gb NICs) >> 2x Netgear XS712T >> Synology RS10613xs+ (dual 10Gb NICs)

ESXi vmnic1 >> NG Switch 1 >> Syn NIC 1

ESXi vmnic2 >> NG Switch 2 >> Syn NIC 2

Hello.

My Synology device performs better using NFS instead of iSCSI. A lot better.

The simple text diagram above is how I have my devices connected at the moment with a test ESXi host. My intention is to have this setup in my production environment if I can get it working how I want it.

My requirement: an NFS share mounted as a datastore on my hosts must survive the failure of one of the Netgear switches.
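To make the requirement concrete, here is a minimal sketch that models the two physical paths from the diagram and shows what is left when one switch fails. It assumes there is no link between the two Netgear switches (the diagram shows none); `PATHS`, `surviving_paths` and `usable` are illustrative names, not part of any tool.

```python
# Physical paths taken from the diagram above: (host NIC, switch, Synology NIC).
# Assumption: the two switches are NOT connected to each other.
PATHS = [
    ("vmnic1", "NG Switch 1", "Syn NIC 1"),
    ("vmnic2", "NG Switch 2", "Syn NIC 2"),
]


def surviving_paths(failed_switch, paths=PATHS):
    """Return the paths that do not traverse the failed switch."""
    return [p for p in paths if p[1] != failed_switch]


def usable(path, host_active, syn_active):
    """A path carries traffic only if BOTH ends are actively using its NICs."""
    host_nic, _switch, syn_nic = path
    return host_nic == host_active and syn_nic == syn_active
```

Under that assumption, `surviving_paths("NG Switch 1")` leaves only the vmnic2 / Syn NIC 2 path, and `usable(...)` is true only once the host and the Synology have both failed over to that same side.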

The 10Gb NIC in the ESXi 5.5 U2 host is an Intel 82599 10Gb TN dual-port card, which is on the VMware HCL.

The Netgear XS712T switches (I know) do support LAG, but not across separate switches.

The Synology RS10613xs+ (DSM 5.1-5022) can bond the two NICs into either a LAG or "Active/Standby".

I'm not sure this is technically possible. Synology support initially said it should work but couldn't give me any examples. Netgear support suggested factory resetting the switches at the first sign of trouble and didn't really know anything (fair enough, they're not VMware experts). I don't have VMware support.

So configuring a LAG between the Synology NICs and the switches is pointless, since I only have one physical link per switch. Am I correct?

To create a LAG in this scenario I would need switches that support EtherChannel/LAG/LACP across multiple separate switches, e.g. top-end HP/Cisco.

I could put another dual-port 10Gb NIC in my host and try two separate LAGs, one to each switch, but I cannot fit another 10Gb NIC into my Synology :(

So I've set up my Synology with the two NICs bonded as "Active/Standby", which has generated a single NIC interface with a single virtual IP that I present to the teamed 10Gb NICs on my host.

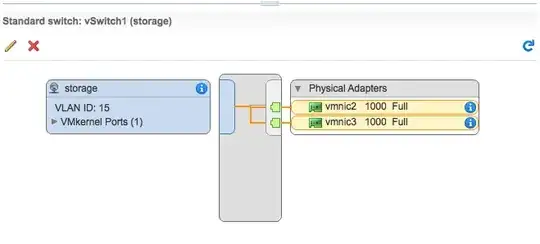

My host NIC is set like this:

1 vSwitch

1 VMkernel port with both 10Gb adapters

NIC teaming:

Load Balancing: Use explicit failover order

Failback: No

ALL OTHER SETTINGS DEFAULT

I can ping from my Synology to my ESXi and vice versa. I can remove a Netgear switch and the pings remain working. Yay!!
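Ping only proves ICMP reachability, so a second check that may be worth running from a test machine on the storage network during the switch pull is whether a fresh TCP connection to the NFS port still succeeds. This is a rough sketch under assumptions: `tcp_port_open` is a hypothetical helper, and it only tests a new connection, not the host's existing NFS session.

```python
import socket

NFS_PORT = 2049  # standard NFS TCP port


def tcp_port_open(host, port=NFS_PORT, timeout=2.0):
    """Return True if a NEW TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False
```

Usage would be something like `tcp_port_open("192.0.2.10")`, where `192.0.2.10` is a placeholder and not the real virtual IP of the Synology bond.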

But when I then mount an NFS datastore and repeat the test, the datastore goes offline and the VMkernel logs show: Device or filesystem XXXXXXXX has entered the All Paths Down Timeout state
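To line these events up against the moment a switch is pulled, a small sketch like the following could pick the affected device IDs out of collected log lines. It matches only the message text quoted above; the rest of the log line format is not assumed, and `find_apd_events` is a hypothetical helper name.

```python
import re

# Matches the message quoted above and captures the device/filesystem ID.
APD_PATTERN = re.compile(
    r"Device or filesystem (\S+) has entered the All Paths Down Timeout state"
)


def find_apd_events(lines):
    """Return the device/filesystem IDs from every APD timeout message found."""
    ids = []
    for line in lines:
        match = APD_PATTERN.search(line)
        if match:
            ids.append(match.group(1))
    return ids
```

It takes any iterable of strings, so an open log file copied off the host can be passed in directly.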

I tried lowering NFS.MaxQueueDepth to 64, which made it slightly less crashy, but the datastore still cannot survive a Netgear switch being removed.

Am I trying to do the impossible with what I have?

This is my first post, please go easy. Thanks