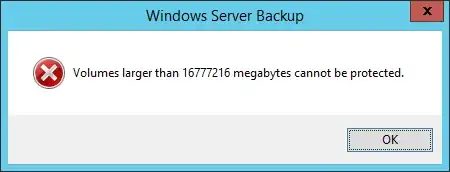

I'm trying to use Windows Server Backup to back up a RAID array on my new server, but when I do, I run into the error shown above.

The server is running Windows Server 2012 R2 and the array in question is 20TB in size (with 18TB usable); less than 1TB is currently being used.

I know that in Windows Server 2008 you couldn't back up volumes larger than 2TB because of a limitation of the VHD format, but that Microsoft has since switched to VHDX, which allows volumes of up to 64TB to be backed up. I'm also aware that, to take advantage of this, the drive in question must be GPT.

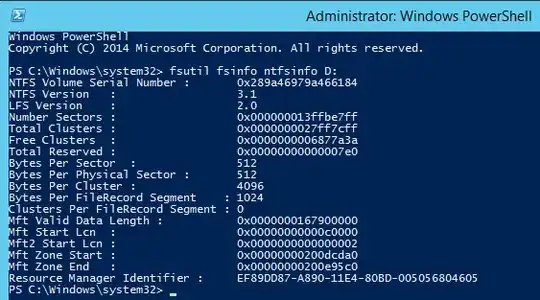

I have confirmed that my disk is, in fact, GPT.

When I run Windows Server Backup, I use the "Backup Once" option and back up to a network drive, with what I believe are the standard settings. But when I attempt to run the backup, I get the error seen above.

I'm not sure why this caps out at 16.7TB, since Windows Server Backup can back up volumes of up to 64TB. Can anyone give me some insight into why this may be happening or what I might be doing wrong?

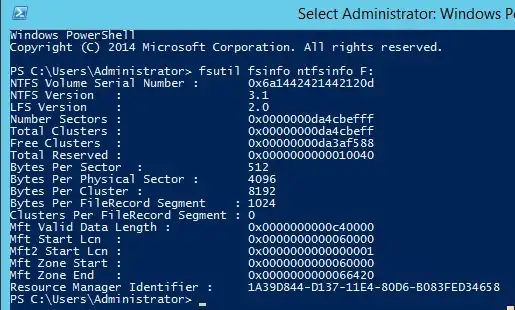

Update: I've received new drives and recreated the array, but I'm still getting the same error. I can confirm that my cluster count is under 2^32.
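For anyone checking the same thing, here is the cluster arithmetic as a quick Python sketch. It assumes NTFS allows at most 2^32 - 1 clusters per volume and that the "18TB usable" figure is in binary units (as Windows displays it); the helper names are my own. The actual values for a volume can be read on the server with `fsutil fsinfo ntfsinfo D:` (the "Bytes Per Cluster" and "Total Clusters" lines).

```python
# Rough check of a volume's cluster count against the NTFS cluster limit.
# Assumption: NTFS caps a volume at 2**32 - 1 clusters.
NTFS_MAX_CLUSTERS = 2**32 - 1
TIB = 2**40


def cluster_count(volume_bytes: int, cluster_bytes: int) -> int:
    """Clusters needed to cover the volume (a partial final cluster still counts)."""
    return -(-volume_bytes // cluster_bytes)  # ceiling division


# Assumption: "18TB usable" means 18 TiB, as Windows reports it.
volume = 18 * TIB

for kib in (4, 8, 16, 32, 64):
    n = cluster_count(volume, kib * 1024)
    status = "within limit" if n <= NTFS_MAX_CLUSTERS else "exceeds limit"
    print(f"{kib:>2} KiB clusters: {n:,} clusters ({status})")
```

With 4 KiB clusters an 18 TiB volume would need more than 2^32 clusters, so a volume this size must be formatted with 8 KiB or larger clusters, which is consistent with my cluster count being under 2^32.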

I read in this question that Windows Server Backup apparently doesn't support backing up to or from disks that don't use either 512-byte or 512e sectors. The file share I'm attempting to back up to uses 4K sectors. Could this be the underlying issue? If it helps, the share I'm backing up to is hosted on a CentOS server.
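To tell 512n, 512e and 4Kn apart on the CentOS side, the logical and physical sector sizes are what matter: 512e means 512-byte logical sectors on 4K physical sectors, while 4Kn means 4K logical sectors. Below is a minimal Python sketch that reads both values from Linux sysfs; the function names and the device name `sda` are hypothetical, and `blockdev --getss` / `blockdev --getpbsz` report the same two numbers. Note that it only describes the local disk on the CentOS host, not whatever the share itself reports to Windows.

```python
from pathlib import Path
from typing import Tuple


def sector_format(logical: int, physical: int) -> str:
    """Classify a disk from its logical and physical sector sizes in bytes."""
    if logical == 512 and physical == 512:
        return "512n"  # native 512-byte sectors
    if logical == 512 and physical == 4096:
        return "512e"  # 4K physical sectors emulating 512-byte logical sectors
    if logical == 4096 and physical == 4096:
        return "4Kn"  # native 4K sectors
    return f"other ({logical}/{physical})"


def read_sector_sizes(device: str) -> Tuple[int, int]:
    """Read sector sizes for a whole-disk device (e.g. 'sda', hypothetical) from sysfs."""
    queue = Path("/sys/block") / device / "queue"
    logical = int((queue / "logical_block_size").read_text())
    physical = int((queue / "physical_block_size").read_text())
    return logical, physical
```

On the file server, `print(sector_format(*read_sector_sizes("sda")))` would print the format for that disk (substitute the device that actually holds the share).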