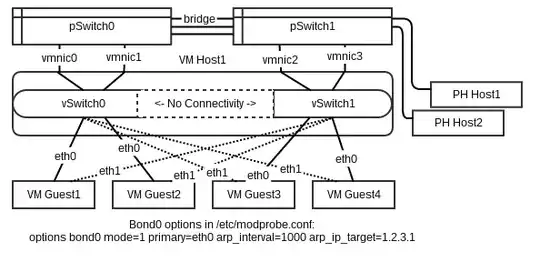

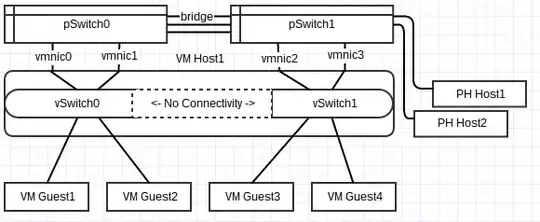

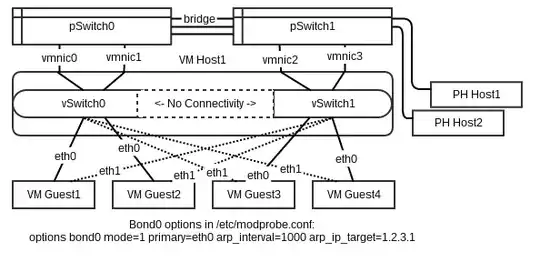

I have some servers in this configuration:

(complete configuration)

From VMGuest1, I am not able to ping either VMGuest3 or VMGuest4. I can, however, ping Host1 and Host2, which are attached to pSwitch1. The behavior is the same in the other direction: VMGuest3 and VMGuest4 cannot ping VMGuest1 or VMGuest2.

I don't have promiscuous mode enabled on any of these switches, nor do I have a bridge set up inside ESXi between the virtual switches. I know that one of these is usually necessary to get connectivity between two virtual switches. However, these switches are connected through their respective physical switches, which are bridged together.

Ping just times out, and the ARP cache entries look like this:

```
[root@vmguest1:~]# arp -a vmguest3
vmguest3.example.com (1.2.3.4) at <incomplete> on eth0
[root@vmguest1:~]# arp -a host1
host1.example.com (1.2.3.5) at 00:0C:64:97:1C:FF [ether] on eth0
```
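An `<incomplete>` entry means VMGuest1 broadcast an ARP request for 1.2.3.4 but never received a reply, so the failure happens at layer 2, before IP or ICMP come into play. Host1, by contrast, answered and its MAC address was cached. Below is a minimal sketch that sorts `arp -a` entries into resolved and unresolved. It assumes the net-tools output format shown above. The names `parse_arp_line` and `ArpEntry` are hypothetical helpers for illustration only.

```python
import re
from typing import NamedTuple, Optional

# Assumed net-tools `arp -a` line format, e.g.
# "host1.example.com (1.2.3.5) at 00:0C:64:97:1C:FF [ether] on eth0"
ARP_LINE = re.compile(
    r"^(?P<name>\S+) \((?P<ip>[\d.]+)\) at (?P<mac><incomplete>|[0-9A-Fa-f:]{17})"
    r"(?: \[\w+\])? on (?P<iface>\S+)"
)


class ArpEntry(NamedTuple):
    name: str
    ip: str
    mac: Optional[str]  # None when the ARP request was never answered
    iface: str

    @property
    def resolved(self) -> bool:
        return self.mac is not None


def parse_arp_line(line: str) -> Optional[ArpEntry]:
    """Parse one `arp -a` line; return None if it does not match the assumed format."""
    m = ARP_LINE.match(line.strip())
    if m is None:
        return None
    mac = m.group("mac")
    return ArpEntry(
        name=m.group("name"),
        ip=m.group("ip"),
        # "<incomplete>" means a request went out but no reply came back
        mac=None if mac == "<incomplete>" else mac.lower(),
        iface=m.group("iface"),
    )


if __name__ == "__main__":
    sample = """\
vmguest3.example.com (1.2.3.4) at <incomplete> on eth0
host1.example.com (1.2.3.5) at 00:0C:64:97:1C:FF [ether] on eth0
"""
    for line in sample.splitlines():
        entry = parse_arp_line(line)
        if entry is None:
            continue
        status = "resolved" if entry.resolved else "incomplete: no ARP reply received"
        print(f"{entry.name} ({entry.ip}) on {entry.iface}: {status}")
```

Run against the output above, this flags vmguest3 as unresolved and host1 as resolved. In other words, the ARP broadcast (or its reply) is not crossing between the two switch segments.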

VMGuest1 can reach hosts on pSwitch1, so why can't it get to hosts on vSwitch1 through pSwitch1 the same way?