I have been doing a lot of research on vSwitch configurations, but I am more confused now than before I started reading. Here is my situation: 3 ESX hosts (12 NICs each), 1 iSCSI SAN, and 2 Force 10 switches. Should I create individual vSwitches for MGMT, vMotion, VM, and iSCSI traffic, or do I need to group anything together in the same vSwitch? I am going to have 4 VLANs total, one for each of those items. Do I need to do any trunking on the physical switch, or can I just assign the correct VLAN to each physical switch port?

Why so many NICs? This can safely be accomplished in 4-6 NICs. – ewwhite Dec 18 '12 at 15:51

I think it was only a few more dollars to add a few additional NICs, which is why there are so many. – Joshua Dec 18 '12 at 16:44

Yeah, you don't need that many NICs. I'll answer below. – ewwhite Dec 18 '12 at 16:52

5 Answers

Use separate vSwitches with dedicated uplinks for Management, Data, and iSCSI traffic. You can share vMotion and Management in most cases, but if you have enough NICs to separate them, feel free.

You don't need to do trunking in this configuration; just put the physical ports in the correct VLAN and you should be all set. If you have multiple data VLANs that you want your VMs to be on, then you can trunk the data ports and configure the vSwitches appropriately, but it doesn't sound like this is what you need.
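
As a rough illustration of that rule, here is a minimal Python sketch: a switch port that carries a single VLAN can be an access port, and one that carries several needs to be a trunk. The VLAN IDs and the `switch_port_mode` helper are hypothetical and only there to show the decision.

```python
def switch_port_mode(vlans_on_port):
    """Return 'access' when a port carries one VLAN, 'trunk' when it carries several."""
    vlans = set(vlans_on_port)
    if not vlans:
        raise ValueError("a port must carry at least one VLAN")
    return "access" if len(vlans) == 1 else "trunk"


# Hypothetical VLAN IDs: one VLAN per traffic type, as described in the question.
uplinks = {
    "management": [10],
    "vmotion": [20],
    "vm": [30],
    "iscsi": [40],
}

port_modes = {name: switch_port_mode(vlans) for name, vlans in uplinks.items()}
```

With one VLAN per uplink, every port comes out as an access port. Only if the VM uplinks later carried more than one VLAN, for example `[30, 31]`, would those ports need to become trunks.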

If you haven't already spent the money, consider buying two 10Gbps NICs instead and running everything over those. It'll be a hell of a lot easier to set up and maintain, and I can vouch for its simplicity and performance.

MOAR isn't necessarily better :)

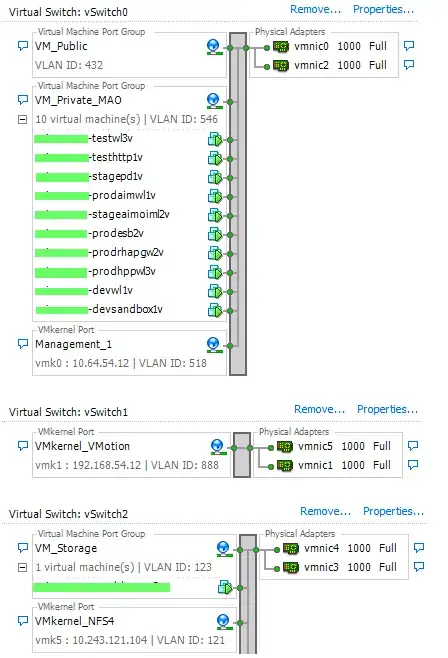

Here's a perfectly-resilient 6-NIC ESXi host setup, using:

2 pNICs - VMware VM traffic.

2 pNICs - VMware vMotion traffic.

2 pNICs - NFS storage. Could also be iSCSI traffic with MPIO.

You can trunk (I did in this case because the environment is multi-tenant), or you can assign individual ports to their respective VLANs. In the example here, each vSwitch has its two physical NICs plugged into different members of a Cisco 3750 switch stack. We're also using diversity across physical NIC cards, so we can lose one HBA, link, switch, etc. and still pass traffic.

If you're still using 1GbE links, I'm not sure much more can be gained by using more physical NICs (e.g. 12!). However, that depends on what you need your VMs to do.
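
To make the resiliency claim concrete, here is a minimal Python sketch that models a 6-NIC layout like the one above and checks that every vSwitch keeps at least one uplink after any single NIC, card, or switch failure. The `vmnic` numbering, card names, and stack member names are assumptions for illustration, not taken from the screenshot.

```python
from collections import namedtuple

# One uplink = one physical NIC port, the card it lives on, and the switch it plugs into.
Uplink = namedtuple("Uplink", ["nic", "card", "switch"])

# Hypothetical layout: each vSwitch spans both NIC cards and both stack members.
layout = {
    "vm_traffic": [Uplink("vmnic0", "card-a", "stack-1"), Uplink("vmnic3", "card-b", "stack-2")],
    "vmotion": [Uplink("vmnic1", "card-a", "stack-2"), Uplink("vmnic4", "card-b", "stack-1")],
    "storage": [Uplink("vmnic2", "card-a", "stack-1"), Uplink("vmnic5", "card-b", "stack-2")],
}


def survivors(layout, failed_field, failed_value):
    """Return, per vSwitch, the uplinks that remain after one component fails."""
    return {
        vswitch: [u for u in uplinks if getattr(u, failed_field) != failed_value]
        for vswitch, uplinks in layout.items()
    }


def tolerates_any_single_failure(layout):
    """True if every vSwitch keeps an uplink after any single NIC, card, or switch failure."""
    for field in Uplink._fields:
        values = {getattr(u, field) for uplinks in layout.values() for u in uplinks}
        for value in values:
            if any(not left for left in survivors(layout, field, value).values()):
                return False
    return True
```

Calling `tolerates_any_single_failure(layout)` on this layout returns `True`. Moving both uplinks of a vSwitch onto the same card or the same stack member makes it return `False`, which is the case the diversity is meant to avoid.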

I am assuming that VM_Storage is your SAN traffic. What does VM_Public do? Did you just arbitrarily pick your VLAN IDs? – Joshua Dec 18 '12 at 17:22

I have hundreds of ESXi hosts here, so the VLAN IDs are specific. VM_Public would be traffic going to *teh interwebz...* In this specific case, the VMs only communicate with each other and similar VMs on other hosts. VM_Storage is NFS SAN traffic. – ewwhite Dec 18 '12 at 17:25

+1 -- You *may* want to add additional NICs (or redundant pairs) if you have some particularly high-traffic VMs that saturate your main uplink (e.g. if you virtualize a backup system), but generally something like this is sane, and has less hardware to break :) – voretaq7 Dec 18 '12 at 17:57

You should configure at least one dedicated NIC for iSCSI traffic. Best practice suggests that you also dedicate a separate NIC each for Virtual Machine traffic, vMotion and Fault Tolerance. Best practice also suggests that you have two VMkernel ports configured for Management traffic.

As for the pSwitch, you shouldn't need to use trunk ports. The pSwitch ports should be configured as access ports with membership in the appropriate VLAN.

I should have no problem allocating 2 NICs per vSwitch. When you say to add a NIC for each VM, do you mean that if I have 8 VMs I should have 8 NICs assigned to that vSwitch? – Joshua Dec 18 '12 at 16:47

No, that isn't what I meant. I mean that your VM port group should have a dedicated NIC so that your VM traffic isn't going over the same vSwitch as your iSCSI, vMotion or FT traffic. You'll have multiple VMs in a VM port group (on one or more vSwitches). – joeqwerty Dec 18 '12 at 17:05

> Should I create individual vSwitches for MGMT, vMotion, VM, and iSCSI traffic?

VMware recommends that you do, and that each vSwitch be bound to different physical NICs.

I would do the following, assuming the iSCSI SAN has more than one NIC:

VLAN 1 - iSCSI traffic

VLAN 2 - vMotion traffic

VLAN 3 - Management traffic

VLAN 4 - VM traffic

vSwitch 1 - iSCSI

vSwitch 2 - vMotion

vSwitch 3 - Management

vSwitch 4 - VM

NIC 0 - vSwitch 1 connected to Force 10 switch 1

NIC 1 - vSwitch 1 connected to Force 10 switch 2

NIC 2 - vSwitch 2 connected to Force 10 switch 1

NIC 3 - vSwitch 2 connected to Force 10 switch 2

NIC 4 - vSwitch 3 connected to Force 10 switch 1

NIC 5 - vSwitch 3 connected to Force 10 switch 2

NIC 6 - vSwitch 4 connected to Force 10 switch 1

NIC 7 - vSwitch 4 connected to Force 10 switch 2

iSCSI SAN

NIC 0 - Force 10 switch 1

NIC 1 - Force 10 switch 2

Same on 2nd controller if it has one.

You could put the management and vMotion traffic on the same ports.

The ports connecting to the ESXi servers can be in untagged mode. It might be worth tagging the VM traffic network in case you need VMs on different VLANs in the future; then it is just a matter of trunking the new VLAN over the VM ports.
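
The NIC assignment above follows a simple pattern (consecutive NIC pairs per vSwitch, one NIC to each Force 10 switch), so it can be generated rather than typed out. The Python sketch below does that and renders the result as `esxcli` commands. It assumes ESXi 5.x or later `esxcli` syntax, that "NIC n" maps to `vmnicN`, and that the vSwitches are named `vSwitch1` through `vSwitch4`; check all of these against your hosts before running anything.

```python
TRAFFIC_TYPES = ["iSCSI", "vMotion", "Management", "VM"]
SWITCHES = ["Force 10 switch 1", "Force 10 switch 2"]


def build_plan(traffic_types, switches):
    """Assign consecutive NICs to each vSwitch, one NIC per physical switch."""
    plan = []
    nic = 0
    for index, traffic in enumerate(traffic_types, start=1):
        for switch in switches:
            plan.append({"nic": nic, "vswitch": index, "traffic": traffic, "switch": switch})
            nic += 1
    return plan


def esxcli_commands(plan):
    """Render the plan as esxcli commands (assumed vmnicN and vSwitchN naming)."""
    commands = []
    created = set()
    for row in plan:
        name = f"vSwitch{row['vswitch']}"
        if name not in created:
            # Create each standard vSwitch once, before attaching its uplinks.
            commands.append(f"esxcli network vswitch standard add --vswitch-name={name}")
            created.add(name)
        commands.append(
            "esxcli network vswitch standard uplink add "
            f"--uplink-name=vmnic{row['nic']} --vswitch-name={name}"
        )
    return commands


plan = build_plan(TRAFFIC_TYPES, SWITCHES)

if __name__ == "__main__":
    for command in esxcli_commands(plan):
        print(command)
```

The sketch only covers vSwitch creation and uplinks. Port groups, VMkernel interfaces, and VLAN IDs still need to be configured separately.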

So you could do 2 ports to switch 1 and 2 to switch 2 for extra throughput – Epaphus Dec 18 '12 at 17:25