Every 10 seconds or so, both of our web servers (Windows Server 2003, running IIS 6) log the same error in the event log:

> Event Type: Error
>
> Event Source: Application Popup
>
> Event Category: None
>
> Event ID: 333
>
> Date: 2009-08-18
>
> Time: 22:04:06
>
> User: N/A
>
> Computer: DFS273
>
> Description: An I/O operation initiated by the Registry failed unrecoverably. The Registry could not read in, or write out, or flush, one of the files that contain the system's image of the Registry.
>
> For more information, see Help and Support Center at http://go.microsoft.com/fwlink/events.asp.
>
> Data:
>
>     0000: 00 00 00 00 01 00 6c 00   ......l.
>     0008: 00 00 00 00 4d 01 00 c0   ....M..À
>     0010: 00 00 00 00 4d 01 00 c0   ....M..À
>     0018: 00 00 00 00 00 00 00 00   ........
>     0020: 00 00 00 00 00 00 00 00   ........
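For what it's worth, the Data section can be read as little-endian 32-bit words (assuming the usual x86 byte order). The small sketch below does that; the bytes `4d 01 00 c0` at offsets 0x0C and 0x14 come out as 0xC000014D, which as far as I can tell is the NTSTATUS value `STATUS_REGISTRY_IO_FAILED` from `ntstatus.h`, the same message as the description above. The helper names `parse_dump` and `dwords` are just my own for illustration.

```python
import struct

# Hex bytes copied from the "Data:" section of the event (ASCII column dropped).
DUMP = """
0000: 00 00 00 00 01 00 6c 00
0008: 00 00 00 00 4d 01 00 c0
0010: 00 00 00 00 4d 01 00 c0
0018: 00 00 00 00 00 00 00 00
0020: 00 00 00 00 00 00 00 00
"""

# Assumed mapping for illustration, based on the ntstatus.h constant name.
KNOWN_STATUS = {0xC000014D: "STATUS_REGISTRY_IO_FAILED"}


def parse_dump(text: str) -> bytes:
    """Turn 'offset: b0 b1 ...' lines into one bytes object."""
    data = bytearray()
    for line in text.strip().splitlines():
        _offset, _, hex_part = line.partition(":")
        data.extend(bytes.fromhex(hex_part.strip()))
    return bytes(data)


def dwords(data: bytes) -> list[tuple[int, int]]:
    """Split data into (offset, value) pairs of little-endian 32-bit words."""
    count = len(data) // 4
    values = struct.unpack("<%dI" % count, data[: count * 4])
    return [(i * 4, v) for i, v in enumerate(values)]


if __name__ == "__main__":
    # Print only the non-zero words, with a name where one is known.
    for offset, value in dwords(parse_dump(DUMP)):
        if value:
            name = KNOWN_STATUS.get(value, "")
            print(f"0x{offset:04X}: 0x{value:08X} {name}".rstrip())
```

Running it prints the three non-zero words: 0x006C0001 at offset 0x0004 and 0xC000014D at offsets 0x000C and 0x0014.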

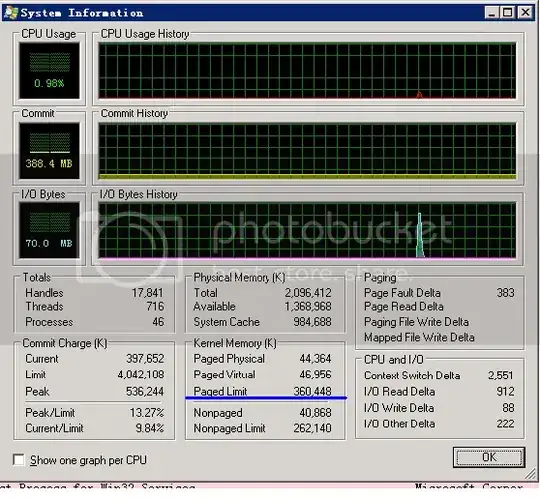

I can't find any information on what could cause this kind of error. CPU usage is high, at 90-100%, but almost 1 GB of RAM is unused.