www.hardware.fr, one of the biggest French hardware news sites, is partnered with www.ldlc.com, one of the biggest French online resellers. It has access to LDLC's return stats and has been publishing failure rate reports (motherboards, power supplies, RAM, graphics cards, HDDs, SSDs, ...) twice a year since 2009.

These are "early death" stats, covering 6 months to 1 year of use. Returns made directly to the manufacturer can't be counted, but most people return to the reseller during the first year, so this shouldn't affect comparisons between brands and models.

Generally speaking, HDD failure rates show less variation between brands and models. The rule is that bigger capacity means more platters, which means a higher failure rate, but nothing dramatic.

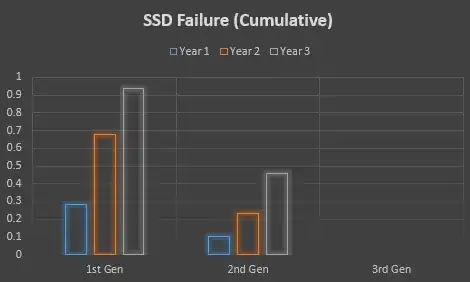

SSD failure rates are lower overall, but some SSD models were really bad, with around 50% returns for the infamous ones during the period you asked about (2013). This seems to have stopped now that the infamous brand has been bought.

Some SSD brands "optimise" their firmware just to get slightly higher benchmark results, and you sometimes end up with freezes, blue screens, ... This also seems to be less of a problem now than it was in 2013.

Failure rate reports are here:

2010

2011 (1)

2011 (2)

2012 (1)

2012 (2)

2013 (1)

2013 (2)

2014 (1)

2014 (2)

2015 (1)

2015 (2)

2016 (1)

2016 (2)