Recent versions of RHEL/CentOS (EL6) brought some interesting changes to the XFS filesystem I've depended on heavily for over a decade. I spent part of last summer chasing down an XFS sparse file situation resulting from a poorly-documented kernel backport. Others have had unfortunate performance issues or inconsistent behavior since moving to EL6.

XFS was my default filesystem for data and growth-partitions, as it offered stability, scalability and a good performance boost over the default ext3 filesystems.

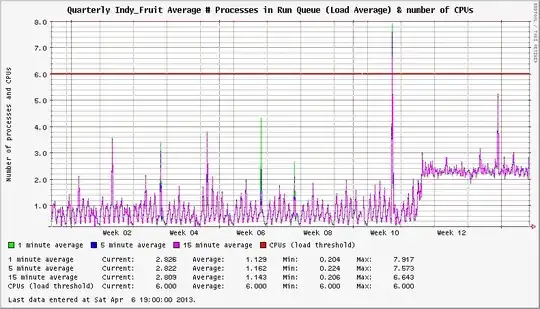

There's an issue with XFS on EL6 systems that surfaced in November 2012. I noticed that my servers were showing abnormaly-high system loads, even when idle. In one case, an unloaded system would show a constant load average of 3+. In others, there was a 1+ bump in load. The number of mounted XFS filesystems seemed to influence the severity of the load increase.

System has two active XFS filesystems. Load is +2 following upgrade to the affected kernel.

Digging deeper, I found a few threads on the XFS mailing list that pointed to an increased frequency of the xfsaild process sitting in the STAT D state. The corresponding CentOS Bug Tracker and Red Hat Bugzilla entries outline the specifics of the issue and conclude that this is not a performance problem; only an error in the reporting of system load in kernels newer than 2.6.32-279.14.1.el6.

WTF?!?

In a one-off situation, I understand that the load reporting may not be a big deal. Try managing that with your NMS and hundreds or thousands of servers! This was identified in November 2012 at kernel 2.6.32-279.14.1.el6 under EL6.3. Kernels 2.6.32-279.19.1.el6 and 2.6.32-279.22.1.el6 were released in subsequent months (December 2012 and February 2013) with no change to this behavior. There's even been a new minor release of the operating system since this issue was identified. EL6.4 was released and is now on kernel 2.6.32-358.2.1.el6, which exhibits the same behavior.

I've had a new system build queue and have had to work around the issue, either locking kernel versions at the pre-November 2012 release for EL6.3 or just not using XFS, opting for ext4 or ZFS, at a severe performance penalty for the specific custom application running atop. The application in question relies heavily on some of the XFS filesystem attributes to account for deficiencies in the application design.

Going behind Red Hat's paywalled knowledgebase site, an entry appears stating:

High load average is observed after installing kernel 2.6.32-279.14.1.el6. The high load average is caused by xfsaild going into D state for each XFS formatted device.

There is currently no resolution for this issue. It is currently being tracked via Bugzilla #883905. Workaround Downgrade the installed kernel package to a version lower then 2.6.32-279.14.1.

(except downgrading kernels not an option on RHEL 6.4...)

So we're 4+ months into this problem with no real fix planned for the EL6.3 or EL6.4 OS releases. There's a proposed fix for EL6.5 and a kernel source patch available... But my question is:

At what point does it make sense to depart from the OS-provided kernels and packages when the upstream maintainer has broken an important feature?

Red Hat introduced this bug. They should incorporate a fix into an errata kernel. One of the advantages of using enterprise operating systems is that they provide a consistent and predictable platform target. This bug disrupted systems already in production during a patch cycle and reduced confidence in deploying new systems. While I could apply one of the proposed patches to the source code, how scalable is that? It would require some vigilance to keep updated as the OS changes.

What's the right move here?

- We know this could possibly be fixed, but not when.

- Supporting your own kernel in a Red Hat ecosystem has its own set of caveats.

- What's the impact on support eligibility?

- Should I just overlay a working EL6.3 kernel on top of newly-build EL6.4 servers to gain the proper XFS functionality?

- Should I just wait until this is officially fixed?

- What does this say about the lack of control we have over enterprise Linux release cycles?

- Was relying on an XFS filesystem for so long a planning/design mistake?

Edit:

This patch was incorporated into the most recent CentOSPlus kernel release (kernel-2.6.32-358.2.1.el6.centos.plus). I'm testing this on my CentOS systems, but this doesn't help much for the Red Hat-based servers.