I've run XFS filesystems as data/growth partitions for nearly 10 years across various Linux servers.

I've noticed a strange phenomenon on recent CentOS/RHEL servers running 6.2 or later.

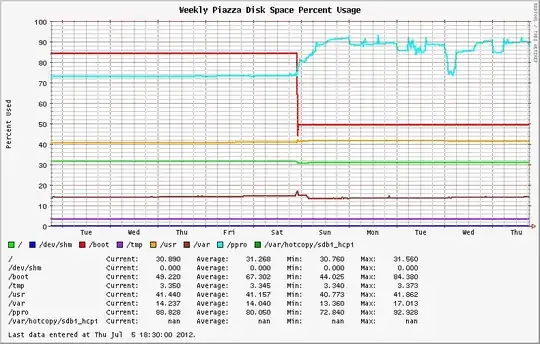

Filesystem usage that had been stable became highly variable after the move from EL6.0 and EL6.1 to the newer OS revision. Systems installed with EL6.2+ from the start exhibit the same behavior: wild swings in disk utilization on the XFS partitions (see the blue line in the graph).

Before and after. The upgrade from 6.1 to 6.2 occurred on Saturday.

The past quarter's disk usage graph of the same system, showing the fluctuations over the last week.

I started checking the filesystems for large files and runaway processes (log files, maybe?) and discovered that `du` and `ls` reported different sizes for my largest files. Running `du` with and without the `--apparent-size` switch illustrates the difference:

```
# du -skh SOD0005.TXT
29G SOD0005.TXT

# du -skh --apparent-size SOD0005.TXT
21G SOD0005.TXT
```
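To see the same gap in exact bytes for individual files, a small helper like the one below could be used. It is a sketch that assumes GNU coreutils `stat` (the `%s`, `%b` and `%B` format sequences); the function name `alloc_report` is hypothetical. A positive `extra` means more space is allocated than the file's length requires, while a truly sparse file would show a negative value.

```bash
#!/usr/bin/env bash
# Compare apparent size with allocated space for each file argument.
# Assumes GNU coreutils stat: %s = size in bytes, %b = blocks allocated,
# %B = size in bytes of each block counted by %b (usually 512).
# extra > 0: more space allocated than the file's length needs
# extra < 0: the file is sparse (holes that occupy no blocks)
alloc_report() {
    local f size blocks blksz alloc
    for f in "$@"; do
        # If stat fails, read sees EOF and the function returns non-zero.
        read -r size blocks blksz < <(stat -c '%s %b %B' -- "$f") || return 1
        alloc=$(( blocks * blksz ))
        printf '%s apparent=%d allocated=%d extra=%d\n' \
            "$f" "$size" "$alloc" "$(( alloc - size ))"
    done
}
```

For example, `alloc_report SOD0005.TXT` would print one line with the apparent size, the allocated size and the difference between them.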

A quick check across the entire filesystem with the `ncdu` utility yielded:

```
Total disk usage: 436.8GiB  Apparent size: 365.2GiB  Items: 863258
```

The filesystem is full of files that occupy more disk space than their apparent size (the opposite of sparse files), with about 71.6 GiB (436.8 - 365.2) of space lost compared to the previous version of the OS/kernel!
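To find out which files account for that gap, the filesystem could be scanned for files whose allocated space exceeds their apparent size. This is a sketch assuming GNU `find` (its `-printf` `%b` directive reports disk space used in 512-byte blocks and `%s` the size in bytes), `awk` and GNU `sort`; the function name `overalloc_top` is hypothetical.

```bash
#!/usr/bin/env bash
# List the files under DIR whose allocated space most exceeds their apparent
# size, largest excess first ("EXTRA_BYTES PATH" per line).
# Assumes GNU find -printf (%b = 512-byte blocks used, %s = size in bytes).
# File names containing newlines are not handled.
overalloc_top() {
    local dir=$1 count=${2:-20}
    [ -d "$dir" ] || { echo "usage: overalloc_top DIR [COUNT]" >&2; return 1; }
    # -xdev keeps the scan on the filesystem that holds DIR
    find "$dir" -xdev -type f -printf '%b %s %p\n' |
        awk '{
            extra = $1 * 512 - $2
            if (extra > 0) {
                sub(/^[^ ]+ [^ ]+ /, "")   # keep only the path, spaces included
                printf "%.0f %s\n", extra, $0
            }
        }' |
        sort -rn | head -n "$count"
}
```

Pointed at the XFS mount point (for example `overalloc_top /path/to/xfs/mount 20`, where the path is a placeholder), it should show whether the excess is spread across the filesystem or concentrated in a few large, actively written files.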

I pored through Red Hat Bugzilla and the changelogs for reports of the same behavior or any new announcements regarding XFS.

Nada.

The upgrade took the kernel from 2.6.32-131.17.1.el6 to 2.6.32-220.23.1.el6; the upstream version (2.6.32) did not change, only the Red Hat release number.

I checked file fragmentation with the `filefrag` tool. Some of the biggest files on the XFS partition had thousands of extents. Running an online defrag with `xfs_fsr -v` during a slow period of activity temporarily reduced disk usage (see Wednesday in the first graph above). However, usage ballooned again as soon as heavy system activity resumed.
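The fragmentation check can be repeated over the largest files in one pass. This sketch assumes GNU `find`/`sort` and the e2fsprogs `filefrag` tool, whose summary line reads `<file>: <n> extent found` or `<file>: <n> extents found`; the function name `extents_of_largest` is hypothetical, and `?` is printed when `filefrag` is missing or cannot map a file.

```bash
#!/usr/bin/env bash
# Print "extents<TAB>path" for the COUNT largest files under DIR.
# Assumes GNU find/sort and filefrag's "<file>: <n> extent(s) found" summary.
extents_of_largest() {
    local dir=$1 count=${2:-10} f n
    [ -d "$dir" ] || { echo "usage: extents_of_largest DIR [COUNT]" >&2; return 1; }
    # Size-sorted list of regular files on this filesystem only, largest first.
    find "$dir" -xdev -type f -printf '%s\t%p\n' |
        sort -rn | head -n "$count" | cut -f2- |
        while IFS= read -r f; do
            # Pull the extent count out of filefrag's summary line.
            n=$(filefrag "$f" 2>/dev/null |
                sed -n 's/.*: \([0-9][0-9]*\) extents\{0,1\} found$/\1/p')
            printf '%s\t%s\n' "${n:-?}" "$f"
        done
}
```

Running it before and after `xfs_fsr` would show whether the extent counts of the largest files climb back as activity resumes.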

What is happening here?