In past few days I've been trying to understand the weirdness which is happening in our infrastructure but I haven't been able to figure it our so I'm turning to you guys to give me some hints.

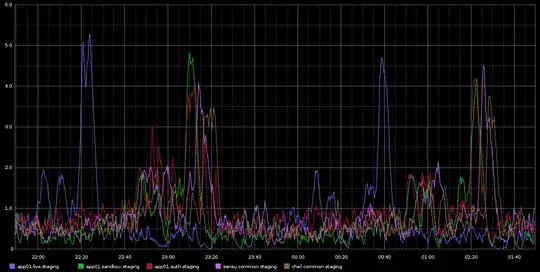

I've been noticing in Graphite, spikes in load_avg which are happening with deadly regularity approximately every 2 hours - it's not exactly 2 hours but it is very regular. I'm attaching a screenshot of this I took from Graphite

I've got stuck in investigating this - the regularity of this was leading me to thinking that it's some kind of cron job or something like that but there are NO cronjobs running on these servers - actually these are VMs running in Rackspace cloud. What I'm looking for is some kind of indication of might be causing these issues and how to investigate this further.

The servers are fairly idle - this is a staging environment so there's almost not traffic coming in/there should be no load on them. These are all 4 virtual cores VMs. What I know for sure is that we are taking a bunch of Graphite samples about every 10 seconds but if that's the cause of the load then I'd expect it to be constantly high rather than happening every 2 hours in waves in different servers.

Any help how to investigate this would be greatly appreciated!

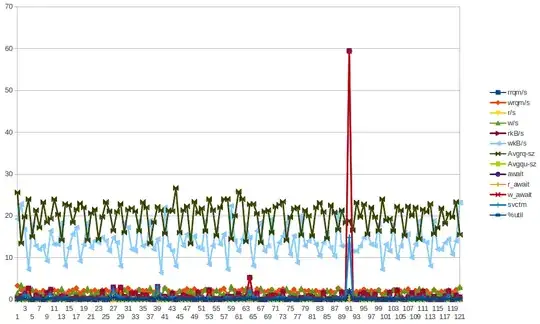

Here are some data from sar for app01 - which is the first blue spike on the picture above - I wasnt able to draw ANY conclusions from the data. Also not that the bytes write spike you see happening every half an hour (NOT EVERY 2 HOURS) is due to chef-client running every 30 minutes. I'll try to gather more data even though I already have done that but couldn't really draw any conclusions from those either.

LOAD

09:55:01 PM runq-sz plist-sz ldavg-1 ldavg-5 ldavg-15 blocked

10:05:01 PM 0 125 1.28 1.26 0.86 0

10:15:01 PM 0 125 0.71 1.08 0.98 0

10:25:01 PM 0 125 4.10 3.59 2.23 0

10:35:01 PM 0 125 0.43 0.94 1.46 3

10:45:01 PM 0 125 0.25 0.45 0.96 0

10:55:01 PM 0 125 0.15 0.27 0.63 0

11:05:01 PM 0 125 0.48 0.33 0.47 0

11:15:01 PM 0 125 0.07 0.28 0.40 0

11:25:01 PM 0 125 0.46 0.32 0.34 0

11:35:01 PM 2 130 0.38 0.47 0.42 0

11:45:01 PM 2 131 0.29 0.40 0.38 0

11:55:01 PM 2 131 0.47 0.53 0.46 0

11:59:01 PM 2 131 0.66 0.70 0.55 0

12:00:01 AM 2 131 0.81 0.74 0.57 0

CPU

09:55:01 PM CPU %user %nice %system %iowait %steal %idle

10:05:01 PM all 5.68 0.00 3.07 0.04 0.11 91.10

10:15:01 PM all 5.01 0.00 1.70 0.01 0.07 93.21

10:25:01 PM all 5.06 0.00 1.74 0.02 0.08 93.11

10:35:01 PM all 5.74 0.00 2.95 0.06 0.13 91.12

10:45:01 PM all 5.05 0.00 1.76 0.02 0.06 93.10

10:55:01 PM all 5.02 0.00 1.73 0.02 0.09 93.13

11:05:01 PM all 5.52 0.00 2.74 0.05 0.08 91.61

11:15:01 PM all 4.98 0.00 1.76 0.01 0.08 93.17

11:25:01 PM all 4.99 0.00 1.75 0.01 0.06 93.19

11:35:01 PM all 5.45 0.00 2.70 0.04 0.05 91.76

11:45:01 PM all 5.00 0.00 1.71 0.01 0.05 93.23

11:55:01 PM all 5.02 0.00 1.72 0.01 0.06 93.19

11:59:01 PM all 5.03 0.00 1.74 0.01 0.06 93.16

12:00:01 AM all 4.91 0.00 1.68 0.01 0.08 93.33

IO

09:55:01 PM tps rtps wtps bread/s bwrtn/s

10:05:01 PM 8.88 0.15 8.72 1.21 422.38

10:15:01 PM 1.49 0.00 1.49 0.00 28.48

10:25:01 PM 1.54 0.00 1.54 0.03 29.61

10:35:01 PM 8.35 0.04 8.31 0.32 411.71

10:45:01 PM 1.58 0.00 1.58 0.00 30.04

10:55:01 PM 1.52 0.00 1.52 0.00 28.36

11:05:01 PM 8.32 0.01 8.31 0.08 410.30

11:15:01 PM 1.54 0.01 1.52 0.43 29.07

11:25:01 PM 1.47 0.00 1.47 0.00 28.39

11:35:01 PM 8.28 0.00 8.28 0.00 410.97

11:45:01 PM 1.49 0.00 1.49 0.00 28.35

11:55:01 PM 1.46 0.00 1.46 0.00 27.93

11:59:01 PM 1.35 0.00 1.35 0.00 26.83

12:00:01 AM 1.60 0.00 1.60 0.00 29.87

NETWORK:

10:25:01 PM IFACE rxpck/s txpck/s rxkB/s txkB/s rxcmp/s txcmp/s rxmcst/s

10:35:01 PM lo 8.36 8.36 2.18 2.18 0.00 0.00 0.00

10:35:01 PM eth1 7.07 4.77 5.24 2.42 0.00 0.00 0.00

10:35:01 PM eth0 2.30 1.99 0.24 0.51 0.00 0.00 0.00

10:45:01 PM lo 8.35 8.35 2.18 2.18 0.00 0.00 0.00

10:45:01 PM eth1 3.69 3.45 0.65 2.22 0.00 0.00 0.00

10:45:01 PM eth0 1.50 1.33 0.15 0.36 0.00 0.00 0.00

10:55:01 PM lo 8.36 8.36 2.18 2.18 0.00 0.00 0.00

10:55:01 PM eth1 3.66 3.40 0.64 2.19 0.00 0.00 0.00

10:55:01 PM eth0 0.79 0.87 0.08 0.29 0.00 0.00 0.00

11:05:01 PM lo 8.36 8.36 2.18 2.18 0.00 0.00 0.00

11:05:01 PM eth1 7.29 4.73 5.25 2.41 0.00 0.00 0.00

11:05:01 PM eth0 0.82 0.89 0.09 0.29 0.00 0.00 0.00

11:15:01 PM lo 8.34 8.34 2.18 2.18 0.00 0.00 0.00

11:15:01 PM eth1 3.67 3.30 0.64 2.19 0.00 0.00 0.00

11:15:01 PM eth0 1.27 1.21 0.11 0.34 0.00 0.00 0.00

11:25:01 PM lo 8.32 8.32 2.18 2.18 0.00 0.00 0.00

11:25:01 PM eth1 3.43 3.35 0.63 2.20 0.00 0.00 0.00

11:25:01 PM eth0 1.13 1.09 0.10 0.32 0.00 0.00 0.00

11:35:01 PM lo 8.36 8.36 2.18 2.18 0.00 0.00 0.00

11:35:01 PM eth1 7.16 4.68 5.25 2.40 0.00 0.00 0.00

11:35:01 PM eth0 1.15 1.12 0.11 0.32 0.00 0.00 0.00

11:45:01 PM lo 8.37 8.37 2.18 2.18 0.00 0.00 0.00

11:45:01 PM eth1 3.71 3.51 0.65 2.20 0.00 0.00 0.00

11:45:01 PM eth0 0.75 0.86 0.08 0.29 0.00 0.00 0.00

11:55:01 PM lo 8.30 8.30 2.18 2.18 0.00 0.00 0.00

11:55:01 PM eth1 3.65 3.37 0.64 2.20 0.00 0.00 0.00

11:55:01 PM eth0 0.74 0.84 0.08 0.28 0.00 0.00 0.00

For people curious about cronjobs. Here's the summary of all cronjobs set up on the server ( I picked app01 but this is happening on a few other servers too with the same cronjobs set up)

$ ls -ltr /etc/cron*

-rw-r--r-- 1 root root 722 Apr 2 2012 /etc/crontab

/etc/cron.monthly:

total 0

/etc/cron.hourly:

total 0

/etc/cron.weekly:

total 8

-rwxr-xr-x 1 root root 730 Dec 31 2011 apt-xapian-index

-rwxr-xr-x 1 root root 907 Mar 31 2012 man-db

/etc/cron.daily:

total 68

-rwxr-xr-x 1 root root 2417 Jul 1 2011 popularity-contest

-rwxr-xr-x 1 root root 606 Aug 17 2011 mlocate

-rwxr-xr-x 1 root root 372 Oct 4 2011 logrotate

-rwxr-xr-x 1 root root 469 Dec 16 2011 sysstat

-rwxr-xr-x 1 root root 314 Mar 30 2012 aptitude

-rwxr-xr-x 1 root root 502 Mar 31 2012 bsdmainutils

-rwxr-xr-x 1 root root 1365 Mar 31 2012 man-db

-rwxr-xr-x 1 root root 2947 Apr 2 2012 standard

-rwxr-xr-x 1 root root 249 Apr 9 2012 passwd

-rwxr-xr-x 1 root root 219 Apr 10 2012 apport

-rwxr-xr-x 1 root root 256 Apr 12 2012 dpkg

-rwxr-xr-x 1 root root 214 Apr 20 2012 update-notifier-common

-rwxr-xr-x 1 root root 15399 Apr 20 2012 apt

-rwxr-xr-x 1 root root 1154 Jun 5 2012 ntp

/etc/cron.d:

total 4

-rw-r--r-- 1 root root 395 Jan 6 18:27 sysstat

$ sudo ls -ltr /var/spool/cron/crontabs

total 0

$

As you can see there are no HOURLY cronjobs. Only daily/weekly etc.

I have gathered bunch of stats (vmstat, mpstat, iostat) - however hard I'm trying I just can't see any leads which would suggest any VM component misbehaving...I'm starting to lean towards potential problems at hypervisor. Feel free to have a look at the stats The gist starts with sar -q output around the "offending" time and then you can see vm,mp and iostats....

Basically it's still a total mystery to me ...