We using standard mongo setup: 2 replicas + 1 arbiter. Both replica servers use same AWS m1.medium with RAID10 EBS.

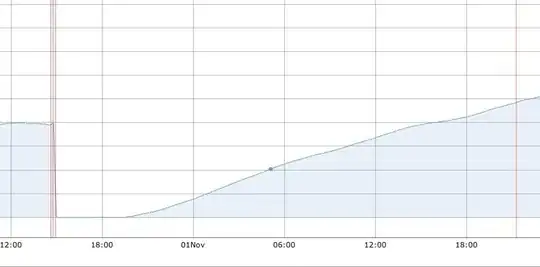

We experiencing constantly growing replication lag on secondary replica.

I tried to do full-resync, you can see it on graph, but it helped only for some hours. Our mongo usage is really low now, and frankly i can't understan why it can be.

iostat 1 for secondary:

avg-cpu: %user %nice %system %iowait %steal %idle

80.39 0.00 2.94 0.00 16.67 0.00

Device: tps kB_read/s kB_wrtn/s kB_read kB_wrtn

xvdap1 0.00 0.00 0.00 0 0

xvdb 0.00 0.00 0.00 0 0

xvdfp4 12.75 0.00 189.22 0 193

xvdfp3 12.75 0.00 189.22 0 193

xvdfp2 7.84 0.00 40.20 0 41

xvdfp1 7.84 0.00 40.20 0 41

md127 19.61 0.00 219.61 0 224

mongostat for secondary (why 100% locks? i guess its the problem):

insert query update delete getmore command flushes mapped vsize res faults locked % idx miss % qr|qw ar|aw netIn netOut conn set repl time

*10 *0 *16 *0 0 2|4 0 30.9g 62.4g 1.65g 0 107 0 0|0 0|0 198b 1k 16 replset-01 SEC 06:55:37

*4 *0 *8 *0 0 12|0 0 30.9g 62.4g 1.65g 0 91.7 0 0|0 0|0 837b 5k 16 replset-01 SEC 06:55:38

*4 *0 *7 *0 0 3|0 0 30.9g 62.4g 1.64g 0 110 0 0|0 0|0 342b 1k 16 replset-01 SEC 06:55:39

*4 *0 *8 *0 0 1|0 0 30.9g 62.4g 1.64g 0 82.9 0 0|0 0|0 62b 1k 16 replset-01 SEC 06:55:40

*3 *0 *7 *0 0 5|0 0 30.9g 62.4g 1.6g 0 75.2 0 0|0 0|0 466b 2k 16 replset-01 SEC 06:55:41

*4 *0 *7 *0 0 1|0 0 30.9g 62.4g 1.64g 0 138 0 0|0 0|1 62b 1k 16 replset-01 SEC 06:55:42

*7 *0 *15 *0 0 3|0 0 30.9g 62.4g 1.64g 0 95.4 0 0|0 0|0 342b 1k 16 replset-01 SEC 06:55:43

*7 *0 *14 *0 0 1|0 0 30.9g 62.4g 1.64g 0 98 0 0|0 0|0 62b 1k 16 replset-01 SEC 06:55:44

*8 *0 *17 *0 0 3|0 0 30.9g 62.4g 1.64g 0 96.3 0 0|0 0|0 342b 1k 16 replset-01 SEC 06:55:45

*7 *0 *14 *0 0 3|0 0 30.9g 62.4g 1.64g 0 96.1 0 0|0 0|0 186b 2k 16 replset-01 SEC 06:55:46

mongostat for primary

insert query update delete getmore command flushes mapped vsize res faults locked % idx miss % qr|qw ar|aw netIn netOut conn set repl time

12 30 20 0 0 3 0 30.9g 62.6g 641m 0 0.9 0 0|0 0|0 212k 619k 48 replset-01 M 06:56:41

5 17 10 0 0 2 0 30.9g 62.6g 641m 0 0.5 0 0|0 0|0 159k 429k 48 replset-01 M 06:56:42

9 22 16 0 0 3 0 30.9g 62.6g 642m 0 0.7 0 0|0 0|0 158k 276k 48 replset-01 M 06:56:43

6 18 12 0 0 2 0 30.9g 62.6g 640m 0 0.7 0 0|0 0|0 93k 231k 48 replset-01 M 06:56:44

6 12 8 0 0 3 0 30.9g 62.6g 640m 0 0.3 0 0|0 0|0 80k 125k 48 replset-01 M 06:56:45

8 21 14 0 0 9 0 30.9g 62.6g 641m 0 0.6 0 0|0 0|0 118k 419k 48 replset-01 M 06:56:46

10 34 20 0 0 6 0 30.9g 62.6g 640m 0 1.3 0 0|0 0|0 164k 527k 48 replset-01 M 06:56:47

6 21 13 0 0 2 0 30.9g 62.6g 641m 0 0.7 0 0|0 0|0 111k 477k 48 replset-01 M 06:56:48

8 21 15 0 0 2 0 30.9g 62.6g 641m 0 0.7 0 0|0 0|0 204k 336k 48 replset-01 M 06:56:49

4 12 8 0 0 8 0 30.9g 62.6g 641m 0 0.5 0 0|0 0|0 156k 530k 48 replset-01 M 06:56:50

Mongo version: 2.0.6