I have a Synology 1812+ NAS with eight 3 TB drives configured as RAID 5. It's running DSM 4.1. It was purchased to replace USB drives, consolidate storage, and hold short-term OS X backups made with Time Machine. The device and drives are only 2 months old.

Roughly every other week, I have started getting I/O errors from two of the drives. The log shows the following error:

Read error at internal disk [3] sector 2586312968.

And later on:

Bad sector at md2 disk3 sector 250049936 has been corrected.

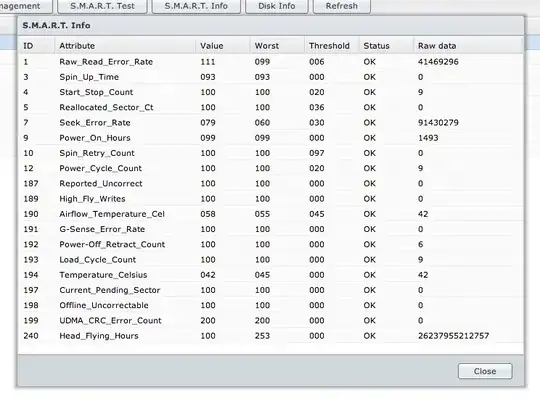
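To keep track of which disks and sectors are affected over time, the log messages can be tallied with a small script. This is only a minimal sketch in Python, assuming the log lines have exactly the two formats quoted above; the function name `tally_disk_events` and the regular expressions are my own and not anything provided by DSM.

```python
import re
from collections import defaultdict

# Patterns assumed from the two messages quoted above;
# any other DSM log format is simply ignored.
READ_ERROR = re.compile(r"Read error at internal disk \[(\d+)\] sector (\d+)")
BAD_SECTOR = re.compile(r"Bad sector at (\w+) disk(\d+) sector (\d+) has been corrected")


def tally_disk_events(lines):
    """Group reported sector numbers by disk number (hypothetical helper)."""
    events = defaultdict(lambda: {"read_errors": [], "corrected": []})
    for line in lines:
        match = READ_ERROR.search(line)
        if match:
            events[int(match.group(1))]["read_errors"].append(int(match.group(2)))
            continue
        match = BAD_SECTOR.search(line)
        if match:
            events[int(match.group(2))]["corrected"].append(int(match.group(3)))
    return dict(events)


# The two log lines from this post.
log = [
    "Read error at internal disk [3] sector 2586312968.",
    "Bad sector at md2 disk3 sector 250049936 has been corrected.",
]

for disk, info in sorted(tally_disk_events(log).items()):
    print(disk, info)
```

Grouping by disk number makes it easier to see whether the errors keep coming from the same two drives, even when the sector numbers differ.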

The sectors never match. The recommendation is to run an extended S.M.A.R.T. test on the drives. I did, and these are the values I got:

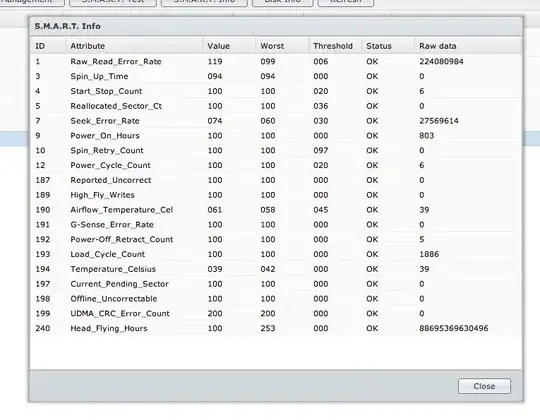

I then ran an extended S.M.A.R.T. test on one of the drives for which I had received no complaints, and here are the values I got:

The values look very similar. It is unclear to me whether there is a problem, and if there isn't, what is the point of a S.M.A.R.T. test if it doesn't reveal any real problem? How should I interpret these results, and how will I know when it's time to replace a drive?