For a project, I'm using a SQL Server 2008 R2. One table has a filestream column.

I've made some load tests, and now the database has ~20GB used.

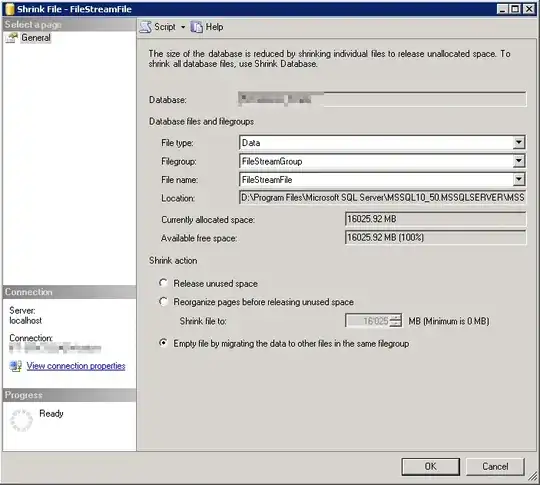

I've empty tables, except several(configuration tables). But my database was still using a lot of space. So I used the Task -> Shrink -> Database / Files But my database is still using something like 16GB.

I found that it's the filestream file is still using a lot of space.

The problem is that I need to backup this database to export it on the final production server, and event if I indicate to compress the backup I got a file more than 3.5Go. Not convenient to store and upload.

And I'm planning much bigger test, so I want to know how to shrink that empty space.

When I'm trying:

I get this exception:

The properties SIZE, MAXSIZE, or FILEGROWTH cannot be specified for the FILESTREAM data

file 'FileStreamFile'. (Microsoft SQL Server, Error: 5509)

So what should I do?

I found several topics with this error but they was about removing the filestream column.