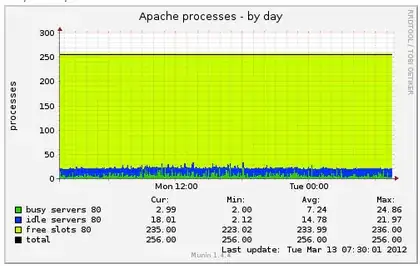

We're running a heavy Drupal website that performs financial modeling. We seem to have some sort of memory leak: over time, the memory used by Apache grows while the number of Apache processes remains stable:

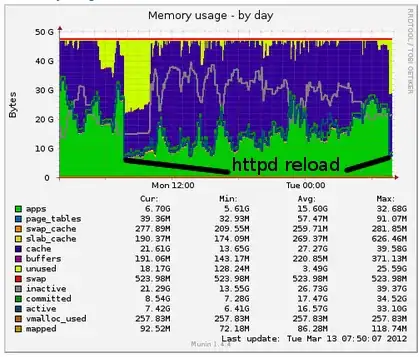

We know the memory problem comes from Apache/PHP because whenever we issue `/etc/init.d/httpd reload`, the memory usage drops (see the screenshot above and the CLI outputs below):

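Before httpd reload (`$ free`, values in KiB):

| | total | used | free | shared | buffers | cached |
|---|---|---|---|---|---|---|
| Mem: | 49447692 | 45926468 | 3521224 | 0 | 191100 | 22609728 |
| -/+ buffers/cache: | | 23125640 | 26322052 | | | |
| Swap: | 2097144 | 536552 | 1560592 | | | |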

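After httpd reload (`$ free`, values in KiB):

| | total | used | free | shared | buffers | cached |
|---|---|---|---|---|---|---|
| Mem: | 49447692 | 28905752 | 20541940 | 0 | 191360 | 22598428 |
| -/+ buffers/cache: | | 6115964 | 43331728 | | | |
| Swap: | 2097144 | 536552 | 1560592 | | | |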
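The `-/+ buffers/cache` row is `used` minus `buffers` and `cached`, so the two outputs can be compared directly. A short Python check, using only the numbers copied from the outputs above, shows that the reload released about 16 GiB of non-cache memory while the page cache changed by only about 11 MiB, which is consistent with the drop coming from the Apache/PHP processes rather than from the cache:

```python
# Values copied from the two `free` outputs above (units: KiB).
before = {"used": 45926468, "buffers": 191100, "cached": 22609728}
after = {"used": 28905752, "buffers": 191360, "cached": 22598428}


def used_excluding_cache(mem):
    """Reproduce the '-/+ buffers/cache' used column: used - buffers - cached."""
    return mem["used"] - mem["buffers"] - mem["cached"]


app_before = used_excluding_cache(before)  # 23125640 KiB
app_after = used_excluding_cache(after)    # 6115964 KiB
released_kib = app_before - app_after      # 17009676 KiB
cache_delta_kib = before["cached"] - after["cached"]  # 11300 KiB

print(f"released by reload: {released_kib} KiB ({released_kib / 1024**2:.1f} GiB)")
print(f"page cache change: {cache_delta_kib} KiB")
```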

Each Apache process is assigned a PHP memory_limit of 512MB, which explains the high memory usage despite the low volume of requests, and a max_execution_time of 120 seconds, which should terminate any script that runs longer and should therefore prevent the constant growth in memory usage we're seeing.
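Because memory_limit caps what a single PHP script may allocate inside one Apache process, a rough ceiling for Apache's footprint is the number of processes times 512 MB. The back-of-the-envelope sketch below uses the `total` figure from `free`. The process count from the graph isn't reproduced in the text, so `num_processes` is a hypothetical input, and shared pages and Apache's own per-process overhead are ignored:

```python
TOTAL_RAM_KIB = 49447692       # "total" column of the `free` output above
MEMORY_LIMIT_KIB = 512 * 1024  # PHP memory_limit = 512M, applied per script/process


def worst_case_kib(num_processes, limit_kib=MEMORY_LIMIT_KIB):
    """Upper bound if every Apache/PHP process reached memory_limit at once."""
    return num_processes * limit_kib


def max_processes_at_limit(total_kib=TOTAL_RAM_KIB, limit_kib=MEMORY_LIMIT_KIB):
    """How many processes could sit at memory_limit before physical RAM is exhausted."""
    return total_kib // limit_kib


print(max_processes_at_limit())  # 94
```

For example, with a hypothetical 40 processes, `worst_case_kib(40)` gives 20971520 KiB (20 GiB).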

Q: How could we investigate what is causing this memory leak?

Ideally I'm looking for troubleshooting steps I can perform on the system without having to bother the dev team.
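One example of a check that needs no code changes is to sample the resident memory of each Apache child from /proc and see whether the same PIDs keep growing, which would match the stable process count in the graph. This is a minimal sketch that assumes Python 3 is installed (RHEL 5 ships Python 2.4 by default) and that the processes are named `httpd`. It reads only the `Name:` and `VmRSS:` lines of `/proc/<pid>/status`, since `/proc/<pid>/comm` does not exist on RHEL 5's 2.6.18 kernel. `httpd_rss_kib` and `rss_growth` are hypothetical helper names:

```python
import os
import sys
import time


def httpd_rss_kib(proc_root="/proc", name="httpd"):
    """Return {pid: VmRSS in KiB} for processes whose status Name equals `name`."""
    snapshot = {}
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # skip non-PID entries such as /proc/meminfo
        status_path = os.path.join(proc_root, entry, "status")
        try:
            with open(status_path) as f:
                # Each line looks like "Key:\tvalue"; split on the first colon only.
                fields = dict(line.split(":", 1) for line in f if ":" in line)
        except OSError:
            continue  # process exited or is unreadable; skip it
        if fields.get("Name", "").strip() != name:
            continue
        if "VmRSS" in fields:  # kernel threads have no VmRSS line
            # Value looks like "   123456 kB"; keep the number only.
            snapshot[int(entry)] = int(fields["VmRSS"].split()[0])
    return snapshot


def rss_growth(before, after):
    """RSS change in KiB for PIDs present in both snapshots."""
    return {pid: after[pid] - before[pid] for pid in after if pid in before}


if __name__ == "__main__":
    interval = int(sys.argv[1]) if len(sys.argv) > 1 else 600  # seconds
    first = httpd_rss_kib()
    time.sleep(interval)
    second = httpd_rss_kib()
    # Largest growth first: long-lived PIDs near the top point at per-process growth.
    for pid, delta in sorted(rss_growth(first, second).items(), key=lambda kv: -kv[1]):
        print(f"pid {pid}: {first[pid]} -> {second[pid]} KiB ({delta:+d})")
```

Saved under a hypothetical name such as `httpd_rss.py`, it could be run as `python3 httpd_rss.py 600` to compare two snapshots taken ten minutes apart.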

Additional info:

OS: RHEL 5.6

PHP: 5.3

Drupal: 6.x

MySQL: 5.6

FYI, we're aware of the swapping issue, which we're investigating separately. It is unrelated to the memory leak, which we observed before the swapping started.