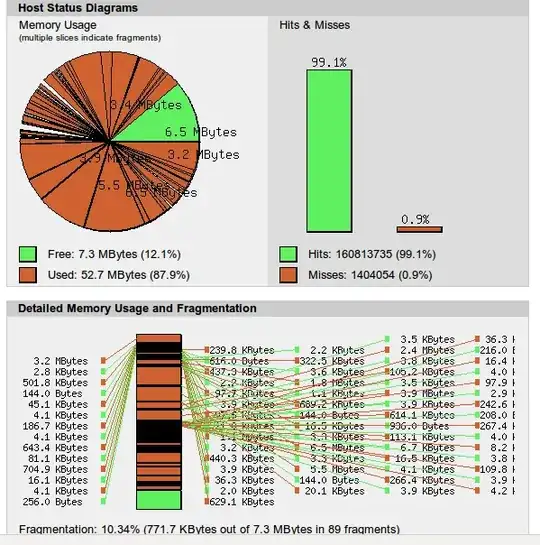

I would like to optimize APC further, but I am not sure where to start. Here is a screenshot after one week of running with the current configuration:

I am unsure about the following points:

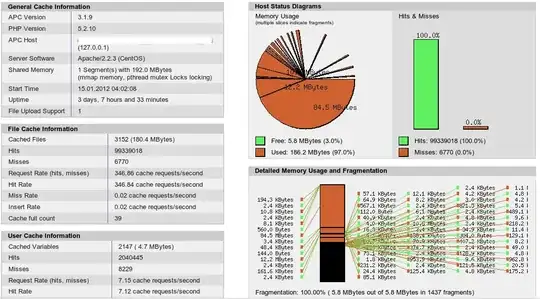

- Am I right that the fragmentation happens because the cache is also used as a user cache?

- Why does the fragmentation bar show 100% of only 5.8MB when I allocated 192MB in total?

- Is it just a rendering problem that the circle under "Memory Usage" is not fully closed? The MB values below it do add up. (The circle looks fine right after a restart; it only becomes like this as the cache gets more and more fragmented.)

- Since the hit rate is really good, I am not sure whether the fragmentation is a big problem. Do you think I can still optimize it?
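To check the fragmentation figure outside of the apc.php page, something like the following could be used. It is only a minimal sketch based on my reading of apc.php, which I have not verified: I assume the percentage is the share of *free* memory that sits in free blocks smaller than 5MB, not a share of the full `apc.shm_size`. The function `fragmentation_stats` and the sample array are hypothetical; only `apc_sma_info()` and the `block_lists` / `size` / `offset` keys come from APC itself.

```php
<?php
// Hypothetical helper (not part of APC): summarises the free-block list
// in the array shape returned by apc_sma_info().
function fragmentation_stats(array $sma, $threshold = 5242880)
{
    $freeTotal  = 0; // bytes in all free blocks
    $fragmented = 0; // bytes in free blocks smaller than $threshold
    $blocks     = 0; // number of free blocks

    foreach ($sma['block_lists'] as $segment) {
        foreach ($segment as $block) {
            $freeTotal += $block['size'];
            if ($block['size'] < $threshold) {
                $fragmented += $block['size'];
            }
            $blocks++;
        }
    }

    // Assumption: the percentage is relative to FREE memory,
    // not to the total size configured in apc.shm_size.
    $percent = $freeTotal > 0 ? 100.0 * $fragmented / $freeTotal : 0.0;

    return array(
        'free_bytes'       => $freeTotal,
        'fragmented_bytes' => $fragmented,
        'free_blocks'      => $blocks,
        'percent'          => $percent,
    );
}

// On the server this would be: fragmentation_stats(apc_sma_info())
// Made-up sample data so the sketch runs without APC loaded:
$sample = array(
    'num_seg'     => 1,
    'seg_size'    => 192 * 1024 * 1024,
    'avail_mem'   => 3 * 1024 * 1024,
    'block_lists' => array(
        array(
            array('size' => 1048576, 'offset' => 1024),
            array('size' => 2097152, 'offset' => 9000000),
        ),
    ),
);

$stats = fragmentation_stats($sample);
printf(
    "%.2f%% of %d free bytes in %d blocks\n",
    $stats['percent'],
    $stats['free_bytes'],
    $stats['free_blocks']
);
```

With the made-up sample, every free block is below the 5MB threshold, so the sketch reports 100% even though only 3MB of the 192MB segment is free. If my assumption about apc.php is right, that would be the same effect as the "100% of 5.8MB" in the screenshot.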

I am mostly interested in getting those questions answered. Only then can I understand APC better and make adjustments myself.

Some details: Drupal and Magento are both running on this server. Drupal also uses APC as a user cache.

The question for me now is how I could optimize it. I could allocate more RAM, but I am not sure whether that would really help much.

UPDATE: Here is the configuration:

    ; The size of each shared memory segment in MB.
    apc.shm_size = 192M

    ; Prevent files larger than this value from getting cached. Defaults to 1M.
    apc.max_file_size = 2M

    ; The number of seconds a cache entry is allowed to idle in a slot in case
    ; this cache entry slot is needed by another entry.
    apc.ttl = 3600

As you can see, it is pretty minimal at the moment.
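For context, these are the other directives that look relevant to the user cache and to fragmentation. The values below are the documented APC defaults, not values I have set. I am assuming nothing else on the server overrides them, and I have not checked the effective values with `phpinfo()`.

```ini
; Documented defaults, listed as an assumption (not set explicitly on this server).

; Seconds a user cache entry may idle in a slot before it can be replaced.
; 0 means entries are never evicted individually; the whole cache is
; expunged when it fills up.
apc.user_ttl = 0

; Seconds a cache entry may remain on the garbage-collection list.
apc.gc_ttl = 3600

; Number of shared memory segments to allocate.
apc.shm_segments = 1

; Check the file modification time on every request.
apc.stat = 1
```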