I have a daily ETL process in SSIS that builds my warehouse so we can provide day-over-day reports.

I have two servers - one for SSIS and the other for the SQL Server Database. The SSIS server (SSIS-Server01) is an 8CPU, 32GB RAM box. The SQL Server database (DB-Server) is another8CPU, 32GB RAM box. Both are VMWare virtual machines.

In its oversimplified form, the SSIS reads 17 Million rows (about 9GB) from a single table on the DB-Server, unpivots them to 408M rows, does a few lookups and a ton of calculations, and then aggregates it back to about 8M rows that are written to a brand new table on the same DB-Server every time (this table will then be moved into a partition to provide day-over-day reports).

I have a loop that processes 18 months worth of data at a time - a grand total of 10 years of data. I chose 18 months based on my observation of RAM Usage on SSIS-Server - at 18 months it consumes 27GB of RAM. Any higher than that, and SSIS starts buffering to disk and the performance nosedives.

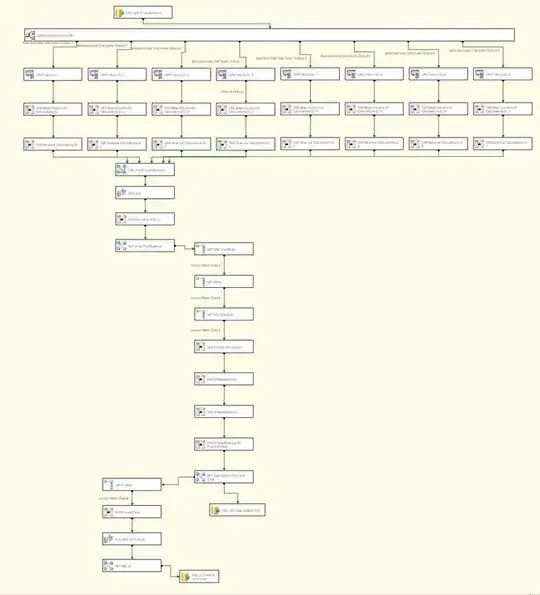

Here is my dataflow http://img207.imageshack.us/img207/4105/dataflow.jpg

I am using Microsoft's Balanced Data Distributor to send data down 8 parallel paths to maximize resource usage. I do a union before starting work on my aggregations.

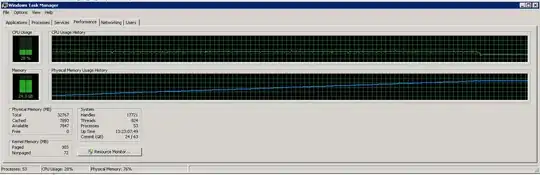

Here is the task manager graph from the SSIS server

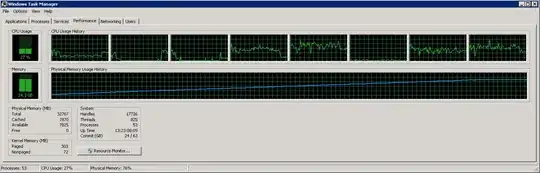

Here is another graph showing the 8 individual CPUs

As you can see from these images, the memory usage slowly increases to about 27G as more and more rows are read and processed. However the CPU usage is constant around 40%.

The second graph shows that we are only using 4 (sometimes 5) CPUs out of 8.

I am trying to make the process run faster (it is only using 40% of the available CPU).

How do I go about making this process run more efficiently (least time, most resources)?