I'm using Synology Hyper Backup to back up my NAS to AWS S3. To reduce cost, I added a lifecycle rule to the S3 bucket that moves the data to AWS Glacier after a couple of days.

Now I want to restore the data. To do that, I need to reverse that step and bring all the data back to S3 so that Synology's Hyper Backup can retrieve it.

I already clicked on the respective bucket -> Initiate restore.

It says that the restoration could take 12 - 24 hours. However, it's been days now and the respective data still shows the storage class "Deep glacier".

Any idea what is going wrong?

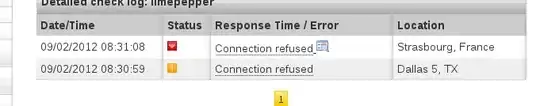

This is a screenshot of the respective bucket. As one can see, two files are still marked as "Deep Glacier" although I initiated the restore action multiple times for them.

Update

Here is a related question/answer on Stack Overflow (which seems to be less esoteric than Server Fault...)

Update 2: It seems the problem was that there were many more files in subfolders which I had overlooked. I'm currently trying to restore everything in the bucket recursively. Will update when finished.
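
For anyone in the same situation, here is a rough sketch of what a recursive restore could look like with boto3 instead of clicking through the console. It is not what the console does internally, just one possible approach. The function name `restore_all`, the 7-day retention, the `Bulk` tier, and the bucket name in the usage comment are my own assumptions; adjust them to your setup. It lists every key (S3 has no real folders, so listing without a delimiter already covers "subfolders"), skips objects that are not archived or that already have a restore requested, and requests a restore for the rest.

```python
def restore_all(client, bucket, prefix="", days=7, tier="Bulk"):
    """Request a restore for every archived object under `prefix`.

    `client` is expected to behave like boto3.client("s3").
    Returns the list of keys for which a restore was requested.
    """
    archived_classes = ("GLACIER", "DEEP_ARCHIVE")
    requested = []

    # list_objects_v2 returns at most 1000 keys per call, so paginate.
    paginator = client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            key = obj["Key"]
            if obj.get("StorageClass") not in archived_classes:
                continue  # not archived, nothing to restore

            # head_object includes a "Restore" field once a restore has
            # been requested (in progress or already completed).
            head = client.head_object(Bucket=bucket, Key=key)
            if "Restore" in head:
                continue

            client.restore_object(
                Bucket=bucket,
                Key=key,
                RestoreRequest={
                    "Days": days,  # how long the restored copy stays available
                    "GlacierJobParameters": {"Tier": tier},
                },
            )
            requested.append(key)
    return requested


# Usage (needs boto3 and AWS credentials; the bucket name is a placeholder):
#   import boto3
#   restore_all(boto3.client("s3"), "my-hyperbackup-bucket")
```

Passing the client in as a parameter keeps the function easy to try against a stub before running it on a real bucket, since restore requests are billed per object.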