I am having this issue with Django + Gunicorn + Kubernetes.

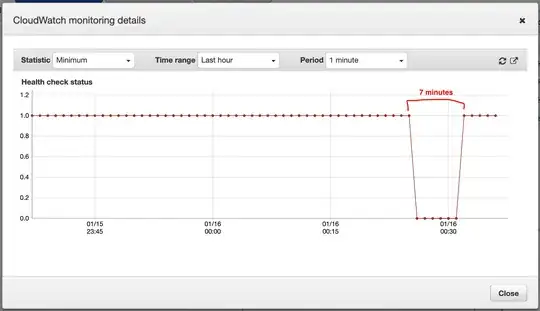

When I deploy a new release to Kubernetes, 2 containers start up with my current image. Once Kubernetes registers them as ready, which they are since the logs show that gunicorn is receiveing requests, the website is down for several minutes. It just times out for roughly 7-10 minutes, until it's fully available again:

The logs show requests, that are coming in and returning 200 responses, but when I try to open the website through the browser, it times out. Also health checkers like AWS Route53 notify me, that the website is not reachable for a few minutes.

I have tried to many things, playing around with gunicorn workers/threads, etc. But I just can't get it working to switch to a new deployment without downtime.

Here are my configurations (just the parts I think are relevant):

Requirements

django-cms==3.7.4 # https://pypi.org/project/django-cms/

django==2.2.16 # https://pypi.org/project/Django/

gevent==20.9.0 # https://pypi.org/project/gevent/

gunicorn==20.0.4 # https://pypi.org/project/gunicorn/

Gunicorn config

/usr/local/bin/gunicorn config.wsgi \

-b 0.0.0.0:5000 \

-w 1 \

--threads 3 \

--timeout 300 \

--graceful-timeout 300 \

--chdir=/app \

--access-logfile -

Kubernetes Config

livenessProbe:

path: "/health/"

initialDelaySeconds: 60

timeoutSeconds: 600

scheme: "HTTP"

probeType: "httpGet"

readinessProbe:

path: "/health/"

initialDelaySeconds: 60

timeoutSeconds: 600

scheme: "HTTP"

probeType: "httpGet"

resources:

limits:

cpu: "3000m"

memory: 12000Mi

requests:

cpu: "3000m"

memory: 12000Mi

It's worth noting, the codebase is roughly 20K lines large. In my opinion not very large. Also, the traffic is not very high, roughly 500-1000 users on average.

In terms of infrastructure, I am using the following AWS services and respective instance types:

Server Instances (3 Instances running, running 2 pods for my Django application)

m5a.xlarge (4 vCPUS, 16GB Memory)

Database (Amazon Aurora RDS)

db.r4.large (2 vCPUs, 16GB RAM)

Redis (ElastiCache)

cache.m3.xlarge (13.3GB memory)

ElasticSearch

m4.large.elasticsearch (2 vCPUS, 8GB RAM)

I rarely ask questions on Stack Overflow, so if you need more information, please let me know and I can provide them.