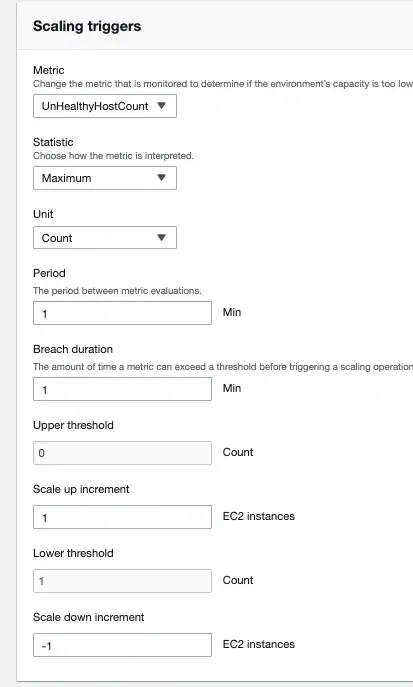

I have my scaling triggers set to look for an un-healthy host count but it does not seem to be working.

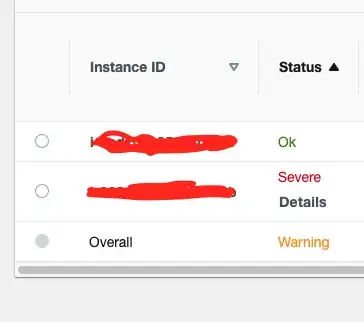

Now to test this - I am SSHing into one of my instances and halting the HTTPD service. Then, when I navigate to the health overview, I will immediately see that the server I SSHd into now has the status of severe.

I would assume that at this point, after 1 minute has passed (as per my rules) a new server would be created, but that is not happening.

If I am understanding my rules correctly - there is now 1 (the above the upper threshold) unhealthy server, so we increment up 1. And then once the number of unhealthy servers is 0 (below the lower threshold) then remove 1 sever.

But yeah, I waited around 5 minutes and no new EC2 servers were provisioned.

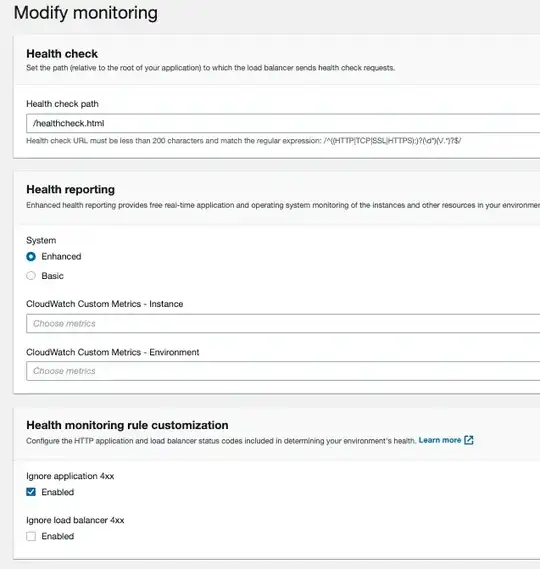

I also have some settings for the health check:

Is this conflicting with my autoscaling rules somehow? I thought that the healthcheck file needs to return a 200 response to be considered healthy and if HTTPD is halted - they it would not return that response.

So what gives?