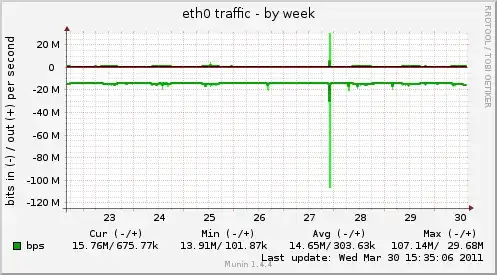

I'm scraping some metrics (openstack cinder volume sizes) every 15 minutes, and the results produce a discontinuous graph, like this:

(That's the result of the simple query `cinder_volume_size_gb`.)

The metrics "exist" for about five minutes after each scrape, but then disappear until the next scrape. What configuration settings would influence this behavior?
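The symptom can be reproduced with a small Python simulation. This is a sketch under one assumption: each point on the graph shows the newest sample that is less than a fixed lookback window old (5 minutes here, matching the observed duration; Prometheus' default query lookback is also 5 minutes). The names `visible_value`, `timeline`, and `LOOKBACK_MIN` are hypothetical and only model the behavior; they are not part of any Prometheus API.

```python
from typing import Optional

SCRAPE_INTERVAL_MIN = 15  # scrape interval described in the question
LOOKBACK_MIN = 5          # assumed lookback window (hypothetical model parameter)


def visible_value(t: int, samples: dict[int, float], lookback: int = LOOKBACK_MIN) -> Optional[float]:
    """Return the newest sample taken at or before minute t that is less
    than `lookback` minutes old, or None if no such sample exists."""
    candidates = [ts for ts in samples if 0 <= t - ts < lookback]
    if not candidates:
        return None
    return samples[max(candidates)]


def timeline(samples: dict[int, float], end: int, lookback: int = LOOKBACK_MIN) -> str:
    """Render one character per minute: '#' if a value is visible, '.' if not."""
    return "".join(
        "#" if visible_value(t, samples, lookback) is not None else "."
        for t in range(end)
    )


# One sample every 15 minutes, over a 45-minute window.
samples = {t: 10.0 for t in range(0, 45, SCRAPE_INTERVAL_MIN)}

print(timeline(samples, 45))               # 5-minute lookback: gaps between scrapes
print(timeline(samples, 45, lookback=15))  # lookback >= scrape interval: no gaps
```

With a 5-minute window, each scrape stays visible for 5 of every 15 minutes, which matches the graph; once the window is at least as long as the scrape interval, the line becomes continuous.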