Unsane (album)

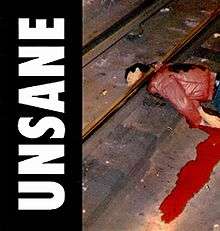

Unsane is the self-titled debut album by Unsane, released in 1991 through Matador Records. It is the only studio album by the group to feature founding member Charlie Ondras, who died of a heroin overdose in 1992, during the New Music Seminar in New York, while the band was touring in support of the album.[2] The album's cover art, depicting a decapitated corpse on subway tracks, was given to the band by a friend who worked on the investigation of the case.[3]

| Unsane | |
|---|---|
| Studio album by | Unsane |
| Released | November 26, 1991 |
| Recorded | January 16, 1991 |
| Studio | Fun City (New York City, New York) |
| Genre | Noise rock,[1] post-hardcore |
| Length | 36:52 |
| Label | Matador |
| Producer | Wharton Tiers, Unsane |

Death metal band Entombed covered "Vandal-X" on their self-titled compilation album in 1997.

Reception

| Review scores | |
|---|---|
| Source | Rating |
| Allmusic | |

Patrick Kennedy of Allmusic called it a brilliant and daring debut that "assaults the senses like the Swans or Foetus before them, but tempers that art-scum priggishness with clear roots in punk and classic rock."[1]

Track listing

All tracks are written by Unsane.

| No. | Title | Length |
|---|---|---|
| 1. | "Organ Donor" | 2:10 |
| 2. | "Bath" | 2:54 |
| 3. | "Maggot" | 3:17 |
| 4. | "Cracked Up" | 2:57 |
| 5. | "Slag" | 2:43 |
| 6. | "Exterminator" | 5:55 |
| 7. | "Vandal-X" | 2:04 |
| 8. | "HLL." | 2:31 |
| 9. | "AZA-2000" | 2:33 |
| 10. | "Cut" | 2:48 |
| 11. | "Action Man" | 2:28 |
| 12. | "White Hand" | 4:26 |
| | Total length: | 36:52 |


References

1. Kennedy, Patrick. "Allmusic ((( Unsane > Review )))". Allmusic. Retrieved January 17, 2011.

2. Jones, Brad. "Unsane in the Brain". Unsane Biography, October 1994. Retrieved March 31, 2011.

3. Jagernauth, Kevin (October 29, 2015). "Exclusive: Have A Religious Experience With Unsane In Clip From Amphetamine Reptile Doc 'The Color Of Noise'". indiewire.com. IndieWire. Retrieved February 4, 2018.