Particle system

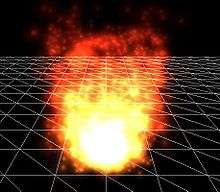

A particle system is a technique in game physics, motion graphics, and computer graphics that uses many minute sprites, 3D models, or other graphic objects to simulate certain kinds of "fuzzy" phenomena, which are otherwise very hard to reproduce with conventional rendering techniques - usually highly chaotic systems, natural phenomena, or processes caused by chemical reactions.

Particle systems were introduced in the 1982 film Star Trek II: The Wrath of Khan for the fictional "Genesis effect".[1] Typical uses include replicating fire, explosions, smoke, moving water (such as a waterfall), sparks, falling leaves, rock falls, clouds, fog, snow, dust, meteor tails, stars and galaxies, and abstract visual effects such as glowing trails and magic spells. These effects use particles that fade out quickly and are then re-emitted from the effect's source. Another technique can be used for things that contain many strands, such as fur, hair, and grass: an entire particle's lifetime is rendered at once, and the result can then be drawn and manipulated as a single strand of the material in question.

Particle systems may be two-dimensional or three-dimensional.

Typical implementation

Typically a particle system's position and motion in 3D space are controlled by what is referred to as an emitter. The emitter acts as the source of the particles, and its location in 3D space determines where they are generated and where they move to. A regular 3D mesh object, such as a cube or a plane, can be used as an emitter. The emitter has attached to it a set of particle behavior parameters. These parameters can include the spawning rate (how many particles are generated per unit of time), the particles' initial velocity vector (the direction and speed with which they are emitted upon creation), particle lifetime (the length of time each individual particle exists before disappearing), particle color, and many more. It is common for all or most of these parameters to be "fuzzy": instead of a precise numeric value, the artist specifies a central value and the degree of randomness allowable on either side of the center (e.g. the average particle's lifetime might be 50 frames ±20%). When a mesh object is used as an emitter, the initial velocity vector is often set to be normal to the individual face(s) of the object, making the particles appear to "spray" directly from each face, although this is optional.
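The "fuzzy" parameters described above can be illustrated with a minimal Python sketch. The function name `sample_fuzzy` and the choice of a uniform distribution are assumptions made for illustration; real tools may use other distributions or expose the variation differently.

```python
import random

def sample_fuzzy(center, variation, rng=random):
    """Draw one value uniformly from center*(1 - variation) to center*(1 + variation).

    `variation` is a fraction, so 0.20 means +/-20% (an illustrative convention).
    """
    return center * (1.0 + rng.uniform(-variation, variation))

# Hypothetical emitter setting: lifetime of 50 frames +/-20%,
# so every sampled lifetime falls between 40 and 60 frames.
rng = random.Random(42)  # seeded only to make the sketch repeatable
lifetimes = [sample_fuzzy(50.0, 0.20, rng) for _ in range(5)]
```

Each newly spawned particle would receive its own sample, which is what gives the effect its irregular, natural look.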

A typical particle system's update loop (which is performed for each frame of animation) can be separated into two distinct stages, the parameter update/simulation stage and the rendering stage.

Simulation stage

During the simulation stage, the number of new particles that must be created is calculated based on spawning rates and the interval between updates, and each of them is spawned in a specific position in 3D space based on the emitter's position and the spawning area specified. Each of a particle's parameters (e.g. velocity, color) is initialized according to the emitter's parameters. At each update, all existing particles are checked to see if they have exceeded their lifetime, in which case they are removed from the simulation. Otherwise, the particles' position and other characteristics are advanced based on a physical simulation, which can be as simple as translating their current position, or as complicated as performing physically accurate trajectory calculations that take into account external forces (gravity, friction, wind, etc.). It is common to perform collision detection between particles and specified 3D objects in the scene to make the particles bounce off or otherwise interact with obstacles in the environment. Collisions between particles are rarely used, as they are computationally expensive and not visually relevant for most simulations.
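The simulation stage can be sketched in Python as follows. This is a simplified illustration rather than any particular engine's implementation: the class names, the semi-implicit Euler integration, gravity as the only external force, and a flat ground plane as the only collision object are all assumptions.

```python
import random
from dataclasses import dataclass

@dataclass
class Particle:
    pos: list            # [x, y, z]
    vel: list            # [vx, vy, vz]
    lifetime: float      # seconds the particle may exist
    age: float = 0.0

class Emitter:
    """Hypothetical point emitter that sprays particles roughly upward."""

    def __init__(self, position, spawn_rate, speed, lifetime, seed=0):
        self.position = position
        self.spawn_rate = spawn_rate   # particles per second
        self.speed = speed
        self.lifetime = lifetime
        self.particles = []
        self._carry = 0.0              # fractional particles owed from earlier updates
        self._rng = random.Random(seed)

    def update(self, dt, gravity=(0.0, -9.8, 0.0), ground_y=0.0, bounce=0.5):
        # 1. Spawn: the count depends on the spawn rate and the update interval.
        self._carry += self.spawn_rate * dt
        count = int(self._carry)
        self._carry -= count
        for _ in range(count):
            spread = 0.2 * self.speed
            vel = [self._rng.uniform(-spread, spread), self.speed,
                   self._rng.uniform(-spread, spread)]
            self.particles.append(Particle(list(self.position), vel, self.lifetime))

        # 2. Age every particle and remove those that exceeded their lifetime.
        for p in self.particles:
            p.age += dt
        self.particles = [p for p in self.particles if p.age < p.lifetime]

        # 3. Advance the survivors: apply gravity, then move (semi-implicit Euler).
        for p in self.particles:
            for i in range(3):
                p.vel[i] += gravity[i] * dt
                p.pos[i] += p.vel[i] * dt
            # 4. Collide with the ground plane only; particles ignore each other.
            if p.pos[1] < ground_y and p.vel[1] < 0.0:
                p.pos[1] = ground_y
                p.vel[1] = -p.vel[1] * bounce
```

Calling `update(dt)` once per frame with the elapsed time keeps the spawn count consistent across varying frame rates, because the fractional remainder is carried over to the next update.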

Rendering stage

After the update is complete, each particle is rendered, usually in the form of a textured billboarded quad (i.e. a quadrilateral that is always facing the viewer). However, this is sometimes not necessary for games; a particle may be rendered as a single pixel in low-resolution or limited-processing-power environments. Conversely, in motion graphics particles tend to be full but small-scale and easy-to-render 3D models, to ensure fidelity even at high resolution. Particles can be rendered as metaballs in off-line rendering; isosurfaces computed from particle metaballs make quite convincing liquids. Finally, 3D mesh objects can "stand in" for the particles: a snowstorm might consist of a single 3D snowflake mesh being duplicated and rotated to match the positions of thousands or millions of particles.
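One common way to make a quad face the viewer is to span it along the camera's right and up directions. The Python sketch below assumes those two directions are supplied as unit vectors in world space; the function name `billboard_corners` is hypothetical, and in practice this step is usually done on the GPU.

```python
def billboard_corners(center, cam_right, cam_up, size):
    """Return the four world-space corners of a camera-facing square quad.

    center    -- particle position (x, y, z)
    cam_right -- camera's right direction as a unit vector (assumed input)
    cam_up    -- camera's up direction as a unit vector (assumed input)
    size      -- edge length of the quad
    """
    half = size / 2.0

    def corner(sign_r, sign_u):
        # Offset the center along the camera axes, so the quad lies
        # parallel to the view plane.
        return tuple(c + sign_r * half * r + sign_u * half * u
                     for c, r, u in zip(center, cam_right, cam_up))

    # Counter-clockwise order starting at the bottom-left corner.
    return [corner(-1, -1), corner(1, -1), corner(1, 1), corner(-1, 1)]
```

Because the corners are built from the camera's axes rather than the world's, the quad stays parallel to the view plane however the camera rotates.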

"Snowflakes" versus "Hair"

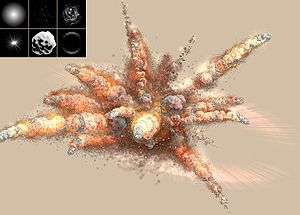

Particle systems can be either animated or static; that is, the lifetime of each particle can either be distributed over time or rendered all at once. The consequence of this distinction is similar to the difference between snowflakes and hair - animated particles are akin to snowflakes, which move around as distinct points in space, and static particles are akin to hair, which consists of a distinct number of curves.

The term "particle system" itself often brings to mind only the animated aspect, which is commonly used to create moving particulate simulations — sparks, rain, fire, etc. In these implementations, each frame of the animation contains each particle at a specific position in its life cycle, and each particle occupies a single point position in space. For effects such as fire or smoke that dissipate, each particle is given a fade out time or fixed lifetime; effects such as snowstorms or rain instead usually terminate the lifetime of the particle once it passes out of a particular field of view.
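The two termination strategies mentioned above can be sketched in Python. Both helpers are hypothetical: the linear fade and the axis-aligned box standing in for the field of view are assumptions chosen for simplicity.

```python
def fade_alpha(age, lifetime, fade_time):
    """Opacity in [0, 1]: opaque until the last `fade_time` of life, then a linear fade."""
    if age >= lifetime:
        return 0.0
    remaining = lifetime - age
    if fade_time <= 0.0 or remaining >= fade_time:
        return 1.0
    return remaining / fade_time

def outside_bounds(pos, lower, upper):
    """True if `pos` has left the axis-aligned box from `lower` to `upper`.

    The box is an illustrative stand-in for the visible region in which
    snow or rain particles are kept alive.
    """
    return any(p < lo or p > hi for p, lo, hi in zip(pos, lower, upper))
```

A smoke particle would be drawn with `fade_alpha(...)` as its opacity, while a snowflake would simply be removed or respawned once `outside_bounds(...)` returns true.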

However, if the entire life cycle of each particle is rendered simultaneously, the result is static particles — strands of material that show the particles' overall trajectory, rather than point particles. These strands can be used to simulate hair, fur, grass, and similar materials. The strands can be controlled with the same velocity vectors, force fields, spawning rates, and deflection parameters that animated particles obey. In addition, the rendered thickness of the strands can be controlled and in some implementations may be varied along the length of the strand. Different combinations of parameters can impart stiffness, limpness, heaviness, bristliness, or any number of other properties. The strands may also use texture mapping to vary the strands' color, length, or other properties across the emitter surface.
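A static strand can be produced by stepping one particle through its whole lifetime and keeping every intermediate position. The Python sketch below makes several assumptions: the function name `trace_strand` is hypothetical, a single constant force acts on the particle, and the integration is simple fixed-step Euler.

```python
def trace_strand(root, velocity, lifetime, steps, force=(0.0, -9.8, 0.0)):
    """Return the list of points visited by one particle over its entire lifetime.

    Instead of drawing the particle at a single moment, all positions are
    kept, and the resulting polyline can be rendered as one strand.
    """
    dt = lifetime / steps
    pos = list(root)
    vel = list(velocity)
    points = [tuple(pos)]            # the strand starts at the emitter surface
    for _ in range(steps):
        for i in range(3):
            vel[i] += force[i] * dt  # the force bends the strand
            pos[i] += vel[i] * dt
        points.append(tuple(pos))
    return points
```

With a large initial velocity relative to the force the strand stays nearly straight (stiff), while a small one lets it droop (limp), which is one way the parameter combinations mentioned above change the look of the material.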

Developer-friendly particle system tools

Particle system code that can be included in game engines, digital content creation systems, and effects applications can be written from scratch or downloaded. Havok provides multiple particle system APIs. Their Havok FX API focuses especially on particle system effects. Ageia - now a subsidiary of Nvidia - provides a particle system and other game physics API that is used in many games, including Unreal Engine 3 games. Both GameMaker Studio and Unity provide a two-dimensional particle system often used by indie, hobbyist, or student game developers, though these systems cannot be imported into other engines. Many other solutions also exist, and particle systems are frequently written from scratch if non-standard effects or behaviors are desired.

References

- Reeves, William (1983). "Particle Systems—A Technique for Modeling a Class of Fuzzy Objects" (PDF). ACM Transactions on Graphics. 2 (2): 91–108. CiteSeerX 10.1.1.517.4835. doi:10.1145/357318.357320. Retrieved 2018-06-13.

External links

- The ocean spray in your face. — Jeff Lander (Graphic Content, July 1998)

- Building an Advanced Particle System — John van der Burg (Gamasutra, June 2000)

- Particle Engine Using Triangle Strips — Jeff Molofee (NeHe)

- Designing an Extensible Particle System using C++ and Templates — Kent Lai (GameDev.net)

- repository of public 3D particle scripts in LSL Second Life format - Ferd Frederix

- GPU-Particlesystems using WebGL - Particle effects directly in the browser using WebGL for calculations.