External memory algorithm

In computing, external memory algorithms or out-of-core algorithms are algorithms that are designed to process data that are too large to fit into a computer's main memory at once. Such algorithms must be optimized to efficiently fetch and access data stored in slow bulk memory (auxiliary memory) such as hard drives or tape drives, or in memory reached over a computer network.[1][2] External memory algorithms are analyzed in the external memory model.

Model

External memory algorithms are analyzed in an idealized model of computation called the external memory model (or I/O model, or disk access model). The external memory model is an abstract machine similar to the random-access machine (RAM) model, but with a cache in addition to main memory. The model captures the fact that read and write operations are much faster in a cache than in main memory, and that reading long contiguous blocks is faster than reading randomly using a disk read-and-write head. The running time of an algorithm in the external memory model is defined as the number of reads and writes to memory required.[3] The model was introduced by Alok Aggarwal and Jeffrey Vitter in 1988.[4] The external memory model is related to the cache-oblivious model, but algorithms in the external memory model may know both the block size and the cache size. For this reason, the model is sometimes referred to as the cache-aware model.[5]

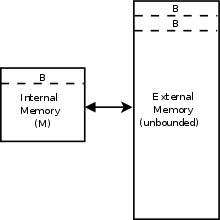

The model consists of a processor with an internal memory or cache of size M, connected to an unbounded external memory. Both the internal and external memory are divided into blocks of size B. One input/output or memory transfer operation consists of moving a block of B contiguous elements between external and internal memory, and the running time of an algorithm is determined by the number of these input/output operations.[4]
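As a worked example of this cost measure, consider reading N contiguous elements once. Each block is transferred exactly once, so the scan costs the number of blocks that the input occupies. The concrete values of N and B below are illustrative assumptions, not figures from the cited sources.

```latex
\text{scan}(N) = \left\lceil \frac{N}{B} \right\rceil \text{ I/Os},
\qquad
N = 10^{9},\; B = 10^{3}
\;\Longrightarrow\;
\text{scan}(N) = 10^{6} \text{ I/Os}.
```

By contrast, visiting the same N elements in an arbitrary order can cost up to N I/Os, one per element, if each access lands in a block that is not currently in internal memory.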

Algorithms

Algorithms in the external memory model take advantage of the fact that retrieving one object from external memory retrieves an entire block of size $B$. This property is sometimes referred to as locality.
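The effect of locality can be illustrated with a small simulation that counts block transfers for a given access order. This is a minimal sketch, not part of the model's definition: the function name count_transfers is hypothetical, and the least-recently-used eviction rule is an assumption, since the model itself lets the algorithm choose which blocks to keep.

```python
from collections import OrderedDict


def count_transfers(access_order, block_size, memory_size):
    """Count block transfers for a sequence of element indices.

    Internal memory holds memory_size // block_size blocks and evicts the
    least recently used block when full (an assumed policy, see lead-in).
    """
    capacity = memory_size // block_size
    if capacity < 1:
        raise ValueError("internal memory must hold at least one block")
    resident = OrderedDict()  # block number -> True, ordered by recency
    transfers = 0
    for index in access_order:
        block = index // block_size
        if block in resident:
            resident.move_to_end(block)  # already in memory: no I/O
        else:
            transfers += 1  # fetch the whole block containing this element
            resident[block] = True
            if len(resident) > capacity:
                resident.popitem(last=False)  # evict least recently used
    return transfers


if __name__ == "__main__":
    n, b, m = 64, 8, 16
    sequential = list(range(n))
    # Visit element 0 of every block, then element 1 of every block, ...
    strided = [j * b + i for i in range(b) for j in range(n // b)]
    print("sequential:", count_transfers(sequential, b, m))
    print("strided:   ", count_transfers(strided, b, m))
```

With these toy parameters the sequential order pays one transfer per block, while the strided order reuses nothing and pays one transfer per element.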

Searching for an element among $N$ objects is possible in the external memory model using a B-tree with branching factor $B$. Using a B-tree, searching, insertion, and deletion can be achieved in $O(\log_B N)$ time (in Big O notation). Information-theoretically, this is the minimum running time possible for these operations, so using a B-tree is asymptotically optimal.[4]
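The logarithmic search cost can be seen in a simplified static search structure in which every node fills one block of B keys and a lookup reads one block per level. This is a sketch under stated assumptions: it is not a full B-tree (it supports no insertion or deletion), and the names build_levels and search are hypothetical.

```python
from bisect import bisect_right


def build_levels(sorted_keys, block_size):
    """Build a static multiway search structure from sorted keys.

    Returns a list of levels from root to leaves; each level is a list of
    blocks holding at most block_size keys. A key in an upper level is the
    smallest key of the corresponding block one level below.
    """
    keys = list(sorted_keys)
    if not keys or block_size < 2:
        raise ValueError("need at least one key and block_size >= 2")
    levels = []
    while True:
        blocks = [keys[i:i + block_size] for i in range(0, len(keys), block_size)]
        levels.append(blocks)
        if len(blocks) == 1:
            break
        keys = [block[0] for block in blocks]
    levels.reverse()
    return levels


def search(levels, key, block_size):
    """Return (found, block_reads), reading exactly one block per level."""
    block_index = 0
    reads = 0
    last = len(levels) - 1
    for depth, blocks in enumerate(levels):
        block = blocks[block_index]
        reads += 1  # one I/O to fetch this node
        pos = bisect_right(block, key) - 1  # last entry <= key, or -1
        if depth == last:
            return (pos >= 0 and block[pos] == key), reads
        # Key below every separator: follow the leftmost child.
        block_index = block_index * block_size + max(pos, 0)
    return False, reads


if __name__ == "__main__":
    levels = build_levels(range(0, 2000, 2), block_size=10)
    print(search(levels, 998, 10))
    print(search(levels, 999, 10))
```

For 1000 keys and B = 10 the structure has three levels, so every lookup reads three blocks, matching the logarithm of N to base B.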

External sorting is sorting in an external memory setting. External sorting can be done via distribution sort, which is similar to quicksort, or via a $\tfrac{M}{B}$-way merge sort. Both variants achieve the asymptotically optimal runtime of $O\left(\tfrac{N}{B}\log_{\frac{M}{B}}\tfrac{N}{B}\right)$ to sort N objects. This bound also applies to the fast Fourier transform in the external memory model.[2]
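The merge sort variant can be sketched as a simulation in which Python lists stand in for files on disk and block transfers are counted rather than performed. Several details here are assumptions made for illustration: the function name external_merge_sort is hypothetical, the merge fan-in is M/B minus one so that one block buffer remains for output, and runs are formed by sorting memory-sized chunks.

```python
import heapq


def external_merge_sort(data, memory_size, block_size):
    """Sort data as if only memory_size elements fit in internal memory.

    Returns (sorted_list, block_transfers). Lists stand in for disk files,
    and every pass is charged one read and one write per block it touches.
    """
    if memory_size < 2 * block_size:
        raise ValueError("internal memory must hold at least two blocks")

    def blocks(n):
        return -(-n // block_size)  # ceil(n / block_size)

    transfers = 0

    # Phase 1: read memory-sized chunks, sort each in memory, write runs.
    runs = []
    for start in range(0, len(data), memory_size):
        run = sorted(data[start:start + memory_size])
        transfers += 2 * blocks(len(run))
        runs.append(run)

    # Phase 2: merge up to fan_in runs at a time until one run remains.
    fan_in = max(2, memory_size // block_size - 1)
    while len(runs) > 1:
        next_runs = []
        for i in range(0, len(runs), fan_in):
            group = runs[i:i + fan_in]
            if len(group) == 1:
                next_runs.append(group[0])  # nothing to merge, no I/O charged
                continue
            merged = list(heapq.merge(*group))
            transfers += 2 * blocks(len(merged))
            next_runs.append(merged)
        runs = next_runs

    return (runs[0] if runs else []), transfers


if __name__ == "__main__":
    import random

    values = random.Random(0).sample(range(1000), 1000)
    result, ios = external_merge_sort(values, memory_size=100, block_size=10)
    print(result == sorted(values), ios)
```

Each merge pass reduces the number of runs by a factor of roughly M/B while touching every block a constant number of times, which is where the logarithm to base M/B in the sorting bound comes from.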

The permutation problem is to rearrange $N$ elements into a specific permutation. This can be done either by sorting, which requires the above sorting runtime, or by inserting each element in order and ignoring the benefit of locality. Thus, permutation can be done in $O\left(\min\left(N, \tfrac{N}{B}\log_{\frac{M}{B}}\tfrac{N}{B}\right)\right)$ time.
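Which of the two strategies wins depends on the block size, as a short calculation shows. The sketch below evaluates the two terms of the bound with all constant factors dropped, so the numbers indicate orders of magnitude only; the function names sort_io and permute_io and the sample parameters are assumptions for illustration.

```python
import math


def sort_io(n, memory_size, block_size):
    """Sorting bound (N/B) * log_{M/B}(N/B), constant factors omitted."""
    if memory_size < 2 * block_size:
        raise ValueError("internal memory must hold at least two blocks")
    n_blocks = n / block_size
    # At least one pass over the data is always needed.
    passes = max(1.0, math.log(n_blocks, memory_size / block_size))
    return n_blocks * passes


def permute_io(n, memory_size, block_size):
    """Permutation bound: the cheaper of element-by-element moves and sorting."""
    return min(n, sort_io(n, memory_size, block_size))


if __name__ == "__main__":
    n = 10**6
    # Large blocks: sorting is far cheaper than one I/O per element.
    print(permute_io(n, memory_size=10**4, block_size=100))
    # Tiny blocks: moving elements one at a time is cheaper than sorting.
    print(permute_io(n, memory_size=4, block_size=2))
```

With large blocks the sorting term dominates the minimum, whereas with very small blocks the direct approach of one transfer per element is the better choice.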

Applications

The external memory model captures the memory hierarchy, which is not modeled in other common models used in analyzing data structures, such as the random access machine, and is useful for proving lower bounds for data structures. The model is also useful for analyzing algorithms that work on datasets too big to fit in internal memory.[4]

A typical example is geographic information systems, especially digital elevation models, where the full data set easily exceeds several gigabytes or even terabytes of data.

This methodology extends beyond general-purpose CPUs to GPU computing and classical digital signal processing. In general-purpose computing on graphics processing units (GPGPU), powerful graphics cards (GPUs) have little memory compared with the more familiar system memory (most often referred to simply as RAM), and CPU-to-GPU memory transfers are slow relative to computation bandwidth.

History

An early use of the term "out-of-core" as an adjective is in 1962 in reference to devices that are other than the core memory of an IBM 360.[6] An early use of the term "out-of-core" with respect to algorithms appears in 1971.[7]

References

- Vitter, J. S. (2001). "External Memory Algorithms and Data Structures: Dealing with MASSIVE DATA". ACM Computing Surveys. 33 (2): 209–271. CiteSeerX 10.1.1.42.7064. doi:10.1145/384192.384193.

- Vitter, J. S. (2008). Algorithms and Data Structures for External Memory (PDF). Foundations and Trends in Theoretical Computer Science. Vol. 2. Hanover, MA: Now Publishers. pp. 305–474. CiteSeerX 10.1.1.140.3731. doi:10.1561/0400000014. ISBN 978-1-60198-106-6.

- Zhang, Donghui; Tsotras, Vassilis J.; et al. (2009). "I/O Model of Computation". Encyclopedia of Database Systems. Springer Science+Business Media. pp. 1333–1334. doi:10.1007/978-0-387-39940-9_752. ISBN 978-0-387-35544-3.

- Aggarwal, Alok; Vitter, Jeffrey (1988). "The input/output complexity of sorting and related problems". Communications of the ACM. 31 (9): 1116–1127. doi:10.1145/48529.48535.

- Demaine, Erik (2002). Cache-Oblivious Algorithms and Data Structures (PDF). Lecture Notes from the EEF Summer School on Massive Data Sets. Aarhus: BRICS.

- NASA SP. NASA. 1962. p. 276.

- Computers in Crisis. ACM. 1971. p. 296.