Neural network software

Neural network software is used to simulate, research, develop, and apply artificial neural networks (software concepts adapted from biological neural networks) and, in some cases, a wider array of adaptive systems such as artificial intelligence and machine learning.

Simulators

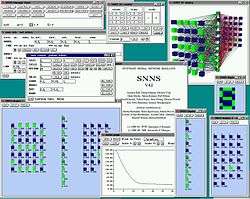

Neural network simulators are software applications that simulate the behavior of artificial or biological neural networks. They focus on one or a limited number of specific types of neural networks. They are typically stand-alone and are not intended to produce general neural networks that can be integrated into other software. Simulators usually have some form of built-in visualization to monitor the training process. Some simulators also visualize the physical structure of the neural network.

Research simulators

Historically, the most common type of neural network software was intended for researching neural network structures and algorithms. The primary purpose of this type of software is to gain, through simulation, a better understanding of the behavior and properties of neural networks. Today, in the study of artificial neural networks, simulators have largely been replaced as research platforms by more general component-based development environments.

Commonly used artificial neural network simulators include the Stuttgart Neural Network Simulator (SNNS), Emergent and Neural Lab.

In the study of biological neural networks, however, simulation software is still the only available approach. Such simulators are used to study the physical, biological, and chemical properties of neural tissue, as well as the electromagnetic impulses between neurons.

Commonly used biological network simulators include Neuron, GENESIS, NEST and Brian.

Data analysis simulators

Unlike research simulators, data analysis simulators are intended for practical applications of artificial neural networks. Their primary focus is on data mining and forecasting, and they usually have some form of preprocessing capability. Unlike the more general development environments, data analysis simulators use a relatively simple, static neural network that can be configured. The majority of data analysis simulators on the market use backpropagation networks or self-organizing maps as their core. The advantage of this type of software is that it is relatively easy to use. Neural Designer is one example of a data analysis simulator.

Simulators for teaching neural network theory

When the Parallel Distributed Processing volumes[1][2][3] were released in 1986–87, they provided some relatively simple software. The original PDP software did not require any programming skills, which led to its adoption by a wide variety of researchers in diverse fields. The original PDP software was developed into a more powerful package called PDP++, which in turn has become an even more powerful platform called Emergent. With each development, the software has become more powerful, but also more daunting for beginners.

In 1997, the tLearn software was released to accompany a book.[4] This was a return to the idea of providing a small, user-friendly simulator designed with the novice in mind. tLearn supported basic feed-forward networks as well as simple recurrent networks, both of which could be trained with the simple backpropagation algorithm. tLearn has not been updated since 1999.
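
To make the idea of a feed-forward network trained by backpropagation concrete, the following is a minimal sketch in Python. It is not tLearn code and does not reproduce tLearn's file formats or options; the class name `FeedForwardNet`, the XOR task, the hidden layer size, the learning rate, and the number of epochs are all assumptions chosen for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class FeedForwardNet:
    """Hypothetical one-hidden-layer network with sigmoid units."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        # One row per unit; the last entry of each row is the bias.
        self.hidden = [[rng.uniform(-1, 1) for _ in range(n_in + 1)]
                       for _ in range(n_hidden)]
        self.output = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]

    def forward(self, x):
        # zip() stops at the shorter sequence, so the bias entry is added separately.
        h = [sigmoid(sum(w * v for w, v in zip(row, x)) + row[-1])
             for row in self.hidden]
        y = sigmoid(sum(w * v for w, v in zip(self.output, h)) + self.output[-1])
        return h, y

    def train_step(self, x, target, lr):
        """One online backpropagation update for squared error."""
        h, y = self.forward(x)
        delta_out = (y - target) * y * (1.0 - y)
        # Hidden deltas are computed before the output weights change.
        delta_hidden = [delta_out * self.output[j] * h[j] * (1.0 - h[j])
                        for j in range(len(h))]
        for j, hj in enumerate(h):
            self.output[j] -= lr * delta_out * hj
        self.output[-1] -= lr * delta_out
        for j, row in enumerate(self.hidden):
            for i, xi in enumerate(x):
                row[i] -= lr * delta_hidden[j] * xi
            row[-1] -= lr * delta_hidden[j]


def mean_squared_error(net, data):
    return sum((net.forward(x)[1] - t) ** 2 for x, t in data) / len(data)


# XOR is a common teaching task because it cannot be solved without a hidden layer.
XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
       ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

net = FeedForwardNet(n_in=2, n_hidden=4)
error_before = mean_squared_error(net, XOR)
for _ in range(5000):
    for x, t in XOR:
        net.train_step(x, t, lr=0.5)
error_after = mean_squared_error(net, XOR)
```

Comparing `error_before` with `error_after` shows whether training reduced the error; how low the final error gets depends on the assumed initial weights and settings.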

In 2011, the Basic Prop simulator was released. Basic Prop is a self-contained application, distributed as a platform-neutral JAR file, that provides much of the same simple functionality as tLearn.

In 2012, Wintempla included a namespace called NN with a set of C++ classes that implement feed-forward networks, probabilistic neural networks, and Kohonen networks. Neural Lab is based on the Wintempla classes. The Neural Lab and Wintempla tutorials explain some of these neural network classes. The main disadvantage of Wintempla is that it compiles only with Microsoft Visual Studio.

Development environments

Development environments for neural networks differ from the software described above in two main respects: they can be used to develop custom types of neural networks, and they support deployment of the neural network outside the environment. In some cases they have advanced preprocessing, analysis, and visualization capabilities.[5]

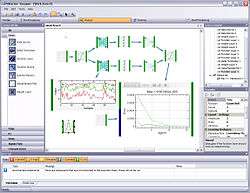

Component-based

A more modern type of development environment, currently favored in both industrial and scientific use, is based on a component-based paradigm. The neural network is constructed by connecting adaptive filter components in a pipe-and-filter flow. This allows greater flexibility, because custom networks can be built and custom components can be used by the network. In many cases this lets adaptive and non-adaptive components work together. The data flow is governed by a control system which, like the adaptation algorithms, is exchangeable. The other important feature is deployment capability.
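
The component-based idea can be sketched in a few lines of Python. The sketch does not reproduce the API of any product named in this article; the class names `Scale`, `LinearNeuron`, and `Pipeline`, the `forward`/`backward` interface, and the `train` control loop are hypothetical. It chains a non-adaptive component (fixed input scaling) with an adaptive one (a linear unit adapted by gradient descent), and keeps the control loop separate so that it could be swapped out.

```python
class Scale:
    """Non-adaptive component: multiplies its input by a fixed factor."""

    def __init__(self, factor):
        self.factor = factor

    def forward(self, x):
        return x * self.factor

    def backward(self, grad, lr):
        # Nothing to adapt; only pass the gradient on to the previous component.
        return grad * self.factor


class LinearNeuron:
    """Adaptive component: y = w * x + b, adapted by gradient descent."""

    def __init__(self):
        self.w = 0.0
        self.b = 0.0
        self.last_input = 0.0

    def forward(self, x):
        self.last_input = x
        return self.w * x + self.b

    def backward(self, grad, lr):
        grad_input = grad * self.w  # computed before the weight changes
        self.w -= lr * grad * self.last_input
        self.b -= lr * grad
        return grad_input


class Pipeline:
    """Connects components so that each one feeds the next."""

    def __init__(self, *components):
        self.components = list(components)

    def forward(self, x):
        for component in self.components:
            x = component.forward(x)
        return x

    def backward(self, grad, lr):
        for component in reversed(self.components):
            grad = component.backward(grad, lr)


def train(pipeline, samples, epochs=500, lr=0.1):
    """A simple control loop; a different loop could drive the same pipeline."""
    for _ in range(epochs):
        for x, target in samples:
            error = pipeline.forward(x) - target
            pipeline.backward(error, lr)


# Illustrative data: the pipeline should learn y = 3x + 1 for x in 0..10.
samples = [(float(x), 3.0 * x + 1.0) for x in range(11)]
pipeline = Pipeline(Scale(0.1), LinearNeuron())
train(pipeline, samples)
prediction = pipeline.forward(4.0)
```

Because every component exposes the same two methods, a custom component can be dropped into the chain without changing the control loop, which is the flexibility the paragraph above describes.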

With the advent of component-based frameworks such as .NET and Java, component-based development environments are capable of deploying the developed neural network to these frameworks as inheritable components. In addition, some software can also deploy these components to several platforms, such as embedded systems.

Component-based development environments include Peltarion Synapse, NeuroDimension NeuroSolutions, Scientific Software Neuro Laboratory, and the LIONsolver integrated software. Free, open-source component-based environments include Encog and Neuroph.

Criticism

A disadvantage of component-based development environments is that they are more complex than simulators. They require more learning to fully operate and are more complicated to develop.

Custom neural networks

Most available implementations of neural networks are, however, custom implementations in various programming languages and on various platforms. Basic types of neural networks are simple to implement directly. There are also many programming libraries that contain neural network functionality and can be used in custom implementations, such as TensorFlow and Theano; these typically provide bindings to languages such as Python, C++, and Java.
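
As an illustration of how little code a basic network type needs, here is a minimal sketch of a single perceptron written directly in Python without any library. The function names, the logical AND task, the zero initial weights, and the learning rate are assumptions made for the example.

```python
def predict(weights, bias, x):
    """Threshold unit: outputs 1 when the weighted sum is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=10, lr=1.0):
    """Classic perceptron learning rule, starting from zero weights."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            # Weights change only when the prediction is wrong (error != 0).
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Logical AND is linearly separable, so the perceptron rule converges on it.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND)
predictions = [predict(weights, bias, x) for x, _ in AND]
```

A custom implementation like this is easy to write and adapt, but it lacks the preprocessing, visualization, and deployment support that the environments described earlier provide.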

Standards

In order for neural network models to be shared by different applications, a common language is necessary. The Predictive Model Markup Language (PMML) has been proposed to address this need. PMML is an XML-based language that provides a way to define neural network models (and other data mining models) and share them between PMML-compliant applications.

PMML provides applications a vendor-independent method of defining models so that proprietary issues and incompatibilities are no longer a barrier to the exchange of models between applications. It allows users to develop models within one vendor's application, and use other vendors' applications to visualize, analyze, evaluate or otherwise use the models. Previously, this was very difficult, but with PMML, the exchange of models between compliant applications is now straightforward.
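
The following sketch suggests how a consuming application might read a network from such an XML description. The element and attribute names (`NeuralNetwork`, `NeuralLayer`, `Neuron`, `Con`, `bias`, `from`, `weight`) follow PMML's neural network vocabulary, but the fragment is deliberately stripped down and is not a valid PMML document: it assumes no XML namespace and omits the header, data dictionary, mining schema, derived input fields, and output definitions. The weights are invented, and the hypothetical `evaluate` function handles only the identity activation function.

```python
import xml.etree.ElementTree as ET

# Simplified, PMML-like fragment with invented weights (not schema-valid PMML).
PMML_FRAGMENT = """
<NeuralNetwork functionName="regression" activationFunction="identity">
  <NeuralInputs>
    <NeuralInput id="x1"/>
    <NeuralInput id="x2"/>
  </NeuralInputs>
  <NeuralLayer>
    <Neuron id="h1" bias="0.5">
      <Con from="x1" weight="1.0"/>
      <Con from="x2" weight="-1.0"/>
    </Neuron>
    <Neuron id="h2" bias="0.0">
      <Con from="x1" weight="2.0"/>
    </Neuron>
  </NeuralLayer>
  <NeuralLayer>
    <Neuron id="out" bias="1.0">
      <Con from="h1" weight="2.0"/>
      <Con from="h2" weight="0.5"/>
    </Neuron>
  </NeuralLayer>
</NeuralNetwork>
"""


def evaluate(xml_text, inputs):
    """Propagate input values through the layers; returns all neuron values by id."""
    root = ET.fromstring(xml_text)
    if root.get("activationFunction") != "identity":
        raise ValueError("this sketch only supports the identity activation")
    values = dict(inputs)
    for layer in root.findall("NeuralLayer"):
        for neuron in layer.findall("Neuron"):
            total = float(neuron.get("bias", "0"))
            for con in neuron.findall("Con"):
                total += float(con.get("weight")) * values[con.get("from")]
            values[neuron.get("id")] = total
    return values


result = evaluate(PMML_FRAGMENT, {"x1": 1.0, "x2": 2.0})
# h1 = 0.5 + 1.0 - 2.0 = -0.5, h2 = 2.0, out = 1.0 - 1.0 + 1.0 = 1.0
```

Because the model is plain data rather than code, any application that understands the same vocabulary can rebuild and evaluate the network, which is the vendor independence described above.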

PMML consumers and producers

A range of products is offered to produce and consume PMML. The list includes the following neural network products:

- R: produces PMML for neural nets and other machine learning models via the package pmml.

- SAS Enterprise Miner: produces PMML for several mining models, including neural networks, linear and logistic regression, decision trees, and other data mining models.

- SPSS: produces PMML for neural networks as well as many other mining models.

- STATISTICA: produces PMML for neural networks, data mining models and traditional statistical models.

See also

- AI accelerator

- Physical neural network

- Comparison of deep learning software

- Data mining

- Integrated development environment

- Logistic regression

- Memristor

References

- Rumelhart, D.E., J.L. McClelland and the PDP Research Group (1986). Parallel Distributed Processing: Explorations in the Microstructure of Cognition. Volume 1: Foundations, Cambridge, MA: MIT Press

- McClelland, J.L., D.E. Rumelhart and the PDP Research Group (1986). Parallel Distributed Processing: Explorations in the Microstructure of Cognition. Volume 2: Psychological and Biological Models, Cambridge, MA: MIT Press

- McClelland, J.L. and D.E. Rumelhart (1987). Explorations in Parallel Distributed Processing Handbook, MIT Press

- Plunkett, K. and J.L. Elman (1997). Exercises in Rethinking Innateness: A Handbook for Connectionist Simulations, MIT Press

- "The R&D Pipeline Continues: Launching Version 11.1—Stephen Wolfram". blog.stephenwolfram.com. Retrieved 2017-03-22.

External links

- Comparison of Neural Network Simulators at University of Colorado