MilkyWay@home

MilkyWay@home is a volunteer distributed computing project in astrophysics running on the Berkeley Open Infrastructure for Network Computing (BOINC) platform. Using spare computing power from over 38,000 computers run by over 27,000 active volunteers as of November 2011,[3] the MilkyWay@home project aims to generate accurate three-dimensional dynamic models of stellar streams in the immediate vicinity of the Milky Way. After SETI@home and Einstein@home, it is the third computing project of this type whose primary purpose is the investigation of phenomena in interstellar space. Its secondary objective is to develop and optimize algorithms for distributed computing.

| | |
|---|---|
| Developer(s) | Rensselaer Polytechnic Institute |
| Development status | Active |
| Operating system | Cross-platform |
| Platform | BOINC |
| Type | Astroinformatics |
| License | GNU GPL v3[1] |
| Average performance | 1,597,056 GFLOPS (May 2020)[2] |
| Active users | 15,322 |
| Total users | 234,297 |
| Active hosts | 26,711 |
| Total hosts | 34,424 |
| Website | milkyway |

Purpose and design

MilkyWay@home is a collaboration between the Rensselaer Polytechnic Institute's departments of Computer Science and Physics, Applied Physics and Astronomy and is supported by the U.S. National Science Foundation. It is operated by a team that includes astrophysicist Heidi Jo Newberg and computer scientists Malik Magdon-Ismail, Bolesław Szymański and Carlos A. Varela.

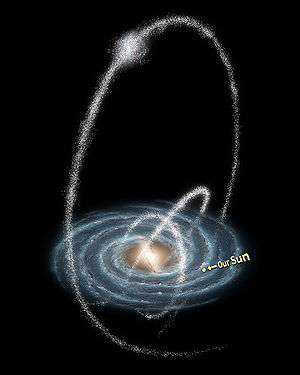

As of mid-2009, the project's main astrophysical interest was the Sagittarius Stream,[4] a stellar stream emanating from the Sagittarius Dwarf Spheroidal Galaxy, which partially penetrates the space occupied by the Milky Way and is believed to be in an unstable orbit around it, probably after a close encounter or collision with the Milky Way[5] that subjected it to strong galactic tidal forces. Mapping such stellar streams and their dynamics with high accuracy is expected to provide crucial clues for understanding the structure, formation, evolution, and gravitational potential distribution of the Milky Way and similar galaxies. It could also provide insight into the dark matter problem. As the project evolves, it might turn its attention to other star streams.

Using data from the Sloan Digital Sky Survey, MilkyWay@home divides starfields into wedges about 2.5 degrees wide and applies self-optimizing probabilistic separation techniques (i.e., evolutionary algorithms) to extract the tidal streams. The program then attempts to create a new, uniformly dense wedge of stars from the input wedge by removing the streams from the data. Each stream removed is characterized by six parameters: the percentage of stars in the stream; the angular position in the stripe; the three spatial components (two angles, plus the radial distance from Earth) defining the removed cylinder; and a measure of width. For each search, the server application keeps track of a population of individual stars, each of which is attached to a possible model of the Milky Way.
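The idea of searching over six stream parameters with an evolutionary algorithm can be illustrated with a minimal Python sketch. It is not the project's code: the field names in `StreamParams`, the Gaussian mutation, the elitist selection scheme, and the `toy_fitness` function (which stands in for whatever score the real application assigns to a candidate model) are all assumptions made for illustration.

```python
import random
from dataclasses import dataclass, astuple


@dataclass(frozen=True)
class StreamParams:
    """Six illustrative parameters for one stream (field names are hypothetical)."""
    star_fraction: float  # share of the wedge's stars assigned to the stream
    stripe_angle: float   # angular position in the stripe
    theta: float          # first angle of the removed cylinder
    phi: float            # second angle of the removed cylinder
    distance: float       # radial distance from Earth
    width: float          # measure of the stream's width


def mutate(params, step, rng):
    """Return a copy with Gaussian noise added to every parameter."""
    return StreamParams(*(v + rng.gauss(0.0, step) for v in astuple(params)))


def toy_fitness(params, target):
    """Placeholder score: negative squared distance to a known target."""
    return -sum((a - b) ** 2 for a, b in zip(astuple(params), astuple(target)))


def evolve(fitness, seed_params, generations=200, pop_size=20, step=0.1, rng=None):
    """Elitist evolutionary search: mutate random parents, keep the best."""
    rng = rng or random.Random(0)
    population = [seed_params] + [mutate(seed_params, step, rng) for _ in range(pop_size)]
    for _ in range(generations):
        children = [mutate(rng.choice(population), step, rng) for _ in range(pop_size)]
        # Parents compete with children, so the best score never gets worse.
        population = sorted(population + children, key=fitness, reverse=True)[:pop_size]
    return population[0]


if __name__ == "__main__":
    target = StreamParams(0.2, 1.0, 0.5, -0.3, 2.0, 0.4)
    seed = StreamParams(0.5, 0.0, 0.0, 0.0, 1.0, 1.0)
    best = evolve(lambda p: toy_fitness(p, target), seed)
    print(best)
```

In a volunteer computing setting, the expensive step would be the fitness evaluation, which is the part that could be farmed out to clients while a server keeps the population; the sketch evaluates everything locally for simplicity.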

Project details and statistics

MilkyWay@home has been active since 2007, and optimized client applications for 32-bit and 64-bit operating systems became available in 2008. Its screensaver capability is limited to a revolving display of users' BOINC statistics, with no graphical component. Instead, animations of the best computer simulations are shared through YouTube.[6]

The work units sent out to clients originally required only 2–4 hours of computation on modern CPUs, but they were scheduled for completion with a short deadline (typically three days). By early 2010, the project routinely sent much larger units that took 15–20 hours of computation time on an average processor core and were valid for about a week from download. This made the project less suitable for computers that are out of operation for periods of several days, or for user accounts that do not allow BOINC to compute in the background. As of 2018, many GPU-based tasks require less than a minute to complete on a high-end graphics card.

The project's data throughput grew rapidly between 2009 and 2010. In mid-June 2009, the project had about 24,000 registered users and about 1,100 participating teams in 149 countries and was operating at 31.7 TFLOPS. By 12 January 2010, these figures had risen to 44,900 users and 1,590 teams in 170 countries, while average computing power had jumped to 1,382 TFLOPS,[7] which would have ranked MilkyWay@home second on the TOP500 list of supercomputers. At that time, MilkyWay@home was the second-largest distributed computing project behind Folding@home, which crossed 5,000 TFLOPS in 2009.
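The scale of that change can be checked with a few lines of Python. The sketch below uses only the user counts and throughput figures quoted above; the per-user averages are a derived illustration (variable names are made up here), not statistics published by the project.

```python
# Figures quoted in the text for mid-June 2009 and 12 January 2010.
users_2009, tflops_2009 = 24_000, 31.7
users_2010, tflops_2010 = 44_900, 1_382.0

user_growth = users_2010 / users_2009           # growth in registered users
throughput_growth = tflops_2010 / tflops_2009   # growth in computing power

# Average throughput per registered user, in GFLOPS (1 TFLOPS = 1,000 GFLOPS).
gflops_per_user_2009 = tflops_2009 * 1_000 / users_2009
gflops_per_user_2010 = tflops_2010 * 1_000 / users_2010

print(f"users grew {user_growth:.2f}x, throughput grew {throughput_growth:.1f}x")
print(f"per user: {gflops_per_user_2009:.2f} -> {gflops_per_user_2010:.2f} GFLOPS")
```

The user base did not quite double, while throughput grew by a factor of more than 40, so the average contribution per registered user rose sharply.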

Throughput massively outpaced new user acquisition mostly because of the deployment of client software that uses commonly available medium- and high-performance graphics processing units (GPUs) for numerical operations in Windows and Linux environments. MilkyWay@home CUDA code for a broad range of Nvidia GPUs was first released in the project's code release directory on June 11, 2009, following experimental releases in the MilkyWay@home(GPU) fork of the project. An OpenCL application for AMD Radeon GPUs is also available and outperforms the CPU application. For example, a task that takes 10 minutes on a Radeon HD 3850 GPU, or 5 minutes on a Radeon HD 4850 GPU, takes 6 hours on one core of an AMD Phenom II processor at 2.8 GHz.
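The speedups implied by that example follow from simple division. This small Python sketch uses only the run times quoted above and assumes they refer to the same task; the variable names are illustrative.

```python
# Run times quoted in the text for the same task.
cpu_minutes = 6 * 60  # one core of an AMD Phenom II at 2.8 GHz
gpu_minutes = {"Radeon HD 3850": 10, "Radeon HD 4850": 5}

# Speedup = CPU time divided by GPU time.
speedups = {gpu: cpu_minutes / minutes for gpu, minutes in gpu_minutes.items()}

for gpu, factor in speedups.items():
    print(f"{gpu}: about {factor:.0f}x faster than one CPU core")
```

On these figures, a single GPU of that generation did the work of roughly 36 to 72 CPU cores of the quoted type.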

MilkyWay@home is a whitelisted Gridcoin project.[8] It is the second-largest generator of Gridcoins.

Scientific results

Large parts of the MilkyWay@home project build on the thesis of Nathan Cole[9] and have been published in The Astrophysical Journal.[10] Other results have been presented at several astrophysical and computing congresses.[11]

References

- milkyway released under GPLv3

- de Zutter W. "MilkyWay@home: Detailed stats". boincstats.com. Retrieved 2020-05-04.

- de Zutter W. "MilkyWay@home: Credit overview". boincstats.com. Retrieved 2017-09-18.

- Static 3D rendering of the Sagittarius stream.

- Simulation of the Sagittarius stream development by Kathryn V. Johnston at Columbia University.

- Videos of the best-discovered computer simulations of this project.

- Data retrieved from the BOINC project statistics page (archived 2014-02-26 at the Wayback Machine) on June 22, 2009, and January 12, 2010, respectively.

- "Gridcoin's Whitelist". Retrieved November 29, 2015.

- Cole, Nathan (2009). Maximum Likelihood Fitting of Tidal Streams with Application to the Sagittarius Dwarf Tidal Tails (PDF) (Ph.D. thesis). Rensselaer Polytechnic Institute. Retrieved January 27, 2012.

- Cole, Nathan; Newberg, Heidi Jo; Magdon-Ismail, Malik; Desell, Travis; Dawsey, Kristopher; Hayashi, Warren; Liu, Xinyang Fred; Purnell, Jonathan; Szymanski, Boleslaw; Varela, Carlos; Willett, Benjamin; Wisniewski, James (2008). "Maximum Likelihood Fitting of Tidal Streams with Application to the Sagittarius Dwarf Tidal Tails" (PDF). The Astrophysical Journal. 683 (2): 750–766. arXiv:0805.2121. Bibcode:2008ApJ...683..750C. doi:10.1086/589681.

- For an up-to-date list, see the project's web portal.