GOAL agent programming language

GOAL is an agent programming language for programming cognitive agents. GOAL agents derive their choice of action from their beliefs and goals. The language provides the basic building blocks for designing and implementing cognitive agents: programming constructs that facilitate the manipulation of an agent's beliefs and goals and that structure its decision-making. It offers an intuitive programming framework based on common sense or practical reasoning.

Overview

The main features of GOAL include:

- Declarative beliefs: Agents use a symbolic, logical language to represent the information they have, and their beliefs or knowledge about the environment they act upon in order to achieve their goals. This knowledge representation language is not fixed by GOAL but, in principle, may be varied according to the needs of the programmer.

- Declarative goals: Agents may have multiple goals that specify what the agent wants to achieve at some moment in the near or distant future. Declarative goals specify a state of the environment that the agent wants to establish; they do not specify actions or procedures for achieving such states.

- Blind commitment strategy: Agents commit to their goals and drop goals only when they have been achieved. This commitment strategy, called a blind commitment strategy in the literature, is the default strategy used by GOAL agents. Cognitive agents are assumed to not have goals that they believe are already achieved, a constraint which has been built into GOAL agents by dropping a goal when it has been completely achieved.

- Rule-based action selection: Agents use so-called action rules to select actions, given their beliefs and goals. Such rules may underspecify the choice of action in the sense that multiple actions may be performed at any time given the action rules of the agent. In that case, a GOAL agent will select an arbitrary enabled action for execution.

- Policy-based intention modules: Agents may focus their attention and put all their efforts on achieving a subset of their goals, using a subset of their actions, using only knowledge relevant to achieving those goals. GOAL provides modules to structure action rules and knowledge dedicated to achieving specific goals. Informally, modules can be viewed as policy-based intentions in the sense of Michael Bratman.

- Communication at the knowledge level: Agents may communicate with each other to exchange information, and to coordinate their actions. GOAL agents communicate using the knowledge representation language that is also used to represent their beliefs and goals.

- Testing: Tests can also be written for GOAL agent programs.
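The blind commitment strategy listed above can be illustrated with a minimal Python sketch (Python rather than GOAL syntax). It assumes, for simplicity, that beliefs are a set of ground facts and that each goal is a conjunction of ground facts, and it ignores knowledge rules. The function name `drop_achieved` is hypothetical and not part of GOAL.

```python
def drop_achieved(goals, beliefs):
    """Keep only the goals that are not yet completely believed to hold.

    Each goal is a frozenset of ground facts (a conjunction); a goal counts
    as achieved only when every one of its facts is in the belief set.
    """
    return [goal for goal in goals if not goal <= beliefs]


beliefs = {"on(a,b)", "on(b,table)"}
goals = [
    frozenset({"on(a,b)"}),                 # fully believed: dropped
    frozenset({"on(b,table)", "on(c,a)"}),  # only partly believed: kept
]
remaining = drop_achieved(goals, beliefs)
```

In this sketch a partially achieved goal stays in the goal base, which mirrors the statement that a goal is dropped only when it has been completely achieved.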

GOAL agent program

A GOAL agent program consists of six different sections: knowledge, beliefs, goals, action rules, action specifications, and percept rules. The knowledge, beliefs and goals are represented in a knowledge representation language such as Prolog, answer set programming, SQL (or Datalog), or the Planning Domain Definition Language. Below, we illustrate the components of a GOAL agent program using Prolog.

The overall structure of a GOAL agent program looks like:

main: <agentname> {

<sections>

}

The GOAL agent code used to illustrate the structure of a GOAL agent is an agent that is able to solve Blocks world problems. The beliefs of the agent represent the current state of the Blocks world whereas the goals of the agent represent the goal state. The knowledge section listed next contains additional conceptual or domain knowledge related to the Blocks world domain.

knowledge{

block(a), block(b), block(c), block(d), block(e), block(f), block(g).

clear(table).

clear(X) :- block(X), not(on(Y,X)).

tower([X]) :- on(X,table).

tower([X,Y|T]) :- on(X,Y), tower([Y|T]).

}

Note that all the blocks listed in the knowledge section reappear in the beliefs section, since the position of each block must be specified to characterize the complete configuration of blocks.

beliefs{

on(a,b), on(b,c), on(c,table), on(d,e), on(e,table), on(f,g), on(g,table).

}

All known blocks are also present in the goals section, which specifies a goal configuration that reuses every block.

goals{

on(a,e), on(b,table), on(c,table), on(d,c), on(e,b), on(f,d), on(g,table).

}

A GOAL agent may have multiple goals at the same time. These goals may even conflict, as each of them may be realized at a different time. For example, an agent might have a goal to watch a movie in the movie theater and a goal to be at home (afterwards).

In GOAL, different notions of goal are distinguished. A primitive goal is a statement that follows from the goal base in conjunction with the concepts defined in the knowledge base. For example, tower([a,e,b]) is a primitive goal and we write goal(tower([a,e,b])) to denote this. Initially, tower([a,e,b]) is also an achievement goal since the agent does not believe that a is on top of e, e is on top of b, and b is on the table. Achievement goals are primitive goals that the agent does not believe to be the case and are denoted by a-goal(tower([a,e,b])). It is also useful to be able to express that a goal has been achieved. For example, goal-a(tower([e,b])) expresses that the tower [e,b] has been achieved with block e on top of block b. Both achievement goals and the notion of a goal achieved can be defined:

a-goal(formula) ::= goal(formula), not(bel(formula))

goal-a(formula) ::= goal(formula), bel(formula)
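The two definitions can be tried out on the Blocks world example with a small Python sketch (not GOAL syntax). It assumes that the belief base and the goal base are each represented as a dictionary mapping a block to what it stands on, and it hard-codes the tower concept from the knowledge section. The names `tower`, `a_goal` and `goal_a` are illustrative stand-ins for the GOAL operators.

```python
def tower(blocks, on):
    """True if `blocks` (top block first) form a tower resting on the table."""
    if not blocks:
        return False
    for upper, lower in zip(blocks, blocks[1:]):
        if on.get(upper) != lower:
            return False
    return on.get(blocks[-1]) == "table"


def a_goal(blocks, goal_on, belief_on):
    """goal(formula), not(bel(formula)): wanted but not yet believed."""
    return tower(blocks, goal_on) and not tower(blocks, belief_on)


def goal_a(blocks, goal_on, belief_on):
    """goal(formula), bel(formula): wanted and already believed."""
    return tower(blocks, goal_on) and tower(blocks, belief_on)


# Belief and goal bases from the example program.
beliefs = {"a": "b", "b": "c", "c": "table", "d": "e",
           "e": "table", "f": "g", "g": "table"}
goals = {"a": "e", "b": "table", "c": "table", "d": "c",
         "e": "b", "f": "d", "g": "table"}

is_achievement_goal = a_goal(["a", "e", "b"], goals, beliefs)
is_goal_achieved = goal_a(["c"], goals, beliefs)
```

Under these assumptions tower([a,e,b]) is an achievement goal in the initial state, while tower([c]) is a goal that has already been achieved because block c is on the table in both bases.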

There is a substantial body of work on defining the concept of an achievement goal in the agent literature (see the references).

GOAL is a rule-based programming language. Rules are structured into modules. The main module of a GOAL agent specifies a strategy for selecting actions by means of action rules. The first rule below states that moving block X on top of block Y (or, possibly, the table) is an option if such a move is constructive, i.e. moves the block into position. The second rule states that moving a block X to the table is an option if block X is misplaced.

main module{

program{

if a-goal(tower([X,Y|T])), bel(tower([Y|T])) then move(X,Y).

if a-goal(tower([X|T])) then move(X,table).

}

}
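To see which options the two rules above generate in the initial state, the following Python sketch (not GOAL syntax) enumerates the towers derivable from the belief and goal bases and evaluates both rule conditions. It assumes a dictionary representation of the on relation and an acyclic configuration; the helper names `towers` and `rule_options` are hypothetical.

```python
def towers(on):
    """All towers (top block first, as tuples) that rest on the table."""
    result = set()
    for block in on:
        chain = [block]
        # Follow the on relation downwards until the table is reached.
        while on.get(chain[-1]) not in (None, "table"):
            chain.append(on[chain[-1]])
        if on.get(chain[-1]) == "table":
            result.add(tuple(chain))
    return result


def rule_options(goal_on, belief_on):
    """Return the move options produced by the two action rules."""
    believed = towers(belief_on)
    achievement_goals = towers(goal_on) - believed
    # Rule 1: a-goal(tower([X,Y|T])), bel(tower([Y|T])) -> move(X,Y)
    constructive = {(t[0], t[1]) for t in achievement_goals
                    if len(t) >= 2 and t[1:] in believed}
    # Rule 2: a-goal(tower([X|T])) -> move(X,table)
    to_table = {(t[0], "table") for t in achievement_goals}
    return constructive, to_table


beliefs = {"a": "b", "b": "c", "c": "table", "d": "e",
           "e": "table", "f": "g", "g": "table"}
goals = {"a": "e", "b": "table", "c": "table", "d": "c",
         "e": "b", "f": "d", "g": "table"}

constructive, to_table = rule_options(goals, beliefs)
```

With the example beliefs and goals, the first rule yields only move(d,c), and the second yields a move to the table for blocks a, b, d, e and f. Whether each option is actually enabled still depends on the precondition of the move action, which this sketch does not check.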

Actions, such as the move action used above, are specified using a STRIPS-style specification of preconditions and postconditions. A precondition specifies when the action can be performed (is enabled). A postcondition specifies what the effects of performing the action are.

actionspec{

move(X,Y) {

pre{ clear(X), clear(Y), on(X,Z), not(X=Y) }

post{ not(on(X,Z)), on(X,Y) }

}

}
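The precondition and postcondition of move can be mimicked in a few lines of Python (a sketch, not GOAL syntax). It assumes that the belief base is a dictionary mapping each block to what it stands on and that every block has an entry in that dictionary; the function names are illustrative only.

```python
def clear(x, on):
    """The table is always clear; a block is clear if nothing stands on it."""
    return x == "table" or (x in on and x not in on.values())


def move(x, y, on):
    """Return the state after move(x, y), or None if the precondition fails."""
    # pre{ clear(X), clear(Y), on(X,Z), not(X=Y) }
    if not (clear(x, on) and clear(y, on) and x in on and x != y):
        return None
    # post{ not(on(X,Z)), on(X,Y) }: replace the old position of X by Y.
    new_on = dict(on)
    new_on[x] = y
    return new_on


beliefs = {"a": "b", "b": "c", "c": "table", "d": "e",
           "e": "table", "f": "g", "g": "table"}

after_move = move("a", "table", beliefs)   # a is clear, so this is enabled
blocked = move("d", "c", beliefs)          # c has b on top, so not enabled
```

Returning a new dictionary rather than updating in place keeps the old state available, which is convenient when comparing the situation before and after an action.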

Finally, the event module consists of rules for processing events such as percepts received from the environment. The rule below specifies that for every percept received indicating that block X is on block Y, where X is believed to be on top of some Z different from Y, the new fact on(X,Y) is added to the belief base and the atom on(X,Z) is removed.

event module{

program{

forall bel( percept(on(X,Y)), on(X,Z), not(Y=Z) ) do insert(on(X,Y), not(on(X,Z))).

}

}
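The effect of this percept rule can be sketched in Python (not GOAL syntax), assuming that percepts arrive as (X, Y) pairs meaning on(X,Y) and that the belief base is a dictionary from block to support. The function name `process_percepts` is hypothetical.

```python
def process_percepts(percepts, on):
    """Apply the rule to every percept and return the updated belief base.

    A percept on(X,Y) only triggers an update when X is already believed
    to be on some Z with Z != Y, mirroring the condition of the rule.
    """
    new_on = dict(on)
    for x, y in percepts:
        z = on.get(x)
        if z is not None and z != y:
            new_on[x] = y   # insert on(X,Y) and remove on(X,Z)
    return new_on


beliefs = {"a": "b", "b": "c", "c": "table"}
percepts = [("a", "table"), ("b", "c"), ("h", "a")]
updated = process_percepts(percepts, beliefs)
```

In this sketch the percept about the unknown block h leaves the beliefs unchanged, because the rule only fires for blocks that already have a believed position.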

Related agent programming languages

The GOAL agent programming language is related to but different from other agent programming languages such as AGENT0, AgentSpeak, 2APL, Golog, JACK Intelligent Agents, Jadex, and Jason. The distinguishing feature of GOAL is the concept of a declarative goal. Goals of a GOAL agent describe what an agent wants to achieve, not how to achieve it. Unlike agents in other languages, GOAL agents are committed to their goals and only remove a goal when it has been completely achieved. GOAL provides a programming framework with a strong focus on declarative programming and the reasoning capabilities required by cognitive agents.

See also

- Agent communication language

- Autonomous agent

- Cognitive architecture

- Declarative programming

- Practical reasoning

- Rational agent

References

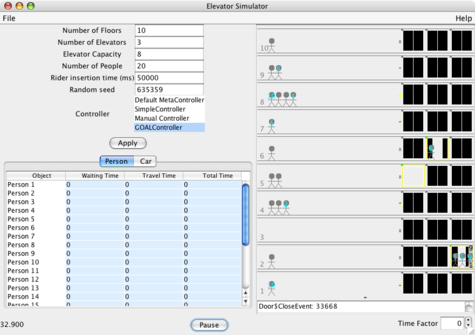

- The elevator simulator is originally written by Chris Dailey and Neil McKellar and is available in its original form via http://sourceforge.net/projects/elevatorsim.

Literature on the notion of a goal:

- Lars Braubach, Alexander Pokahr, Daniel Moldt and Winfried Lamersdorf (2004). Goal Representation for BDI Agent Systems, in: The Second International Workshop on Programming Multiagent Systems.

- Philip R. Cohen and Hector J. Levesque (1990). Intention Is Choice with Commitment. Artificial Intelligence 42, 213–261.

- Andreas Herzig and D. Longin (2004). C&L intention revisited. In: Proc. of the 9th Int. Conference Principles of Knowledge Representation and Reasoning (KR’04), 527–535.

- Koen V. Hindriks, Frank S. de Boer, Wiebe van der Hoek, John-Jules Ch. Meyer (2000). Agent Programming with Declarative Goals. In: Proc. of the 7th Int. Workshop on Intelligent Agents VII (ATAL’00), pp. 228–243.

- Anand S. Rao and Michael P. Georgeff (1993). Intentions and Rational Commitment. Tech. Rep. 8, Australian Artificial Intelligence Institute.

- Birna van Riemsdijk, Mehdi Dastani, John-Jules Ch. Meyer (2009). Goals in Conflict: Semantic Foundations of Goals in Agent Programming. International Journal of Autonomous Agents and Multi-Agent Systems.