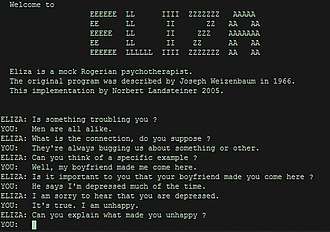

ELIZA

ELIZA is an early natural language processing computer program created from 1964 to 1966[1] at the MIT Artificial Intelligence Laboratory by Joseph Weizenbaum.[2] Created to demonstrate the superficiality of communication between humans and machines, ELIZA simulated conversation by using a "pattern matching" and substitution methodology that gave users an illusion of understanding on the part of the program, but it had no built-in framework for contextualizing events.[3][4] Directives on how to interact were provided by "scripts", originally written in MAD-Slip, which allowed ELIZA to process user inputs and engage in discourse following the rules and directions of the script. The most famous script, DOCTOR, simulated a Rogerian psychotherapist (modeled on Carl Rogers, who was known for reflecting patients' own statements back to them)[5][6] and used rules, dictated in the script, to respond to user inputs with non-directional questions. As such, ELIZA was one of the first chatterbots and one of the first programs capable of attempting the Turing test.

ELIZA's creator, Weizenbaum, regarded the program as a method to show the superficiality of communication between man and machine, but was surprised by the number of individuals, including his own secretary, who attributed human-like feelings to the computer program.[2] Many academics believed that the program would be able to positively influence the lives of many people, particularly those suffering from psychological issues, and that it could aid doctors treating such patients.[2][7] Although ELIZA was capable of engaging in discourse, it could not converse with true understanding.[8] Nevertheless, many early users were convinced of ELIZA's intelligence and understanding, despite Weizenbaum's insistence to the contrary.

Overview

Joseph Weizenbaum's ELIZA, running the DOCTOR script, was created to provide a parody of "the responses of a non-directional psychotherapist in an initial psychiatric interview"[9] and to "demonstrate that the communication between man and machine was superficial".[10] While ELIZA is best known for acting in the manner of a psychotherapist, this behavior comes from the data and instructions supplied by the DOCTOR script.[11] ELIZA itself examined the text for keywords, assigned values to those keywords, and transformed the input into an output; the script that ELIZA ran determined the keywords, set their values, and set the rules for transforming input into output.[12] Weizenbaum chose to set the DOCTOR script in the context of psychotherapy to "sidestep the problem of giving the program a data base of real-world knowledge",[2] because in a Rogerian therapeutic situation the program had only to reflect back the patient's statements.[2] The algorithms of DOCTOR produced deceptively intelligent responses, which misled many people when they first used the program.[13]

Weizenbaum named his program ELIZA after Eliza Doolittle, a working-class character in George Bernard Shaw's Pygmalion. According to Weizenbaum, ELIZA's ability to be "incrementally improved" by various users made it similar to Eliza Doolittle,[12] who is taught to speak with an upper-class accent in Shaw's play.[14] However, unlike the character in Shaw's play, ELIZA cannot learn new patterns of speech or new words through interaction alone. Edits must be made directly to ELIZA's active script to change the way the program operates.

Weizenbaum first implemented ELIZA in his own SLIP list-processing language. Depending on the user's initial entries, the illusion of human intelligence could either take hold or be dispelled within a few exchanges. Some of ELIZA's responses were so convincing that Weizenbaum and several others recounted anecdotes of users becoming emotionally attached to the program, occasionally forgetting that they were conversing with a computer.[2] Weizenbaum's own secretary reportedly asked him to leave the room so that she and ELIZA could have a real conversation. Weizenbaum was surprised by this, later writing: "I had not realized ... that extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people."[15]

In 1966, interactive computing (via a teletype) was new. It would be 15 years before the personal computer became familiar to the general public, and three decades before most people encountered attempts at natural language processing in Internet services like Ask.com or in PC help systems such as Microsoft Office's Clippit. Although those later programs drew on years of research and work, ELIZA remains a milestone simply because it was the first time a programmer had attempted such a human-machine interaction with the goal of creating the illusion (however brief) of human–human interaction.

At ICCC 1972, ELIZA met another early artificial-intelligence program, PARRY, and the two held a computer-only conversation. While ELIZA was built to be a "Doctor", PARRY was intended to simulate a patient with schizophrenia.[16]

Design

Weizenbaum originally wrote ELIZA in MAD-Slip for the IBM 7094, as a program to make natural-language conversation with a computer possible. To accomplish this, Weizenbaum identified five "fundamental technical problems" for ELIZA to overcome: the identification of critical words, the discovery of a minimal context, the choice of appropriate transformations, the generation of responses appropriate to the transformation or in the absence of critical words, and the provision of an editing capability for ELIZA scripts.[12] Weizenbaum solved these problems while giving ELIZA no built-in contextual framework or universe of discourse.[11] As a consequence, ELIZA needed a script of instructions on how to respond to inputs from users.

To respond to a user's input, ELIZA first examines the text for "keywords".[4] A keyword is a word designated as important by the active ELIZA script, which assigns each keyword a precedence number, or RANK, chosen by the programmer.[8] Any keywords found are placed on a "keystack", with the keyword of the highest RANK at the top. The input sentence is then manipulated and transformed as directed by the rule associated with the highest-ranking keyword.[12] For example, when the DOCTOR script encounters words such as "alike" or "same", it outputs a message about similarity, in this case "In what way?",[3] because these words have a high precedence number. Some words, as dictated by the script, are also transformed regardless of context: first-person pronouns are switched with second-person pronouns and vice versa, since these too have high precedence numbers. Such high-precedence words take priority over conversational patterns and are handled independently of context.
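The keyword scan can be sketched in Python. This is a minimal illustration under stated assumptions, not a reconstruction of Weizenbaum's MAD-Slip code: the tables `KEYWORD_RANKS` and `PRONOUN_SWAPS` and all their entries are hypothetical stand-ins for data a script such as DOCTOR would supply, and `tokenize`, `build_keystack`, and `swap_pronouns` are illustrative names.

```python
import re

# Hypothetical script data: keyword -> RANK (a higher number means higher precedence).
KEYWORD_RANKS = {"alike": 10, "same": 10, "mother": 5}

# Hypothetical substitution table, applied to every word regardless of context.
PRONOUN_SWAPS = {"i": "you", "me": "you", "my": "your", "am": "are",
                 "you": "i", "your": "my"}


def tokenize(text: str) -> list[str]:
    """Split the input into lowercase words, dropping punctuation."""
    return re.findall(r"[a-z']+", text.lower())


def build_keystack(words: list[str]) -> list[str]:
    """Return the distinct keywords in the input, highest RANK first."""
    found = [w for w in dict.fromkeys(words) if w in KEYWORD_RANKS]
    # sorted() is stable even with reverse=True, so equal RANKs keep input order.
    return sorted(found, key=lambda w: KEYWORD_RANKS[w], reverse=True)


def swap_pronouns(words: list[str]) -> list[str]:
    """Swap first- and second-person words; leave all other words unchanged."""
    return [PRONOUN_SWAPS.get(w, w) for w in words]


words = tokenize("I am the same as my mother.")
print(build_keystack(words))           # ['same', 'mother']
print(" ".join(swap_pronouns(words)))  # you are the same as your mother
```

The first element of the sorted list plays the role of the top of the keystack. Keeping the ranks and substitutions as plain data mirrors the article's point that the script, not the engine, decides which words matter.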

After this first examination, ELIZA applies an appropriate transformation rule, which has two parts: the "decomposition rule" and the "reassembly rule".[12] First, the input is reviewed for syntactical patterns in order to establish the minimal context necessary to respond. Using the keywords and other nearby words from the input, different decomposition rules are tested until an appropriate pattern is found. Following the script's rules, the sentence is then "dismantled" into its component parts as the "decomposition rule for the highest-ranking keyword" dictates. The example that Weizenbaum gives is the input "I are very helpful" (recall that this "I" is the user's "You" after transformation), which is broken into (1) an empty segment, (2) "I", (3) "are", and (4) "very helpful". The decomposition rule has thus broken the phrase into four segments that contain both the keywords and the information in the sentence.[12]
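A decomposition rule can be modeled as a template matched against the words of the already-transformed input. In the hypothetical sketch below, a `0` in the template matches any run of words, including none, following the notation of Weizenbaum's 1966 paper; every other template token must match exactly one word. The function name `decompose` and its shortest-first search order are assumptions of this sketch.

```python
from typing import Optional


def decompose(template: list[str], words: list[str]) -> Optional[list[str]]:
    """Match `words` against a decomposition template.

    "0" matches any run of words (possibly empty); any other token must equal
    the next word, ignoring case. On success, returns one fragment per template
    element (multi-word fragments joined by spaces); otherwise returns None.
    """
    if not template:
        # The match succeeds only if every input word has been consumed.
        return [] if not words else None
    head, rest = template[0], template[1:]
    if head == "0":
        # Try each possible length for this wildcard, shortest first.
        for n in range(len(words) + 1):
            tail = decompose(rest, words[n:])
            if tail is not None:
                return [" ".join(words[:n])] + tail
        return None
    if words and words[0].lower() == head.lower():
        tail = decompose(rest, words[1:])
        if tail is not None:
            return [words[0]] + tail
    return None


print(decompose(["0", "I", "are", "0"], "I are very helpful".split()))
# ['', 'I', 'are', 'very helpful']
```

The four returned fragments correspond to segments (1) through (4) in Weizenbaum's example; a template that does not fit the input returns None, so the caller can try the next decomposition rule.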

The decomposition rule then designates a particular reassembly rule, or set of reassembly rules, to follow when reconstructing the sentence.[4] The reassembly rule takes the fragments of the input that the decomposition rule created, rearranges them, and adds programmed words to create a response. In Weizenbaum's example above, a reassembly rule would insert the fragments into the phrase "What makes you think I am (4)", resulting in "What makes you think I am very helpful". This example is simple; depending on the decomposition rule, the output could be significantly more complex and use more of the user's input. Once the sentence is reassembled, ELIZA sends it to the user as text on the screen.[12]
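Reassembly can be sketched the same way. The hypothetical `reassemble` function below fills a template written in the "(n)" notation used in the example, where n is the 1-based index of a decomposition fragment; the fragments are hard-coded to the four segments of "I are very helpful".

```python
import re

# Matches a fragment reference such as "(4)" in a reassembly template.
FRAGMENT_REF = re.compile(r"\((\d+)\)")


def reassemble(template: str, fragments: list[str]) -> str:
    """Replace each "(n)" in the template with fragment n (1-based)."""
    filled = FRAGMENT_REF.sub(lambda m: fragments[int(m.group(1)) - 1], template)
    # Collapse the extra spaces left behind when a referenced fragment is empty.
    return " ".join(filled.split())


# Fragments from decomposing "I are very helpful" into four segments.
fragments = ["", "I", "are", "very helpful"]
print(reassemble("What makes you think I am (4)", fragments))
# What makes you think I am very helpful
```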

These steps represent the bulk of the procedure that ELIZA follows to create a response from a typical input, though there are several specialized situations that ELIZA/DOCTOR can respond to. One that Weizenbaum wrote about specifically is input that contains no keyword. One solution was to have ELIZA respond with a remark that lacked content, such as "I see" or "Please go on".[12] The second was to use a "MEMORY" structure, which recorded recent prior inputs and, when an input contained no keywords, used them to create a response referencing part of the earlier conversation.[13] This was possible because of SLIP's ability to tag words for other uses, which allowed ELIZA to examine, store, and repurpose words for use in its outputs.[12]
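The two no-keyword strategies can be combined as in the Python sketch below. The class name `Fallback`, the reply wording "Earlier you said ...", and the choice to prefer a stored MEMORY entry over a content-free remark are assumptions made for illustration, not details taken from the DOCTOR script.

```python
import itertools
from collections import deque


class Fallback:
    """Hypothetical no-keyword handling: MEMORY first, then a content-free remark."""

    def __init__(self, remarks: list[str]) -> None:
        # Content-free remarks such as "I see." are used in rotation.
        self._remarks = itertools.cycle(remarks)
        # First-in, first-out store of fragments saved from earlier inputs.
        self._memory: deque = deque()

    def remember(self, fragment: str) -> None:
        """Save part of an earlier input for later reuse."""
        self._memory.append(fragment)

    def respond(self) -> str:
        """Reply to an input that contained no keyword."""
        if self._memory:
            return f"Earlier you said {self._memory.popleft()}."
        return next(self._remarks)


doctor = Fallback(["I see.", "Please go on."])
print(doctor.respond())  # I see.
doctor.remember("your mother dislikes you")
print(doctor.respond())  # Earlier you said your mother dislikes you.
print(doctor.respond())  # Please go on.
```

Each stored fragment is used once and then discarded, so the program falls back to content-free remarks once its memory is empty.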

While these functions were all built into ELIZA's program, the exact manner in which the program dismantled, examined, and reassembled inputs was determined by the operating script. The script was not static: it could be edited, or a new one created, as a given context required, which is the only sense in which ELIZA can "learn" new information. This allowed the program to be applied in many settings, the best known being the DOCTOR script's simulation of a Rogerian psychotherapist.
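The separation between engine and script can be illustrated with a small Python sketch in which the engine function `respond` holds no conversational content and each script is plain data. For brevity it substitutes Python regular expressions for Weizenbaum's decomposition templates and `str.format` placeholders for his reassembly rules; the `Rule` class and both scripts are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class Rule:
    """One hypothetical script entry for a keyword."""
    rank: int     # precedence of the keyword (its RANK)
    pattern: str  # regex standing in for a decomposition rule
    reply: str    # reassembly template; {0}, {1}, ... refer to regex groups


def respond(script: dict[str, Rule], fallback: str, text: str) -> str:
    """Generic engine: every reply it produces comes from `script` or `fallback`."""
    words = re.findall(r"[a-z']+", text.lower())
    # Distinct keywords present in the input, highest rank first (the keystack).
    keystack = sorted(
        (w for w in dict.fromkeys(words) if w in script),
        key=lambda w: script[w].rank,
        reverse=True,
    )
    for key in keystack:
        rule = script[key]
        match = re.search(rule.pattern, text, re.IGNORECASE)
        if match:
            return rule.reply.format(*match.groups())
    # No keyword, or no rule matched: give a content-free remark.
    return fallback


# Two interchangeable, hypothetical scripts for the same engine.
DOCTOR_LIKE = {
    "alike": Rule(10, r"alike", "In what way?"),
    "mother": Rule(5, r"my mother (.*)", "Tell me more about your family."),
}
ART_SCRIPT = {
    "painting": Rule(5, r"my painting (.*)", "Why do you think your painting {0}?"),
}

print(respond(DOCTOR_LIKE, "Please go on.", "Men are all alike."))
print(respond(ART_SCRIPT, "Tell me more.", "I think my painting looks unfinished"))
```

Swapping `DOCTOR_LIKE` for `ART_SCRIPT` changes what the program says without touching `respond`, which is the sense in which editing or replacing a script changes ELIZA's behavior.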

Weizenbaum's original MAD-Slip implementation was rewritten in Lisp by Bernie Cosell.[17][18] A BASIC version appeared in Creative Computing in 1977 (although it was written in 1973 by Jeff Shrager).[19] This version, which was ported to many of the earliest personal computers, appears to have been subsequently translated into many other programming languages.

Another version of ELIZA popular among software engineers ships with the default release of GNU Emacs and can be started by typing M-x doctor in most modern Emacs versions.

In popular culture

In 1969, George Lucas and Walter Murch incorporated an ELIZA-like dialogue interface in their screenplay for the feature film THX 1138. Inhabitants of the film's underground future world, when stressed, would retreat to "confession booths" and initiate a one-sided ELIZA-formula conversation with a Jesus-faced computer that claimed to be "Omm".

ELIZA influenced a number of early computer games by demonstrating additional kinds of interface designs. Don Daglow wrote an enhanced version of the program called Ecala on a DEC PDP-10 minicomputer at Pomona College in 1973 before writing the computer role-playing game Dungeon (1975).

ELIZA is credited with additional vocals on track 10 of Information Society's self-titled album.

In the 2008 anime RD Sennou Chousashitsu (also known as Real Drive), a character named Eliza Weizenbaum appears as an obvious tribute to ELIZA and Joseph Weizenbaum. Her behavior in the story often mimics the responses of the ELIZA program.

The 2011 video game Deus Ex: Human Revolution features an artificial-intelligence Picus TV Network newsreader named Eliza Cassan.[20]

In January 2018, the twelfth episode of the American sitcom Young Sheldon showed the protagonist "conversing" with ELIZA in the hope of resolving a domestic issue.[21]

On July 19, 2018, ELIZA received a brief mention in the film Zoe, whose protagonist cites it to support his reasoning that his relationship with Zoe, a hyper-realistic AI, was not real.

On August 12, 2019, independent game developer Zachtronics published a visual novel called Eliza, about an AI-based counseling service inspired by ELIZA.[22][23]

Response and legacy

Lay responses to ELIZA disturbed Weizenbaum and motivated him to write his book Computer Power and Human Reason: From Judgment to Calculation, in which he explains the limits of computers and argues that anthropomorphic views of computers reduce the human being, and indeed any life form. In the independent documentary film Plug & Pray (2010), Weizenbaum said that only people who misunderstood ELIZA called it a sensation.[24]

The Israeli poet David Avidan, who was fascinated with future technologies and their relation to art, desired to explore the use of computers for writing literature. He conducted several conversations with an APL implementation of ELIZA and published them – in English, and in his own translation to Hebrew – under the title My Electronic Psychiatrist – Eight Authentic Talks with a Computer. In the foreword he presented it as a form of constrained writing.[25]

There are many programs based on ELIZA in different programming languages. In 1980 a company called "Don't Ask Software" created a version called "Abuse" for the Apple II, Atari, and Commodore 64 computers, which verbally abused the user based on the user's input.[26] Other versions adapted ELIZA to religious themes, such as ones featuring Jesus (both serious and comedic) and another Apple II variant called I Am Buddha. The 1980 game The Prisoner incorporated ELIZA-style interaction within its gameplay. In 1988 the British artist Brian Reffin Smith, a friend of Weizenbaum, created two art-oriented ELIZA-style programs written in BASIC, one called "Critic" and the other "Artist", and showed them running on two separate Amiga 1000 computers at the exhibition "Salamandre" in the Musée du Berry, Bourges, France. Visitors were supposed to help the programs converse by typing into "Artist" what "Critic" said, and vice versa. The secret was that the two programs were identical. GNU Emacs formerly had a psychoanalyze-pinhead command that simulated a session between ELIZA and Zippy the Pinhead.[27] The Zippyisms were removed due to copyright issues, but the DOCTOR program remains.

ELIZA has been referenced in popular culture and continues to inspire programmers and developers working on artificial intelligence. It was also featured in a 2012 exhibit at Harvard University titled "Go Ask A.L.I.C.E", part of a celebration of mathematician Alan Turing's 100th birthday. The exhibit explored Turing's lifelong fascination with the interaction between humans and computers, pointing to ELIZA as one of the earliest realizations of Turing's ideas.[1]


Notes

- "Alan Turing at 100". Harvard Gazette. Retrieved 2016-02-22.

- Weizenbaum, Joseph (1976). Computer Power and Human Reason: From Judgment to Calculation. New York: W. H. Freeman and Company. pp. 2, 3, 6, 182, 189. ISBN 0-7167-0464-1.

- Norvig, Peter (1992). Paradigms of Artificial Intelligence Programming. New York: Morgan Kaufmann Publishers. pp. 151–154. ISBN 1-55860-191-0.

- Weizenbaum, Joseph (January 1966). "ELIZA--A Computer Program for the Study of Natural Language Communication Between Man and Machine" (PDF). Communications of the ACM. 9 (1): 36–45 – via universelle-automation.

- Bassett, Caroline (2019). "The computational therapeutic: exploring Weizenbaum's ELIZA as a history of the present". AI & Society. 34 (4): 803–812. doi:10.1007/s00146-018-0825-9.

- "The Samantha Test". Retrieved 2019-05-25.

- Colby, Kenneth Mark; Watt, James B.; Gilbert, John P. (1966). "A Computer Method of Psychotherapy". The Journal of Nervous and Mental Disease. 142 (2): 148–52. doi:10.1097/00005053-196602000-00005. PMID 5936301.

- Shah, Huma; Warwick, Kevin; Vallverdú, Jordi; Wu, Defeng (2016). "Can machines talk? Comparison of Eliza with modern dialogue systems" (PDF). Computers in Human Behavior. 58: 278–95. doi:10.1016/j.chb.2016.01.004.

- Weizenbaum 1976, p. 188.

- Epstein, J.; Klinkenberg, W. D. (2001). "From Eliza to Internet: A brief history of computerized assessment". Computers in Human Behavior. 17 (3): 295–314. doi:10.1016/S0747-5632(01)00004-8.

- Wortzel, Adrianne (2007). "ELIZA REDUX: A Mutable Iteration". Leonardo. 40 (1): 31–6. doi:10.1162/leon.2007.40.1.31. JSTOR 20206337.

- Weizenbaum, Joseph (1966). "ELIZA—a computer program for the study of natural language communication between man and machine". Communications of the ACM. 9: 36–45. doi:10.1145/365153.365168.

- Wardrip-Fruin, Noah (2009). Expressive Processing: Digital Fictions, Computer Games, and Software Studies. Cambridge: The MIT Press. p. 33. ISBN 9780262013437 – via eBook Collection (EBSCOhost).

- Markoff, John (2008-03-13), "Joseph Weizenbaum, Famed Programmer, Is Dead at 85", The New York Times, retrieved 2009-01-07.

- Weizenbaum, Joseph (1976). Computer power and human reason: from judgment to calculation. W. H. Freeman. p. 7.

- Garber, Megan (June 9, 2014). "When PARRY Met ELIZA: A Ridiculous Chatbot Conversation From 1972". The Atlantic. Archived from the original on 2017-01-18. Retrieved 19 January 2017.

- "Coders at Work: Bernie Cosell". codersatwork.com.

- "elizagen.org". elizagen.org.

- Big Computer Games: Eliza – Your own psychotherapist at www.atariarchives.org.

- Tassi, Paul. "'Deus Ex: Mankind Divided's Ending Is Disappointing In A Different Way". Forbes. Retrieved 2020-04-04.

- McCarthy, Tyler (2018-01-18). "Young Sheldon Episode 12 recap: The family's first computer almost tears it apart". Fox News. Retrieved 2018-01-24.

- O'Connor, Alice (2019-08-01). "The next Zachtronics game is Eliza, a visual novel about AI". Rock, Paper, Shotgun. Retrieved 2019-08-01.

- Machkovech, Sam (August 12, 2019). "Eliza review: Startup culture meets sci-fi in a touching, fascinating tale". Ars Technica. Retrieved August 12, 2019.

- maschafilm. "Content: Plug & Pray Film – Artificial Intelligence – Robots". plugandpray-film.de.

- Avidan, David (2010), Collected Poems, 3, Jerusalem: Hakibbutz Hameuchad, OCLC 804664009.

- Davidson, Steve (January 1983), "Abuse", Electronic Games, 1 (11).

- "lol:> psychoanalyze-pinhead".

References

- McCorduck, Pamela (2004), Machines Who Think (2nd ed.), Natick, MA: A. K. Peters, Ltd., ISBN 1-56881-205-1

- Weizenbaum, Joseph (1976), Computer power and human reason: from judgment to calculation, W. H. Freeman and Company, ISBN 0-7167-0463-3.

- Whitby, Blay (1996), "The Turing Test: AI's Biggest Blind Alley?", in Millican, Peter; Clark, Andy (eds.), Machines and Thought: The Legacy of Alan Turing, 1, Oxford University Press, pp. 53–62, ISBN 0-19-823876-2, archived from the original on 2008-06-19, retrieved 2008-08-11.

- This article is based on material taken from the Free On-line Dictionary of Computing prior to 1 November 2008 and incorporated under the "relicensing" terms of the GFDL, version 1.3 or later.

- Norvig, Peter (1992), Paradigms of Artificial Intelligence Programming, San Francisco: Morgan Kaufmann Publishers, pp. 151–154, 159, 163–169, 175, 181, ISBN 1-55860-191-0.

- Wardrip-Fruin, Noah (2014), Expressive Processing: Digital Fictions, Computer Games, and Software Studies, Cumberland: MIT Press, pp. 24–36, ISBN 9780262517539.

External links

- A page dedicated to the genealogy of Eliza programs.

- Collection of several source code versions at GitHub

- dialogues with colorful personalities of early AI, a collection of dialogues between ELIZA and various conversants, such as a company vice president and PARRY (a simulation of a paranoid schizophrenic)

- Weizenbaum. Rebel at work – Peter Haas, Silvia Holzinger, Documentary film with Joseph Weizenbaum and ELIZA.